element61 is a certified Databricks partner with extensive experience in developing Machine Learning algorithms and set-ups. On this page we give a rough sketch of how to leverage Machine Learning in Databricks. Feel free to contact us if you want to know more.

Why run Machine Learning (ML) in Databricks?

Databricks offers a managed Spark environment built for both Data Discovery and Data Engineering at scale. Running Python or R scripts for machine learning is often limiting because they run on a single machine, so processing and transforming large datasets can be very time-consuming. As a result, users often work with only a subset or a sample of the data, which can lead to less accurate results. This is where Spark comes in handy: it offers a data science platform that leverages parallel processing across a cluster.

Machine Learning in Apache Spark

To bring the speed and compute advantages of Spark to Machine Learning, the open-source community developed Spark MLlib, Apache Spark's scalable machine learning library. MLlib can be used for machine learning in Databricks and consists of common machine learning algorithms as well as Machine Learning workflow utilities, including ML Pipelines.

Complementary to MLlib, other Spark libraries exist. The most notable is:

- sparkdl - When data scientists want to use Deep Learning on Databricks, it is recommended to leverage this Spark library to get solid versions of TensorFlow and Keras working on a Spark backend. Read more about sparkdl on its GitHub page.

```python
import pyspark.ml  # Spark's built-in machine learning library (MLlib)
import sparkdl  # Specific library for Deep Learning
```

Apache Spark's ML Pipelines

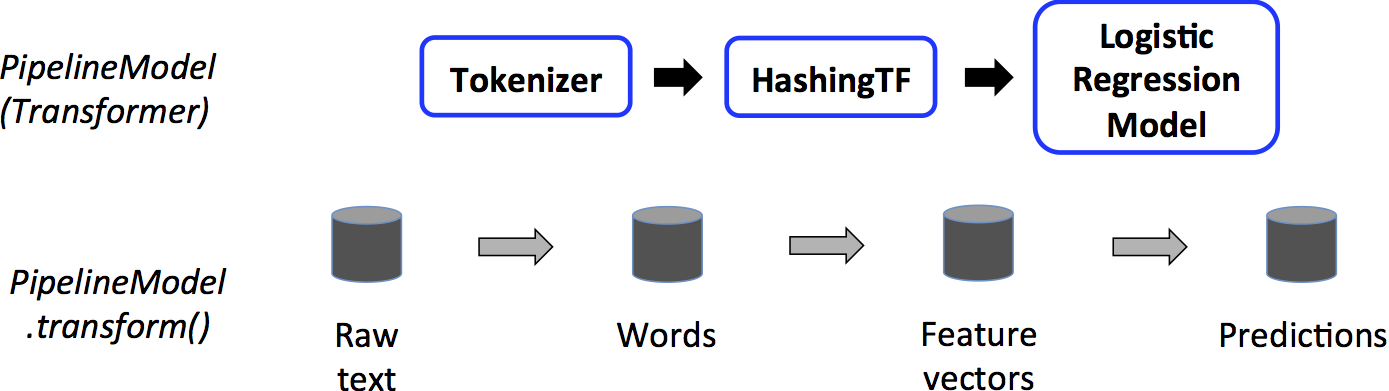

One solid feature available in Apache Spark v2.3 and above is ML Pipelines. With ML Pipelines, data scientists can chain various transformations and model trainings into pipelines and apply these pipelines to different DataFrames. This simplifies combining multiple algorithms into a single workflow and saves time, as the project stays structured and organized.

Getting started is easy: import the Pipeline class from the pyspark.ml package, which ships with PySpark.

```python
from pyspark.ml import Pipeline
```

For example, a single pipeline could contain the tokenizer, the hashing step and the actual model training:

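```python
from pyspark.ml import Pipeline
from pyspark.ml.classification import LogisticRegression
from pyspark.ml.feature import HashingTF, Tokenizer

# Split each text into words
tokenizer = Tokenizer(inputCol="text", outputCol="words")
# Map the words to a fixed-length term-frequency feature vector
hashingTF = HashingTF(inputCol=tokenizer.getOutputCol(), outputCol="features")
# Logistic regression classifier trained on the "features" column
lr = LogisticRegression(maxIter=10, regParam=0.001)
# Chain the three stages into a single pipeline
pipeline = Pipeline(stages=[tokenizer, hashingTF, lr])
```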

To learn more, read the Spark MLlib documentation or contact us.

We can help!

element61 has extensive experience in Machine Learning and Databricks. Contact us for coaching, trainings, advice and implementation.
