What are the special features when running Databricks on Azure?

(Azure Databricks)

Azure Databricks is a managed version of the Databricks platform optimized for running on Azure. Microsoft has tightly integrated the platform into the Azure cloud, connecting it with Azure Active Directory, Azure virtual networks, Azure Key Vault and various Azure storage services.

On this page we outline the specifics and benefits of running Databricks on Azure (called ‘Azure Databricks’).

Active Directory integration

When running Databricks on Azure, user authentication is handled through Azure Active Directory (AAD). In practice, this means that users log in through their Azure single sign-on by default.

Additionally, administrators can set up other Active Directory integrations and policies:

- To fully centralize user management in AD, you can set up ‘System for Cross-domain Identity Management’ (SCIM) provisioning in Azure to automatically sync users and groups from Azure Active Directory to Azure Databricks. In practice, users are created in AD and assigned to an AD group, after which both users and groups are pushed to Azure Databricks. All user management then happens in Azure Active Directory, and there is no need to manage users separately in Azure Databricks. SCIM is not enabled by default and has to be configured explicitly. Read more about SCIM in Azure Databricks or contact us to help you set it up.

- Role-Based Access Control (RBAC) is another security feature, but it is only available in the Premium tier of Azure Databricks. In that tier, administrators or owners can assign different roles to different users. Depending on the assigned role, a user's actions can be limited, for example whether they may delete notebooks, create new clusters and/or pause clusters.

- Azure Active Directory also offers conditional access for Azure Databricks. With this set-up, administrators can define access policies such as requiring multi-factor authentication, blocking or granting access for users from specific locations, or only allowing users to log in during office hours. Read more about conditional access and how to set it up in your Active Directory or contact us.
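Once SCIM provisioning is configured, one way to check that users have actually been pushed from Azure Active Directory is to list them through the Databricks SCIM REST API. The sketch below only builds the request and does not send it. The workspace URL and token are placeholders, and the endpoint path is the preview SCIM path as we understand it, so verify it against the current Azure Databricks documentation before relying on it.

```python
import urllib.request


def build_scim_users_request(workspace_url: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a request that lists the users known to the workspace."""
    # Assumption: the SCIM API is exposed under the preview path below.
    url = workspace_url.rstrip("/") + "/api/2.0/preview/scim/v2/Users"
    headers = {
        "Authorization": f"Bearer {token}",  # personal access token (placeholder)
        "Accept": "application/scim+json",
    }
    return urllib.request.Request(url, headers=headers, method="GET")


# Hypothetical workspace URL and token, for illustration only.
request = build_scim_users_request("https://westus.azuredatabricks.net", "<token>")
print(request.full_url)
```

Sending the request (for example with `urllib.request.urlopen`) should return the synced users as JSON, provided the token belongs to a workspace administrator.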

Virtual Network integration (Premium feature)

By default, deploying an Azure Databricks workspace creates a new, locked virtual network. This virtual network is managed by Databricks and is where all clusters are created.

If you want more control over the network, you can deploy Azure Databricks clusters in your own virtual network. When running Azure Databricks in the Premium tier, you can deploy within an existing virtual network and thus control the security yourself: for example, you can apply traffic restrictions using network security group rules, access on-premises data sources, connect to Azure services using service endpoints, and specify the IP ranges that can access the workspace.
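IP-based restrictions, whether in network security group rules or in a list of ranges allowed to reach the workspace, are expressed as CIDR ranges. The sketch below is not an Azure API call; it only illustrates how such a range check behaves, using Python's standard `ipaddress` module. The ranges are hypothetical documentation addresses.

```python
import ipaddress

# Hypothetical allow-list: an office range and a single VPN gateway address.
ALLOWED_RANGES = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.17/32"),
]


def is_allowed(client_ip: str, allowed=ALLOWED_RANGES) -> bool:
    """Return True when client_ip falls inside at least one allowed CIDR range."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in allowed)


print(is_allowed("203.0.113.42"))  # inside the /24 office range
print(is_allowed("192.0.2.10"))    # outside every allowed range
```

A `/32` range matches exactly one address, which is a common way to admit a single gateway or jump host.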

Credential Passthrough (Premium feature)

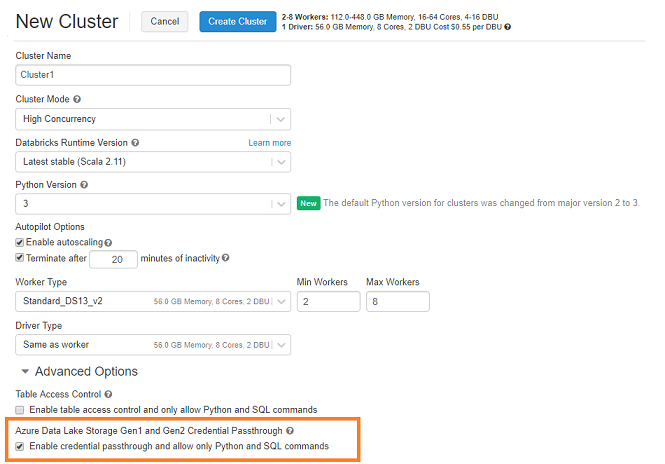

Credential Passthrough is a Premium feature in Azure Databricks that lets users authenticate to Azure Data Lake Store with the Azure Active Directory identity they used to log in to Azure Databricks. With this feature, customers can control which user can access which data through Azure Databricks.

This feature needs to be enabled on the cluster (see screenshot). Once it is configured, users can log in and run read/write commands against Azure Data Lake Store without needing a service principal. A user can only read or write data according to the roles and ACLs that user has been granted on the Azure Data Lake Store.

Credential Passthrough is available on both High Concurrency and Standard clusters.
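To make the "no service principal" point concrete, here is a minimal sketch of what a read looks like on a passthrough-enabled cluster. It assumes an Azure Data Lake Store (Gen1) account addressed with the `adl://` scheme; the account name, the path and the helper names `adls_uri` and `read_lake_csv` are hypothetical. The `spark` object is passed in explicitly so the helper can also be exercised outside a notebook.

```python
def adls_uri(account: str, path: str) -> str:
    """Build an Azure Data Lake Store (Gen1) URI for a file or folder."""
    return f"adl://{account}.azuredatalakestore.net/{path.lstrip('/')}"


def read_lake_csv(spark, account: str, path: str):
    """Read a CSV file from the lake using the identity of the logged-in user.

    No service principal or storage key is configured here: on a cluster with
    Credential Passthrough enabled, access is decided by the roles and ACLs
    that the user holds on the Data Lake Store.
    """
    return spark.read.csv(adls_uri(account, path), header=True)


# In a notebook, where `spark` is predefined (account and path are hypothetical):
# df = read_lake_csv(spark, "mydatalake", "raw/sales.csv")
print(adls_uri("mydatalake", "/raw/sales.csv"))
```

If the logged-in user lacks read permission on the file, the read fails with an authorization error rather than falling back to some shared credential.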

Key Vault integration

To manage secrets in Databricks we use secret scopes. There are two types of secret scopes: Azure Key Vault-backed and Databricks-backed scopes. A Databricks-backed secret scope is stored in an encrypted database that is managed by Databricks. If you create a Key Vault-backed secret scope, you can use all the secrets stored in that Azure Key Vault.

To create a Key Vault integration, you have to go to a custom URL: https://<your_azure_databricks_url>#secrets/createScope (for example, https://westus.azuredatabricks.net#secrets/createScope). There we create the secret scope, after which we can use it in our notebooks (see second screenshot below).
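Reading a secret from a scope in a notebook goes through `dbutils.secrets.get`. The sketch below wraps that call in a small helper; the scope name `kv-scope`, the key `sql-password` and the helper name `read_secret` are hypothetical. `dbutils` is passed in as an argument so the helper does not depend on the notebook's global.

```python
def read_secret(dbutils, scope: str = "kv-scope", key: str = "sql-password") -> str:
    """Fetch one secret from a secret scope via the notebook's `dbutils` object."""
    # For a Key Vault-backed scope, `key` is the name of the secret in the vault.
    return dbutils.secrets.get(scope=scope, key=key)


# In a notebook, where `dbutils` is predefined:
# password = read_secret(dbutils)
```

Keeping the scope and key names as parameters makes it easy to point the same notebook at a different vault per environment.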

Azure Storage Services integration

Azure Databricks integrates well with Azure data storage services such as Azure Blob Storage, Azure Data Lake Storage, Azure Cosmos DB and Azure SQL Data Warehouse. A connection with Azure SQL Database is available as well, but it is harder to work with in practice because it is not possible to run an UPSERT or UPDATE statement (which is possible in the Azure SQL Data Warehouse integration).

We typically recommend mounting your Data Lake directly within your Azure Databricks notebooks. That way, you can interact directly with objects and files within the Data Lake and persist or write files directly to it. Read more about how to mount your Azure Data Lake Storage with Azure Databricks or contact us on how to get started.
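As a rough sketch of what such a mount can look like, the example below assumes Azure Data Lake Storage Gen2 accessed through a service principal with OAuth. The container, account and mount point names are hypothetical, as are the helper names. In practice the client secret would come from a secret scope rather than being typed into the notebook.

```python
def oauth_mount_configs(client_id: str, client_secret: str, tenant_id: str) -> dict:
    """Hadoop settings for mounting ADLS Gen2 with a service principal (assumed setup)."""
    return {
        "fs.azure.account.auth.type": "OAuth",
        "fs.azure.account.oauth.provider.type":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        "fs.azure.account.oauth2.client.id": client_id,
        "fs.azure.account.oauth2.client.secret": client_secret,
        "fs.azure.account.oauth2.client.endpoint":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }


def mount_data_lake(dbutils, container: str, account: str,
                    mount_point: str, configs: dict) -> str:
    """Mount one container of the lake under `mount_point` and return the source URI."""
    source = f"abfss://{container}@{account}.dfs.core.windows.net/"
    dbutils.fs.mount(source=source, mount_point=mount_point, extra_configs=configs)
    return source


# In a notebook, where `dbutils` is predefined (all names are hypothetical):
# configs = oauth_mount_configs("<client-id>", "<client-secret>", "<tenant-id>")
# mount_data_lake(dbutils, "raw", "mydatalake", "/mnt/raw", configs)
```

After mounting, files are reachable under the mount point (for example `/mnt/raw/...`) from every notebook in the workspace, so the mount only has to be created once.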

Getting started

element61 has extensive experience with Databricks. Contact us for coaching, training, advice and implementation.