Because the official documentation does not cover this in detail, we have written a guide on how to create an Azure Machine Learning pipeline and how to run it on an Azure Databricks compute.

At element61, we always recommend using the right tool for the right purpose:

- Azure Machine Learning gives us a workbench to manage the end-to-end Machine Learning lifecycle that can be used by both coding and non-coding data scientists.

- Databricks gives us a scalable compute environment: if we want to run a big data machine learning job, it should run on Databricks.

In this insight, we will look at how Databricks can be used as a compute environment to run machine learning pipelines created with the Azure ML Python SDK. This topic is not well covered in the Azure ML documentation. By using a Databricks compute, big data can be processed efficiently in your ML projects.

What is Azure Machine Learning

Azure Machine Learning is a cloud environment that data scientists use to train, deploy, manage and track machine learning models. In a previous insight, Azure Machine Learning, we covered the different components of the tool and listed the pros and cons of each component.

Azure Machine Learning has some limitations in coping with big data: the code-free components (i.e. Designer and Automated ML) can only run on a single virtual machine, which limits parallelization. Similarly, Python or R code that you run there won't be parallelized. This is where an Azure Databricks compute can help.

What is Databricks

Databricks offers a unified analytics platform that simplifies working with Apache Spark (running on an Azure back end). It is well suited to real-time and big data processing and to AI. Through the Databricks workspace, users can collaborate in notebooks, set up clusters, schedule data jobs and much more.

Want to know more about Databricks: open this summary read in a separate tab

Why is integrating Databricks with Azure Machine Learning useful

By using Databricks as a compute when working with Azure Machine Learning, data scientists can benefit from the parallelization power of Apache Spark. Integrating Databricks into Azure Machine Learning experiments means the compute can scale with the size of the job you are trying to run. In other words, big data can be processed efficiently.

Three ways to use Databricks as a compute environment

Databricks can only be used as a compute environment when creating Azure Machine Learning experiments through the Python SDK. The designer and built-in automated ML functionalities cannot (yet) run on a Databricks compute. You can use Databricks as a compute environment as follows:

- Running Databricks notebooks in your pipeline steps: Databricks notebooks that cover specific machine learning tasks (e.g. data preparation, model training) can be attached to a pipeline step within Azure ML.

- Running PySpark scripts stored in the Databricks File Store (DBFS): Stored Python scripts can be attached to a pipeline step by passing the path in the file store where the script is located.

- Running PySpark scripts that are stored locally on your computer: Scripts that are stored locally on your computer can be attached to your pipeline step and subsequently be submitted to run on a Databricks compute.
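The three options above differ mainly in which arguments are handed to the pipeline step. As a minimal sketch, assuming the `DatabricksStep` parameters `notebook_path`, `python_script_path`, `python_script_name` and `source_directory`, the helper below (a hypothetical name, not part of the SDK) returns the keyword arguments for each option. The DBFS and local paths are made-up examples.

```python
def databricks_step_source(mode, location, source_directory=None):
    """Return the DatabricksStep keyword arguments that select what the step runs.

    Hypothetical helper for illustration only; it is not part of the Azure ML SDK.
    """
    if mode == "notebook":
        # Notebook stored in the Databricks workspace
        return {"notebook_path": location}
    if mode == "dbfs_script":
        # Python script stored in the Databricks File Store (DBFS)
        return {"python_script_path": location}
    if mode == "local_script":
        # Script on your own machine: the name plus the folder that contains it
        if source_directory is None:
            raise ValueError("a local script needs the folder that contains it")
        return {"python_script_name": location, "source_directory": source_directory}
    raise ValueError(f"unknown mode: {mode!r}")


notebook_kwargs = databricks_step_source(
    "notebook", "/Shared/adventure_works_project/data_prep_spark")
dbfs_kwargs = databricks_step_source("dbfs_script", "dbfs:/scripts/train.py")  # example path
local_kwargs = databricks_step_source(
    "local_script", "train.py", source_directory="./scripts")  # example path

print(notebook_kwargs, dbfs_kwargs, local_kwargs, sep="\n")
```

The resulting dictionary could be unpacked into the step constructor with `**`, next to the name, inputs, outputs and compute target.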

Getting started with Azure Machine Learning and Databricks

Because the official documentation does not cover this in detail, we will guide you through an extensive demo on how to create an Azure Machine Learning pipeline and how to run this pipeline on a Databricks compute. We will illustrate this process by using the Adventure Works dataset. Adventure Works is a fictitious bike manufacturer that wants to predict the average monthly spending of its customers based on customer demographics.

The pipeline, covering the entire ML cycle, will be constructed in a Databricks notebook. When running this notebook, an experiment will be created in the Azure ML workspace where all the results and outputs will be stored. To get started with creating an ML pipeline and running it on a Databricks compute, follow the five steps below.

Prerequisites:

- Create an Azure Machine Learning workspace from the Azure Portal

- Create an Azure Databricks workspace in the same subscription where you have your Azure Machine Learning workspace

- Create an Azure storage account where you store the raw data files that will be used for this demo.

Step 1: Create and configure your Databricks cluster

Start by opening your Databricks workspace and click on the Clusters tab.

- Create an interactive cluster with a Non-ML Runtime of 6.5 (Scala 2.11, Spark 2.4.3) with at least two workers.

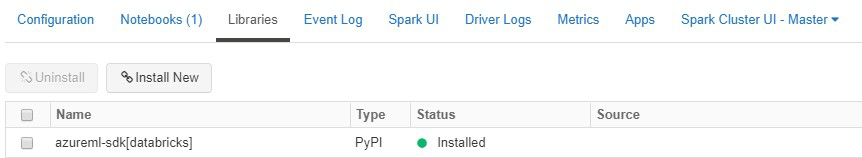

- Install the correct SDK on your cluster by clicking on the newly created cluster and navigating to the Libraries tab. Press the Install New button and install the azureml-sdk[databricks] PyPI package.

Step 2: Create and configure a Databricks notebook

Create a new notebook in your Databricks workspace. Once it is open, we will start by attaching the Azure ML workspace, the Databricks compute and an Azure Blob store to interact with (to read and write the inputs and outputs of our pipeline).

Before doing this, we'll need to import the Azure ML objects used in this notebook, including those specific to Databricks.

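import os
from azureml.core import Workspace, Datastore, Experiment
from azureml.core.compute import ComputeTarget, DatabricksCompute
from azureml.core.compute_target import ComputeTargetException
from azureml.core.databricks import PyPiLibrary
from azureml.data.data_reference import DataReference
from azureml.pipeline.core import Pipeline, PipelineData
from azureml.pipeline.steps import DatabricksStep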

Create an Azure ML workspace object

Next, we'll create an Azure ML workspace object. This will be used later for experiment and pipeline submission from the Databricks notebook to the Azure Machine Learning workspace.

Creating the object can be done by passing the name of your workspace, your Azure subscription ID and the resource group where your Azure ML workspace is located.

ws = Workspace(workspace_name = '<workspace_name>',
               subscription_id = '<subscription_ID>',
               resource_group = '<resource_group_name>')

print('Workspace name: ' + ws.name,
      'Azure region: ' + ws.location,
      'Subscription id: ' + ws.subscription_id,
      'Resource group: ' + ws.resource_group, sep='\n')
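As an alternative to hard-coding these three values in the notebook, the SDK can also load them from a `config.json` file through `Workspace.from_config`. The sketch below only writes such a file, so it runs without the SDK installed. The helper name is hypothetical and the placeholder values must be replaced with your own.

```python
import json
import os
import tempfile


def write_workspace_config(folder, subscription_id, resource_group, workspace_name):
    """Write a config.json with the three keys that identify an Azure ML workspace."""
    path = os.path.join(folder, "config.json")
    with open(path, "w") as f:
        json.dump(
            {
                "subscription_id": subscription_id,
                "resource_group": resource_group,
                "workspace_name": workspace_name,
            },
            f,
            indent=2,
        )
    return path


config_dir = tempfile.mkdtemp()  # any folder reachable from the notebook would do
config_path = write_workspace_config(
    config_dir, "<subscription_ID>", "<resource_group_name>", "<workspace_name>")
print(config_path)

# With azureml-sdk installed and real values filled in, the workspace could then be loaded with:
# ws = Workspace.from_config(path=config_path)
```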

Create a Databricks compute object

All computations should be done on Databricks. To configure this we'll define a compute object and leverage it in those pipeline steps we want to run on Databricks.

A compute object can be registered by passing the name of your cluster, Azure resource group and Databricks workspace and by passing an access token. To generate an access token, click on the name of your workspace (top right of the Databricks interface) and go to user settings.

db_compute_name = os.getenv("DATABRICKS_COMPUTE_NAME", "<name>")  # Databricks compute name
db_resource_group = os.getenv("DATABRICKS_RESOURCE_GROUP", "<resource_group_name>")  # Databricks resource group
db_workspace_name = os.getenv("DATABRICKS_WORKSPACE_NAME", "<workspace_name>")  # Databricks workspace name
db_access_token = os.getenv("DATABRICKS_ACCESS_TOKEN", "<access_token>")  # Databricks access token

try:
    # Reuse the compute target if it is already attached to the Azure ML workspace
    databricks_compute = ComputeTarget(workspace = ws, name = db_compute_name)
    print('Compute target already exists')
except ComputeTargetException:
    print('Compute not found, will use below parameters to attach new one')
    print('db_compute_name {}'.format(db_compute_name))
    print('db_resource_group {}'.format(db_resource_group))
    print('db_workspace_name {}'.format(db_workspace_name))
    # The access token is a secret, so it is deliberately not printed
    config = DatabricksCompute.attach_configuration(
        resource_group = db_resource_group,
        workspace_name = db_workspace_name,
        access_token = db_access_token
    )
    databricks_compute = ComputeTarget.attach(workspace = ws, name = db_compute_name, attach_configuration = config)
    databricks_compute.wait_for_completion(True)
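The access token used to attach the compute is a secret, so it is worth keeping it out of notebook output. The sketch below is one possible way to log the attach settings safely. The helper names are hypothetical, the token value is a made-up example, and the check for `<...>` placeholders assumes the defaults used in this demo.

```python
def mask_secret(value, visible=4):
    """Hide all but the last few characters of a secret before logging it."""
    if not value:
        return "<not set>"
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def unresolved_placeholders(settings):
    """Return the names of settings that still hold a '<...>' placeholder or are empty."""
    return [
        name for name, value in settings.items()
        if not value or (value.startswith("<") and value.endswith(">"))
    ]


# Example values only; in the notebook these would come from os.getenv(...)
settings = {
    "db_compute_name": "databricks-compute",
    "db_resource_group": "<resource_group_name>",
    "db_workspace_name": "my-databricks-workspace",
    "db_access_token": "dapi1234567890abcd",
}

for name, value in settings.items():
    shown = mask_secret(value) if name == "db_access_token" else value
    print(name, shown)

missing = unresolved_placeholders(settings)
print("Still to fill in:", missing)
```

Checking for unresolved placeholders before calling the attach code gives a clearer error than a failed attach request.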

Note that contrary to the other Python-SDK pipeline demos, we are attaching a Databricks compute to our workspace (and not an Azure ML compute)

Note that you can run one pipeline step on an Azure ML VM and another step on Databricks: you thus have 100% flexibility

Create a datastore connection

This datastore object will be used to point to the location where the inputs and outputs for each pipeline step are located. By passing these locations to the pipeline steps, the correct inputs can be ingested into the attached notebooks and the outputs are written to the correct location. We want all in- and output data to be linked to our Azure Data Lake Gen 2.

To create such a connection, pass the name of your storage account, the name of your container and the storage account key (which can be found in the Azure Portal).

blob_datastore_name = '<datastore_name>'  # Name under which the datastore is registered in the workspace
container_name = os.getenv("BLOB_CONTAINER", "<container_name>")  # Name of Azure blob container
account_name = os.getenv("BLOB_ACCOUNTNAME", "<account_name>")  # Storage account name
account_key = os.getenv("BLOB_ACCOUNT_KEY", "<account_key>")  # Storage account key

blob_datastore = Datastore.register_azure_blob_container(workspace = ws,
                                                         datastore_name = blob_datastore_name,
                                                         container_name = container_name,
                                                         account_name = account_name,
                                                         account_key = account_key)

# Retrieve the registered datastore to verify the connection
datastore = Datastore.get(ws, datastore_name = blob_datastore_name)
print(datastore.datastore_type)

Once we have a workspace, compute & datastore connection connected to the notebook, we can interact with them easily during pipeline execution.

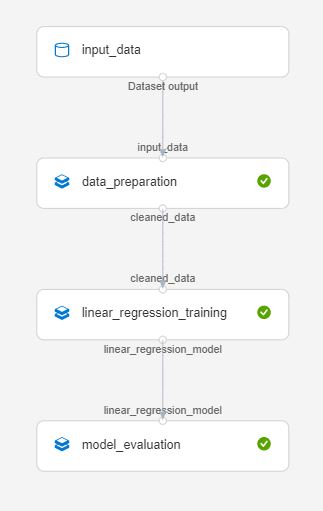

Step 3: Building the pipeline

A Machine Learning pipeline consists of several stages, including data preparation, model training runs and evaluations. Each of these is a pipeline step. In our example we have three pipeline steps:

- Data preparation: In this step, the raw Adventure Works customer and monthly spending data will be ingested, cleaned and merged. The raw data will be read from the attached Data Lake (blob storage) and the output will be written back to the blob store.

- Linear regression training: A linear regression model will be trained based on the cleaned data. The trained model and test set will be written to the blob store.

- Model evaluation: The trained model will be evaluated, and the results will be logged to the Azure ML workspace.

Creating the notebooks that your pipeline will execute

Each pipeline step will have a particular Databricks notebook attached to it. This notebook contains all the code that the step will execute. Azure ML pipelines allow users to attach their code without having to add a lot of Azure ML specific syntax. You will only need to add code for reading inputs and writing outputs, as well as code for logging metrics, parameters and images to the Azure ML workspace.

- Create a folder in your Databricks workspace to store the notebooks we want our pipeline to run

- Create notebooks for data preparation, linear regression training and model evaluation

The rest of the demo will highlight some code snippets in the pipeline step notebooks that need to be added in order to run your pipeline. The bulk of the code in these attached notebooks will be standard Python/PySpark code that is used to perform the machine learning tasks in each of the steps. This code will not be discussed as this is very case specific.

Create input and output data references

Pipeline steps are defined by the underlying notebook as well as the inputs and outputs that are needed to run this notebook. In order to pass information between pipeline steps, the location of these inputs and outputs should be ingested into the notebook. These locations refer to the path in the attached datastore where inputs are stored, and outputs need to be written to. Databricks allows you to interact with Azure Blob and ADLS in two ways.

- Direct access: interact with Azure Blob or ADLS URIs directly. The input or output URIs will be mapped to a Databricks widget parameter in the Databricks notebook.

- Mounting: You will be supplied with additional parameters and secrets that will enable you to mount your ADLS or Azure Blob on your Databricks notebook.
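To make the direct-access option more concrete, the sketch below builds the kind of URI a notebook would read from. It assumes the standard `wasbs://` scheme for Azure Blob and `abfss://` scheme for Azure Data Lake Gen 2. The helper names, container name and account name are made-up examples.

```python
def wasbs_uri(container, account, path=""):
    """Build an Azure Blob URI as understood by Spark on Databricks."""
    base = f"wasbs://{container}@{account}.blob.core.windows.net"
    return f"{base}/{path.lstrip('/')}" if path else base


def abfss_uri(filesystem, account, path=""):
    """Build the equivalent URI for an Azure Data Lake Gen 2 file system."""
    base = f"abfss://{filesystem}@{account}.dfs.core.windows.net"
    return f"{base}/{path.lstrip('/')}" if path else base


# Hypothetical container and account names
raw_data_uri = wasbs_uri("mycontainer", "mystorageaccount", "adventureworks/raw_data")
print(raw_data_uri)
print(abfss_uri("mycontainer", "mystorageaccount", "adventureworks/raw_data"))
```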

We will start by defining the data pointers that we will be passing to our notebooks during execution. Make sure to add the following snippet to the notebook you were using to build the pipeline.

input_data = DataReference(datastore = blob_datastore,
                           data_reference_name = 'input_data',
                           path_on_datastore = 'adventureworks/raw_data')

output_data1 = PipelineData("cleaned_data",
                            datastore = blob_datastore)

output_data2 = PipelineData("linear_regression_model",
                            datastore = blob_datastore)

The input Data Reference refers to the path in your connected datastore that holds the raw data files to be used. These files will be ingested in the first step (data preparation) for cleaning. Cleaned data will then be passed (output 1) from the data preparation step to the model training step. This step has an output (output 2) connecting the training node with the model evaluation node.

Note: When using Data References, you need to specify the exact path in the datastore container to your files. When using Pipeline Data, Azure ML will create a folder in the root directory of your container used to store and version intermediate outputs of your pipeline (versioned by run ID).

In order to pass this information to the pipeline step notebooks using direct access, the following code snippets need to be added to each of these notebooks. The input or output paths will be mapped to a Databricks widget parameter in the Databricks notebook. The arguments of these widget parameters can be used to read the data into the notebook and write the outputs back to the datastore.

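import os

# Location of the raw input data, passed in by the pipeline as a widget parameter
dbutils.widgets.get("input_data")
i = getArgument("input_data")
print(i)

# Location where the cleaned data should be written
dbutils.widgets.get("cleaned_data")
o = getArgument("cleaned_data")
print(o)

# Full paths to the two raw Adventure Works files
customers_input = os.path.join(i, 'AdvWorksCusts.csv')
spending_input = os.path.join(i, 'AW_AveMonthSpend.csv')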
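The snippet above builds file paths with `os.path.join`, which works on a Databricks cluster because it runs on Linux. If you also want to test the same path logic on a Windows machine, a small helper based on `posixpath` keeps the forward slashes that URIs need. This is a sketch with a hypothetical helper name and a made-up base URI.

```python
import posixpath


def join_uri(base, *parts):
    """Join a datastore URI and relative parts using forward slashes on every OS."""
    cleaned = [p.strip("/") for p in parts]
    return posixpath.join(base.rstrip("/"), *cleaned)


# Hypothetical value of the "input_data" widget parameter
i = "wasbs://mycontainer@mystorageaccount.blob.core.windows.net/adventureworks/raw_data/"

customers_input = join_uri(i, "AdvWorksCusts.csv")
spending_input = join_uri(i, "AW_AveMonthSpend.csv")
print(customers_input)
print(spending_input)
```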

In order to pass this information to the pipeline step notebooks using mounts, the following code snippets need to be added to each of these notebooks. You will have to supply your script with additional input parameters. This is shown in the underlying code snippet.

import os

dbutils.widgets.get("input")
i = getArgument("input")
dbutils.widgets.get('cleaned_data')
o = getArgument('cleaned_data')

try:
    # Name of the secret and of the Spark config key, both passed in as widget parameters
    dbutils.widgets.get("input_blob_secretname")
    myinput_blob_secretname = getArgument("input_blob_secretname")
    dbutils.widgets.get("input_blob_config")
    myinput_blob_config = getArgument("input_blob_config")
    dbutils.fs.mount(
        source = i,
        mount_point = "/mnt/input",
        extra_configs = {myinput_blob_config: dbutils.secrets.get(scope = "amlscope", key = myinput_blob_secretname)})
except Exception as e:
    # Mounting fails when the mount point is already in use; print the error so other failures stay visible
    print('datastore already mounted or mount failed: {}'.format(e))

customers_input = os.path.join(i, 'AdvWorksCusts.csv')
spending_input = os.path.join(i, 'AW_AveMonthSpend.csv')

Note: Reading and writing Spark dataframes in the pipeline step notebooks works in exactly the same way with direct access as with mounting.

Note: When using mounts, you do not need a Blob Client to read and write data from/to the attached datastores. This is a more efficient way of reading and writing data (= best practice).

Note: In this case we have mounted our attached blob store using the key stored in an Azure Key Vault (= best practice). Similar code snippets exist for mounting an Azure Data Lake Gen 2; in that case you should leverage OAuth 2.0.
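For the Data Lake Gen 2 case, the mount needs a set of OAuth 2.0 settings instead of a single account key. The sketch below builds that dictionary using the Hadoop ABFS configuration keys documented by Databricks for service principal authentication. The helper name and the example IDs are hypothetical, and in a real notebook the client secret should come from a secret scope rather than from plain text.

```python
def adls_gen2_oauth_configs(client_id, client_secret, tenant_id):
    """Build the extra_configs dictionary for mounting ADLS Gen 2 with a service principal."""
    return {
        "fs.azure.account.auth.type": "OAuth",
        "fs.azure.account.oauth.provider.type":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        "fs.azure.account.oauth2.client.id": client_id,
        "fs.azure.account.oauth2.client.secret": client_secret,
        "fs.azure.account.oauth2.client.endpoint":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }


# Made-up identifiers for illustration
configs = adls_gen2_oauth_configs("my-app-id", "my-app-secret", "my-tenant-id")
print(sorted(configs))

# Inside a Databricks notebook the dictionary could then be passed to the mount call, e.g.:
# dbutils.fs.mount(source = i, mount_point = "/mnt/input", extra_configs = configs)
```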

Build the Databricks pipeline step

Now that we have set up all the inputs and outputs and updated the pipeline step notebooks accordingly, we can start building the pipeline steps themselves. Many different types of pipeline steps can be used when creating Azure ML Python-SDK pipelines, including:

- PythonScriptStep: Adds a step to run a Python script in a Pipeline.

- AdlaStep: Adds a step to run U-SQL script using Azure Data Lake Analytics.

- DataTransferStep: Transfers data between Azure Blob and Data Lake accounts.

- DatabricksStep: Adds a Databricks notebook as a step in a Pipeline.

- HyperDriveStep: Creates a HyperDrive step for hyperparameter tuning in a Pipeline.

- AzureBatchStep: Creates a step for submitting jobs to Azure Batch.

- EstimatorStep: Adds a step to run Estimator in a Pipeline.

- MpiStep: Adds a step to run an MPI job in a Pipeline.

- AutoMLStep: Creates an AutoML step in a Pipeline.

In this demo, we will be using the DatabricksStep. To create a pipeline step, add the following code snippet to the notebook you use to build the pipeline.

data_prep_path = os.getenv("DATABRICKS_PYTHON_SCRIPT_PATH", "/Shared/adventure_works_project/data_prep_spark")  # Databricks notebook path

step1 = DatabricksStep(name = "data_preparation",
                       run_name = 'data_preparation',
                       inputs = [input_data],
                       outputs = [output_data1],
                       num_workers = 1,
                       notebook_path = data_prep_path,
                       pypi_libraries = [PyPiLibrary(package = 'scikit-learn')],
                       compute_target = databricks_compute,
                       allow_reuse = False)

The following arguments need to be passed in order to create a Databricks pipeline step:

- Name: This is the name of the pipeline step.

- Notebook path: Path in the Databricks workspace where the attached notebook can be found.

- Compute target: The compute target to be used for this step (in this case, the Databricks compute we attached to the notebook earlier).

Note: Whenever the pipeline is submitted to the Azure ML workspace and the run is started, Databricks will create job clusters to execute all the pipeline steps. These job clusters need to be configured to make sure they can run the code in the underlying notebook. This can be done by specifying the Spark version as well as the PyPI libraries that need to be installed.

Note: For an overview of all the arguments that can be passed to a Databricks step, see the DatabricksStep reference in the Azure ML SDK documentation.
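To illustrate the job cluster configuration mentioned above, the sketch below collects the cluster-related `DatabricksStep` arguments (`spark_version`, `node_type`, and either `num_workers` or `min_workers` plus `max_workers`) and checks that the worker settings are not mixed. The helper name is hypothetical, and the Spark version and node type are example values that would need to match what your Databricks workspace offers.

```python
def new_cluster_kwargs(spark_version, node_type, num_workers=None,
                       min_workers=None, max_workers=None):
    """Collect job cluster settings, allowing a fixed size or an autoscaling range."""
    fixed_size = num_workers is not None
    autoscale_given = min_workers is not None or max_workers is not None
    if fixed_size and autoscale_given:
        raise ValueError("use num_workers or min_workers/max_workers, not both")

    kwargs = {"spark_version": spark_version, "node_type": node_type}
    if fixed_size:
        kwargs["num_workers"] = num_workers
    elif min_workers is not None and max_workers is not None:
        if min_workers > max_workers:
            raise ValueError("min_workers cannot exceed max_workers")
        kwargs["min_workers"] = min_workers
        kwargs["max_workers"] = max_workers
    else:
        raise ValueError("set num_workers, or both min_workers and max_workers")
    return kwargs


# Example values only
job_cluster = new_cluster_kwargs("6.5.x-scala2.11", "Standard_DS3_v2", num_workers=2)
autoscaling_cluster = new_cluster_kwargs(
    "6.5.x-scala2.11", "Standard_DS3_v2", min_workers=1, max_workers=4)
print(job_cluster)
print(autoscaling_cluster)
```

The dictionary could be unpacked into the `DatabricksStep` constructor with `**job_cluster`, alongside the other arguments of the step.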

Step 4: Submitting the pipeline to the Azure ML workspace

Once all the steps are created, they can be aggregated, and a Pipeline object can be built. The following code snippet can be added to the notebook.

steps = [step1, step2, step3]

Note: We start by aggregating the steps into a list. This variable will be passed to construct a pipeline object. This pipeline object is attached to our workspace. We can validate this pipeline to make sure all the steps are logically connected.

pipeline = Pipeline(workspace = ws, steps = steps)
print('Pipeline is built')

pipeline.validate()
print('Pipeline validation complete')
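What "logically connected" means here is that every step's inputs are produced by an earlier step or come from a datastore. The sketch below is only an illustration of that idea with the three steps of this demo, not the way Azure ML implements validation. It derives an execution order from the input and output names using the standard library `graphlib` module (Python 3.9 or later). The step names for training and evaluation are assumptions.

```python
from graphlib import TopologicalSorter

# Inputs and outputs of each step, by name (training and evaluation names are assumed)
steps = {
    "data_preparation": {"inputs": ["input_data"], "outputs": ["cleaned_data"]},
    "linear_regression_training": {"inputs": ["cleaned_data"],
                                   "outputs": ["linear_regression_model"]},
    "model_evaluation": {"inputs": ["linear_regression_model"], "outputs": []},
}


def execution_order(steps):
    """Order steps so that each one runs after the steps that produce its inputs."""
    # Map every intermediate output to the step that produces it
    producer = {out: name for name, spec in steps.items() for out in spec["outputs"]}
    # For each step, collect the steps it depends on; external inputs have no producer
    graph = {
        name: {producer[i] for i in spec["inputs"] if i in producer}
        for name, spec in steps.items()
    }
    return list(TopologicalSorter(graph).static_order())


order = execution_order(steps)
print(order)
```

If two steps depended on each other's outputs, `static_order` would raise a `CycleError`, which is the kind of problem a validation step is meant to surface before submission.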

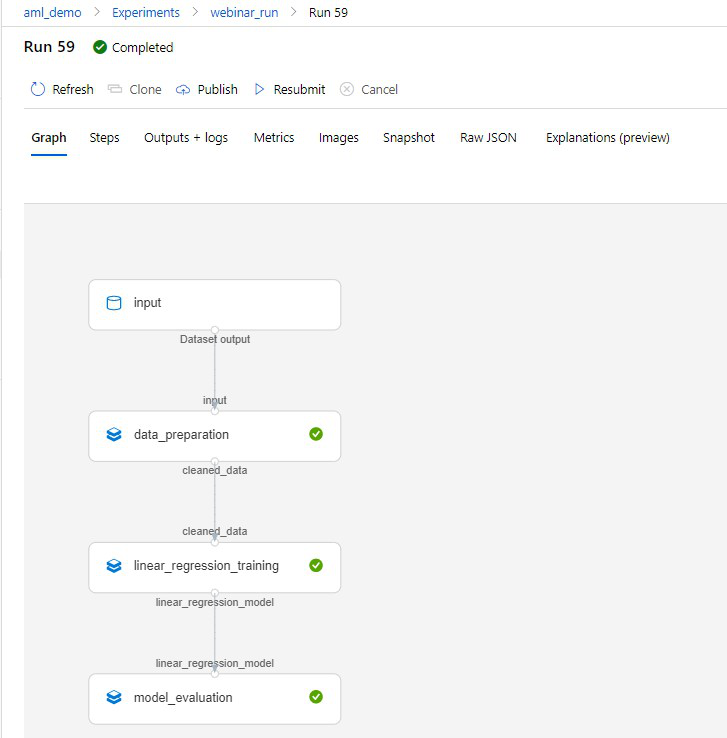

exp_name = 'webinar_run'
exp = Experiment(ws, exp_name)

pipeline_run = exp.submit(pipeline)
print('Pipeline is submitted for execution')

pipeline_run.wait_for_completion(show_output = False)

Note: The code above creates an experiment and attaches it to our Azure ML workspace. We then submit the Pipeline object to the experiment, which triggers the pipeline run. Once the run is finished, the outputs can be viewed in the Azure ML workspace.

Step 5: Checking the results in the Azure ML workspace

Once the pipeline has been submitted, an ongoing run will appear in the Azure ML workspace under the Experiments tab. Here, you can drill down into each step to check the results and outputs.

Note: Debugging the notebooks that you are running in the pipeline can be done by clicking on the Outputs + logs tab and going to the stdoutlogs.txt file in the logs/azureml folder. This will redirect you to Databricks and show all the intermediate outputs of the Databricks notebook.

Strengths and weaknesses of the Databricks - Azure ML integration

Strengths

- Using Databricks as a compute environment allows big data to be processed efficiently by leveraging the power of Apache Spark.

- If you are familiar with using the Python SDK to create Azure ML pipelines, learning how to integrate Databricks is really easy.

- Data scientists can work in an environment they are used to and can convert existing notebooks into pipeline steps by adding only a small piece of Azure ML syntax.

- Smooth integration of the Azure ML workspace and the Databricks workspace (especially nice for debugging purposes).

Weaknesses

- Lack of clear/elaborate tutorials showing how to connect Databricks.

Alternative

- As an alternative to Azure ML, one could use MLflow within Databricks for ML lifecycle management. However, to deploy the model as an API on Azure Kubernetes Service, one would use Azure ML functionality anyway.

- In conclusion: element61 recommends that Azure customers use Azure ML as an end-to-end ML lifecycle tool.

Our expertise

Building on our partnership with both Microsoft and Databricks, element61 has solid experience in setting up Azure Machine Learning and Azure Databricks.
