What is Airflow

Airflow is an open-source project that was started at Airbnb in 2014. It is now a top-level project of the Apache Software Foundation.

Airflow is a workflow management platform that lets you programmatically schedule, orchestrate and monitor workflows. All workflows in Airflow are written in Python and defined as directed acyclic graphs (DAGs).

Why Airflow

Open Source

Backed by a strong community, Airflow evolves quickly, with new versions and integrations released every few months.

Custom plugins

Airflow lets you write custom plugins, which you can then use directly within your tasks. This extensibility has led to a large collection of so-called operators that integrate with a wide variety of systems and tools. As a result, Airflow has great potential in a multi-cloud setup, where you may need to combine services from different cloud providers.
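To give an idea of what a custom operator looks like: in Airflow 2.x, a custom operator is a Python class that subclasses BaseOperator and implements an execute(context) method. The sketch below keeps that shape but, so that it runs without Airflow installed, defines a minimal stand-in base class; in a real project you would import BaseOperator from airflow.models instead. The GreetOperator class and its name parameter are hypothetical.

```python
from typing import Any, Dict


class BaseOperator:
    """Minimal stand-in for airflow.models.BaseOperator (assumption, for illustration only).

    The real class accepts many more arguments; only task_id is modelled here.
    """

    def __init__(self, task_id: str, **kwargs: Any) -> None:
        self.task_id = task_id

    def execute(self, context: Dict[str, Any]) -> Any:
        raise NotImplementedError


class GreetOperator(BaseOperator):
    """Hypothetical custom operator: its work happens in execute()."""

    def __init__(self, name: str, **kwargs: Any) -> None:
        super().__init__(**kwargs)
        self.name = name

    def execute(self, context: Dict[str, Any]) -> str:
        # In Airflow, the value returned by execute() is pushed to XCom by default.
        message = f"Hello {self.name} from task {self.task_id}"
        print(message)
        return message


if __name__ == "__main__":
    GreetOperator(task_id="greet", name="element61").execute(context={})
```

Inside a DAG, such an operator is instantiated like any built-in operator, which is what makes community operators for external systems so easy to reuse.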

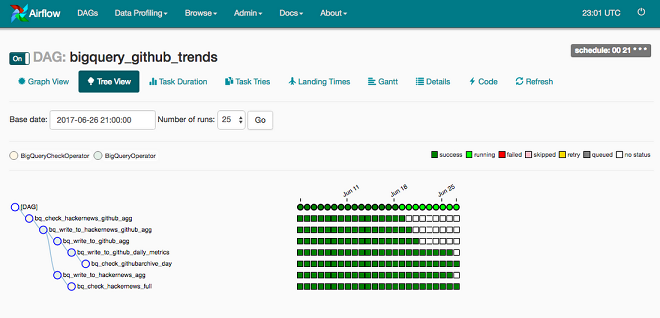

User Interface

Airflow lets you easily monitor and debug your workflows through a UI. The built-in UI provides a neat overview of the DAGs and tasks: you can check the status of your jobs, the code that generates the DAGs, and detailed summaries of historical runs. Moreover, triggering tasks manually or rerunning earlier tasks is intuitive and supported within the user interface.

Integration

Because Airflow pipelines are defined entirely in Python code, they can all be kept under version control, and flow-related constructs such as loops and conditional statements are easy to integrate.
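Because a pipeline definition is ordinary Python, a loop can generate one task per item and an if statement can decide which tasks exist. The sketch below models tasks as plain dictionaries rather than Airflow operators so that it runs on its own; the table names and the include_archive flag are hypothetical.

```python
from typing import Dict, List

# Hypothetical list of source tables; in a real DAG file this might come from configuration.
TABLES = ["customers", "orders", "archive_orders"]


def build_tasks(tables: List[str], include_archive: bool) -> List[Dict[str, str]]:
    """Generate one 'load' task definition per table, using a loop and a condition."""
    tasks = []
    for table in tables:
        # Conditional: skip archive tables unless they are explicitly requested.
        if table.startswith("archive_") and not include_archive:
            continue
        tasks.append({"task_id": f"load_{table}", "table": table})
    return tasks


if __name__ == "__main__":
    for task in build_tasks(TABLES, include_archive=False):
        print(task["task_id"])
```

In an actual DAG file, the same loop would instantiate an operator per table instead of building a dictionary.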

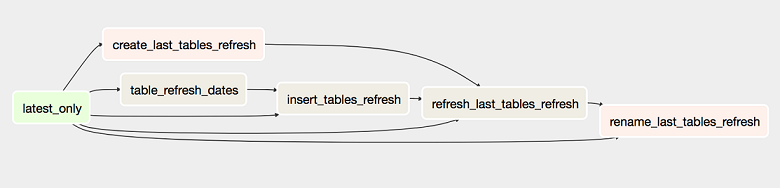

Pipelines are expressed as DAGs

DAGs (directed acyclic graphs) are the core concept of Airflow. You can think of a DAG as a number of steps that you want to execute in a specific order, with dependencies between them. Acyclic means that no loops are allowed within these workflows: a task can never end up depending on itself, directly or indirectly.
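Both the ordering and the no-loops rule can be illustrated with Python's standard graphlib module (Python 3.9+). This is not how Airflow resolves dependencies internally; it only shows that a valid DAG always yields an execution order, while a graph with a cycle is rejected. The task names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Each key is a task; its value is the set of upstream tasks it depends on.
pipeline = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}

# static_order() yields every task only after all of its dependencies.
order = list(TopologicalSorter(pipeline).static_order())
print(order)

# Making extract depend on load creates a loop, so the graph is no longer a DAG.
cyclic = dict(pipeline, extract={"load"})
try:
    list(TopologicalSorter(cyclic).static_order())
except CycleError as err:
    # The detected cycle is the second element of the exception's args.
    print("not a DAG:", err.args[1])
```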

DAGs are defined in Python files. Using code to define your workflows not only makes it possible to version your pipelines, but also to use parameters and functions to keep your workflow configurations efficient and clean.
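One way to keep configurations clean is to define shared settings once and let a parameterised function apply them, which mirrors the idea behind the default_args dictionary that can be passed to an Airflow DAG. The sketch below is plain Python, not the Airflow API; the owner and retries keys happen to match common Airflow task arguments, and the make_task helper is hypothetical.

```python
from typing import Any, Dict

# Settings shared by every task in the pipeline (values are illustrative).
DEFAULT_ARGS: Dict[str, Any] = {"owner": "data-team", "retries": 2}


def make_task(task_id: str, **overrides: Any) -> Dict[str, Any]:
    """Hypothetical helper: combine the shared defaults with task-specific overrides."""
    # Later keys win, so an override replaces the default with the same name.
    return {"task_id": task_id, **DEFAULT_ARGS, **overrides}


if __name__ == "__main__":
    print(make_task("extract"))
    print(make_task("load", retries=5))
```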

Airflow components

Database

Airflow is backed by a metadata database that stores metadata, including the history of all DAG runs. Airflow talks to this database through SQLAlchemy, but MySQL or PostgreSQL are the preferred options. We suggest using a managed cloud database service to reduce maintenance costs.
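Airflow locates its metadata database through a SQLAlchemy connection URL, set with the sql_alchemy_conn option (in the [database] section of airflow.cfg since Airflow 2.3, in [core] before that). The sketch below only assembles such a URL for PostgreSQL or MySQL; the host, credentials and helper name are hypothetical, and the driver prefixes assume the psycopg2 and mysqlclient drivers.

```python
from urllib.parse import quote_plus

# Dialect+driver prefixes for the two preferred backends (assumes psycopg2 / mysqlclient).
DRIVERS = {"postgres": "postgresql+psycopg2", "mysql": "mysql+mysqldb"}
DEFAULT_PORTS = {"postgres": 5432, "mysql": 3306}


def metadata_db_url(backend: str, user: str, password: str, host: str, db: str) -> str:
    """Build a URL of the form dialect+driver://user:password@host:port/db."""
    # Special characters in user names and passwords must be URL-encoded.
    return (
        f"{DRIVERS[backend]}://{quote_plus(user)}:{quote_plus(password)}"
        f"@{host}:{DEFAULT_PORTS[backend]}/{db}"
    )


if __name__ == "__main__":
    print(metadata_db_url("postgres", "airflow", "s3cr@t", "db.example.com", "airflow"))
```

With a managed cloud database, the host would simply be the endpoint provided by the cloud service.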

Scheduler

The scheduler is at the heart of Airflow: it continuously polls the database to track the status of each task and to ensure that the necessary work is carried out by the executor(s).
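A toy model of this loop can make it more concrete: on every pass it reads the task states, picks the tasks whose upstream tasks have all succeeded, and hands them to an executor. Here a dictionary stands in for the metadata database and a plain function for the executor; the "pending" state and all names are hypothetical, and Airflow's real scheduler does far more (DAG parsing, schedules, concurrency limits, retries).

```python
from typing import Callable, Dict, List, Set, Tuple


def run_task(task: str) -> str:
    """Stand-in executor: pretends to run the task and reports its final state."""
    print(f"running {task}")
    return "success"


def scheduler_loop(
    states: Dict[str, str],
    upstream: Dict[str, Set[str]],
    execute: Callable[[str], str],
) -> Tuple[List[str], Dict[str, str]]:
    """Poll task states until nothing is runnable; return execution order and final states."""
    db = dict(states)  # copy, standing in for the metadata database
    executed: List[str] = []
    while True:
        # A task is ready when it has not run yet and all of its upstream tasks succeeded.
        ready = [
            task
            for task, state in db.items()
            if state == "pending" and all(db[up] == "success" for up in upstream[task])
        ]
        if not ready:
            break
        for task in ready:
            db[task] = execute(task)  # the executor's result is written back to the "database"
            executed.append(task)
    return executed, db


if __name__ == "__main__":
    order, final = scheduler_loop(
        {"extract": "pending", "transform": "pending", "load": "pending"},
        {"extract": set(), "transform": {"extract"}, "load": {"transform"}},
        run_task,
    )
    print(order, final)
```

If a task fails, its downstream tasks never become ready, so the loop stops without running them.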

Executor

When the scheduler picks up a task for execution, it will distribute the work to an executor.

A number of executors are supported in Airflow, each with its own pros and cons:

- Sequential executor: a minimal executor that runs one task at a time; not suited for production purposes

- Local executor: a simple executor that runs tasks in parallel on a single machine; suited for small production workloads but not for large-scale ones

- Dask executor: tasks are executed on a Dask cluster; the setup is a bit more complex

- Celery executor: a Celery instance is used to distribute the tasks; a very scalable approach, although it requires setting up some additional resources (e.g. a message broker such as Redis or RabbitMQ)

- Kubernetes executor: runs each task in its own Kubernetes pod. This is interesting for heavy tasks but creates some overhead when there is a large number of small tasks.

The difference between executors comes down to the resources they have at hand and how they choose to utilize those resources to distribute work (or not distribute it at all).
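The contrast between, for example, the Sequential and Local executors can be sketched with Python's concurrent.futures: the first runs one task at a time, the second hands tasks to a pool of workers on the same machine. This is an analogy, not Airflow code: the Local executor uses separate worker processes, whereas the sketch uses a thread pool to stay simple, and the sleep-based task is hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List


def task(n: int) -> int:
    """Hypothetical task that waits briefly (e.g. on I/O) and returns a result."""
    time.sleep(0.2)
    return n * n


def run_sequential(items: List[int]) -> List[int]:
    # Like the Sequential executor: one task at a time, no parallelism.
    return [task(n) for n in items]


def run_parallel(items: List[int], workers: int = 4) -> List[int]:
    # Like the Local executor: several tasks run concurrently on one machine.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(task, items))


if __name__ == "__main__":
    for runner in (run_sequential, run_parallel):
        start = time.perf_counter()
        results = runner([1, 2, 3, 4])
        print(runner.__name__, results, f"{time.perf_counter() - start:.2f}s")
```

Distributed executors such as Celery or Kubernetes extend the same idea to workers on other machines or in separate pods, at the cost of extra infrastructure.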

In Airflow, an executor is defined as “the mechanism by which task instances get run”. Which executor is used is determined by the executor option in the [core] section of the configuration file.
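As a sketch of how that option is resolved: airflow.cfg is an INI-style file, and Airflow also lets an environment variable named AIRFLOW__{SECTION}__{KEY} (for example AIRFLOW__CORE__EXECUTOR) override the value from the file. The helper below imitates that lookup with the standard configparser module; it is an illustration, not Airflow's own configuration code, and the config fragment is hypothetical.

```python
import configparser
import os
from typing import Mapping, Optional

# Illustrative fragment of an airflow.cfg file.
AIRFLOW_CFG = """
[core]
executor = LocalExecutor
"""


def get_option(cfg_text: str, section: str, key: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Return a config value, letting AIRFLOW__{SECTION}__{KEY} override the file."""
    env = os.environ if env is None else env
    env_name = f"AIRFLOW__{section.upper()}__{key.upper()}"
    if env_name in env:
        return env[env_name]
    parser = configparser.ConfigParser()
    parser.read_string(cfg_text)
    return parser.get(section, key)


if __name__ == "__main__":
    print(get_option(AIRFLOW_CFG, "core", "executor"))
```

The environment-variable route is convenient in containerised deployments, where editing airflow.cfg inside an image is less practical.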

Webserver

The web server is one of Airflow's unique selling propositions, offering an intuitive and extensive overview of DAGs and tasks. It is not only a great place to get an overview of historic and running DAGs, but also to resolve failures and resubmit failed tasks.

Role-based access control (RBAC) makes it possible to differentiate between users based on the roles they are assigned. For example, you could allow any team member to view the existing DAGs, while only allowing a subset of those users to trigger DAG runs.
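The team-member example can be expressed as a mapping from roles to permitted actions. Airflow 2.x ships with predefined roles (such as Admin, Op, User and Viewer) and enforces permissions itself; the role names, actions, users and helper below are hypothetical and only illustrate the kind of check RBAC performs.

```python
from typing import Dict, Set

# Hypothetical role -> allowed actions mapping.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"view_dags"},
    "operator": {"view_dags", "trigger_dag_run"},
}

# Hypothetical users and the role each one is assigned.
USER_ROLES: Dict[str, str] = {"alice": "operator", "bob": "viewer"}


def is_allowed(user: str, action: str) -> bool:
    """Check whether the user's role grants the action; unknown users get no permissions."""
    role = USER_ROLES.get(user)
    return action in ROLE_PERMISSIONS.get(role, set())


if __name__ == "__main__":
    print(is_allowed("bob", "view_dags"), is_allowed("bob", "trigger_dag_run"))
```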

How can we help you

The element61 team has extensive knowledge of Airflow. We have implemented it at multiple customers and have the expertise to set it up end-to-end and to coach organizations on best practices for development and usage. Contact us for coaching, training, advice and implementation.
