As data professionals, our role is to extract insights, build AI models and present our findings to users through dashboards, APIs and reports. Although development is often the most time-consuming phase of a project, automating and monitoring jobs is essential to generate value over time. At element61, we’re fond of Azure Data Factory and Airflow for this purpose.

In this article, we outline the pros and cons of both tools, as well as the potential of combining them in a single setup.

Azure Data Factory

What is Azure Data Factory?

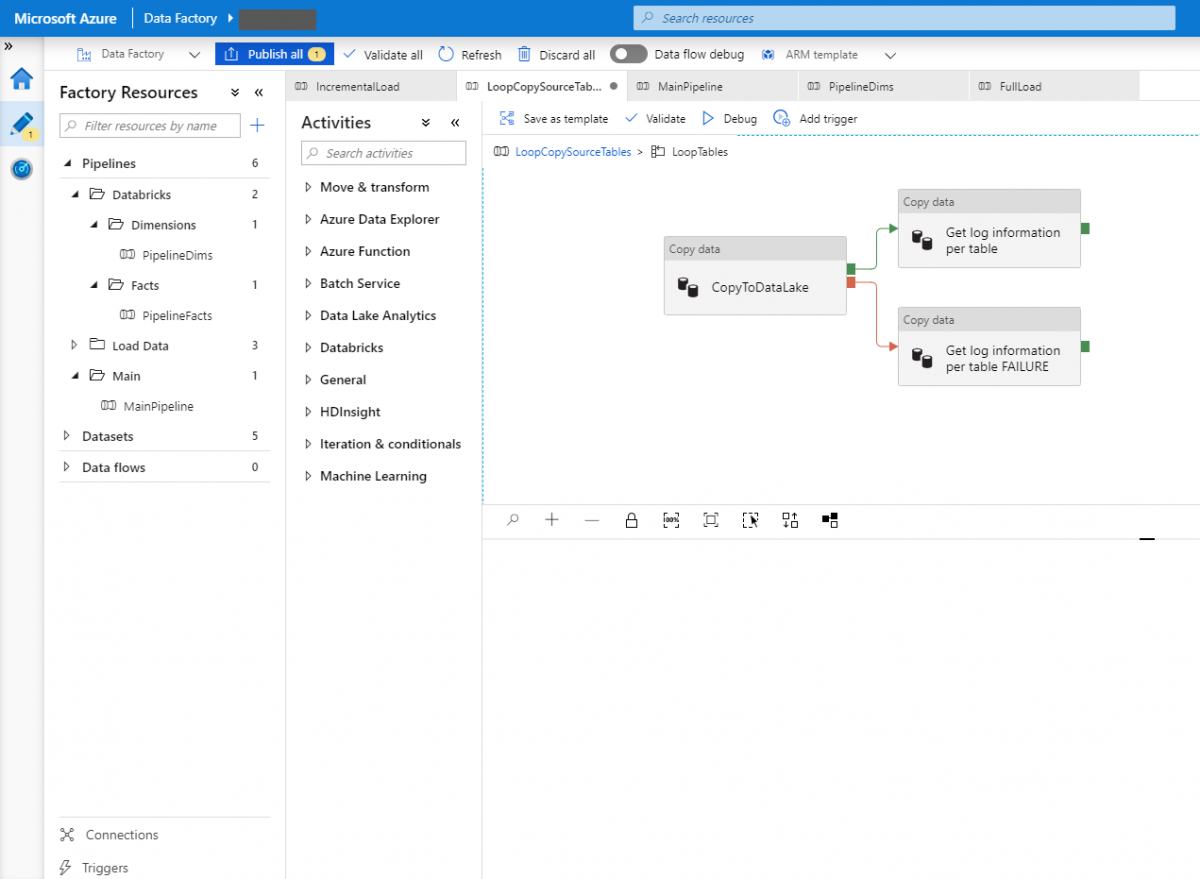

Azure Data Factory (hereafter “ADF”) is a service offered by Microsoft within Azure for building ETL and ELT pipelines. Although ADF allows custom code, most of the work is done through its graphical user interface.

Advantages of Azure Data Factory:

- Getting started with ADF is effortless: accessing the service takes only a few clicks, and the same applies to creating your first pipelines.

- ADF is a “pay-as-you-go” solution: there is no fixed cost, and pricing depends entirely on the number of pipeline runs and their resource utilization.

- One of the most appealing features of ADF is its seamless integration with on-premise data sources through the self-hosted Integration Runtime (IR). This software is installed on a machine that can access the on-premise data source and acts as a secure “tunnel” to Azure.

- The underlying resources and infrastructure are fully managed by Azure, so users need not worry about the resource usage of their ETL pipelines. Note, however, that extracting data from on-premise data sources does put load on those systems. Take this into account: nightly copies of your on-premise database to the cloud may be plain sailing for ADF, but you should also make sure your on-premise systems can handle the load.

Disadvantages of Azure Data Factory:

- Building custom activities and integrations is not part of the service, so users depend on Microsoft for new features.

- Integrations with non-Azure services are limited. Although connectors exist for some of the most common cloud services from other vendors (e.g. Amazon S3, Google BigQuery), standalone ADF would not be our tool of choice in a multicloud strategy.

- The ADF interface contains a tab for monitoring pipelines and activities, but it is rather table-based and does not offer as clear an overview as Airflow.

Apache Airflow

What is Airflow?

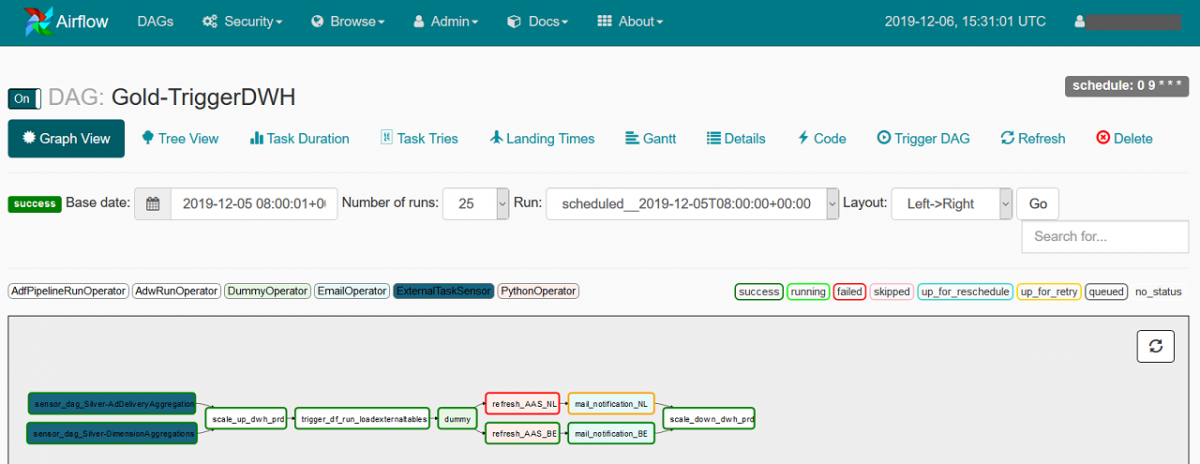

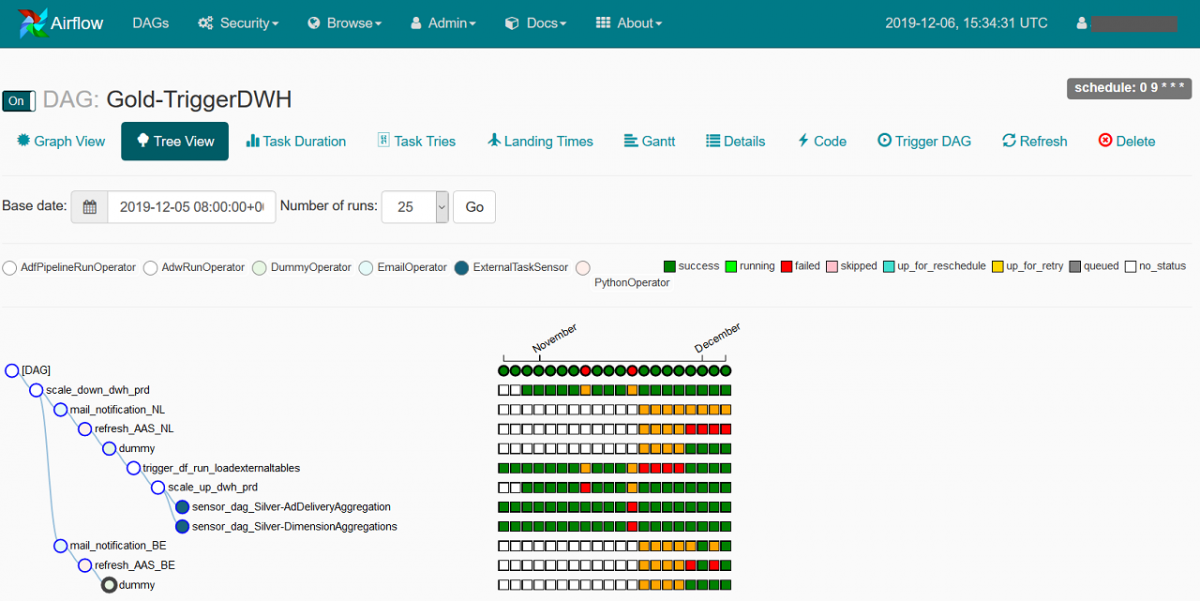

Airflow is a project that was initiated at Airbnb in 2014. It was open-sourced soon after its creation and is currently considered one of the top projects of the Apache Software Foundation. Its main features relate to scheduling, orchestrating and monitoring workflows. Workflows, called directed acyclic graphs (DAGs) in Airflow, are defined in Python code.
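Conceptually, a DAG is a set of tasks plus "runs after" dependencies with no cycles, which guarantees that at least one valid execution order exists. The minimal sketch below illustrates that idea in plain Python rather than with Airflow's own API: it uses the standard library's `graphlib` to derive an order that respects every dependency and to reject a graph that contains a cycle. The task names are hypothetical.

```python
from graphlib import TopologicalSorter, CycleError

# Hypothetical workflow: each task maps to the set of tasks it depends on.
workflow = {
    "extract": set(),
    "transform": {"extract"},
    "train_model": {"transform"},
    "refresh_dashboard": {"transform"},
    "publish_report": {"train_model", "refresh_dashboard"},
}


def execution_order(graph):
    """Return one valid run order, or raise ValueError if the graph has a cycle."""
    try:
        return list(TopologicalSorter(graph).static_order())
    except CycleError as exc:
        # graphlib stores the offending cycle as the second element of args.
        raise ValueError(f"not a DAG, cycle detected: {exc.args[1]}") from exc


order = execution_order(workflow)
print(order)
```

Several orders can be valid (here `train_model` and `refresh_dashboard` may run in either order, or in parallel). The acyclic property is what lets a scheduler always find one.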

Advantages of Airflow:

- Like many of today’s most exciting software solutions, Airflow is an open-source project. Driven by an active community, it evolves quickly, with new versions and integrations released every few months.

- Airflow supports custom plugins, which makes it simple to reuse your own integrations within your tasks. This has led to a plethora of so-called operators that integrate with a wide variety of systems and tools. As such, Airflow has great potential in a multicloud setup, where one might have to blend services from different cloud providers.

- The Airflow UI is appealing and provides a neat overview of DAGs and tasks. Moreover, triggering tasks manually or rerunning earlier tasks is intuitive and supported within the user interface.

- Because Airflow is entirely code-based (Python), all pipelines can be version-controlled, and control-flow constructs such as loops and conditional statements are easy to integrate.
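In Airflow, such loops and conditionals run when the DAG file is parsed, so they shape the resulting graph. The sketch below imitates that idea in plain Python rather than with Airflow's API. A configuration-driven function builds a task-to-dependencies mapping, with one load task per table (the loop) and an optional truncate step before each load (the conditional). The table names, task names and the `full_reload` flag are all hypothetical.

```python
# Hypothetical configuration: source tables to load.
TABLES = ["customers", "orders", "invoices"]


def build_pipeline(tables, full_reload):
    """Generate a task -> upstream-dependencies mapping from configuration."""
    tasks = {"start": set()}
    for table in tables:  # the loop creates one load task per table
        load = f"load_{table}"
        if full_reload:  # the conditional changes the shape of the pipeline
            truncate = f"truncate_{table}"
            tasks[truncate] = {"start"}
            tasks[load] = {truncate}
        else:
            tasks[load] = {"start"}
    # A final task waits for every load to finish.
    tasks["notify"] = {f"load_{t}" for t in tables}
    return tasks


incremental = build_pipeline(TABLES, full_reload=False)
full = build_pipeline(TABLES, full_reload=True)
print(len(incremental), len(full))  # 5 8
```

Adding a table then becomes a one-line configuration change instead of a manual edit in a graphical editor, and the change is visible in version control.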

Disadvantages of Airflow:

- As with many open-source projects, setting up the infrastructure and configuring the environment are the user’s responsibility. This requires a team with experience in running and upgrading an application that sits at the core of all data-related jobs in an organization. Moreover, the user must take care of security, which can be quite tedious in a hybrid-cloud setup.

- Airflow requires several components (such as the scheduler, the webserver and the metadata database) that must be “always-on” to pick up scheduled tasks. As a result, the initial setup carries a fixed cost that is independent of the number of jobs. Additional charges depend on the number of pipelines, how often they run, and the resources required to execute them.
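The difference in cost structure between the two tools can be written as simple arithmetic. ADF's cost is purely usage-based, while an Airflow setup adds a fixed monthly part for its always-on components. The sketch below assumes, purely for illustration, a single flat cost per run for each tool. It computes the monthly run volume above which the fixed cost would be offset, which only happens if Airflow's per-run cost is lower. All rates are placeholders that you would need to replace with your own pricing. They are not real Azure or infrastructure prices.

```python
def adf_cost(runs_per_month, cost_per_run):
    """Pay-as-you-go: no fixed part, cost scales with usage only."""
    return runs_per_month * cost_per_run


def airflow_cost(runs_per_month, cost_per_run, fixed_monthly):
    """Always-on components add a fixed monthly part on top of usage."""
    return fixed_monthly + runs_per_month * cost_per_run


def break_even_runs(adf_rate, airflow_rate, airflow_fixed):
    """Monthly run count at which both cost models are equal.

    Returns None when Airflow's per-run cost is not lower than ADF's,
    because the fixed cost is then never offset.
    """
    if airflow_rate >= adf_rate:
        return None
    return airflow_fixed / (adf_rate - airflow_rate)


# Placeholder figures only.
print(break_even_runs(adf_rate=1.0, airflow_rate=0.5, airflow_fixed=300.0))  # 600.0
```

In practice, per-run costs vary with duration and resources, so treat this as a way to reason about fixed versus variable costs rather than as a pricing model.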

Why choose?

In recent years, organizations have increasingly relied on cloud services for their data-related applications. Historically, however, most data has been stored in on-premise systems. In this situation, element61 suggests combining Azure Data Factory and Airflow in a unified setup. Data Factory integrates cloud services with on-premise systems, both to upload data to the cloud and to return results to those on-premise systems. Airflow, on the other hand, with its multicloud operators and custom extensibility, schedules all cloud-based jobs.

Because both tools include scheduling capabilities, integrating them is an important challenge. Consider, for example, a pipeline running on a cloud-based Spark cluster whose results you want to export to your on-premise data warehouse. In the proposed architecture, Airflow orchestrates both tasks: it starts and monitors the Spark job as well as the Data Factory pipeline that exports the data to your on-premise data warehouse.
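The orchestration pattern in this example can be sketched in plain Python: start a job, poll its status until it finishes, and start the next job only if the previous one succeeded. This is a minimal illustration, not Airflow code. The `start` callables are hypothetical stand-ins for whatever submits the Spark job or triggers the Data Factory pipeline. The status names follow Data Factory's pipeline-run statuses, and the Spark job is assumed to report the same vocabulary.

```python
import time

# Terminal pipeline-run statuses in Azure Data Factory ("Queued", "InProgress"
# and "Canceling" are intermediate). The Spark side is assumed to match.
TERMINAL = {"Succeeded", "Failed", "Cancelled"}


def wait_for(get_status, poll_seconds=30.0, timeout_seconds=3600.0, sleep=time.sleep):
    """Poll get_status() until it returns a terminal status or the timeout expires."""
    waited = 0.0
    while True:
        status = get_status()
        if status in TERMINAL:
            return status
        if waited >= timeout_seconds:
            raise TimeoutError(f"still {status!r} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


def run_in_sequence(steps, **wait_kwargs):
    """Run (name, start) steps one by one; stop at the first step that fails."""
    results = []
    for name, start in steps:
        get_status = start()  # start() triggers the job, returns a status callable
        status = wait_for(get_status, **wait_kwargs)
        results.append((name, status))
        if status != "Succeeded":
            raise RuntimeError(f"step {name!r} ended with status {status!r}")
    return results


# Usage with stand-in jobs that report a fixed sequence of statuses.
def fake_start(statuses):
    remaining = iter(statuses)
    return lambda: (lambda: next(remaining))


steps = [
    ("spark_job", fake_start(["Queued", "InProgress", "Succeeded"])),
    ("adf_export", fake_start(["InProgress", "Succeeded"])),
]
print(run_in_sequence(steps, poll_seconds=1, sleep=lambda s: None))
# [('spark_job', 'Succeeded'), ('adf_export', 'Succeeded')]
```

In Airflow, the same behaviour comes from two tasks with a dependency between them, each of which triggers its job and waits for it. The scheduler then takes care of ordering, retries and monitoring.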

As the Airflow project doesn’t currently offer an operator for Data Factory, we developed a custom plugin to enable this integration. This plugin allows for a setup that leverages the best of both tools: on-premise integration and security from Data Factory on the one hand, and a rich user interface, clear monitoring and the power of coding workflows from Airflow on the other.
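One way such a plugin can talk to Data Factory is the Azure Resource Manager REST API. This API exposes a `createRun` operation on a pipeline, whose response contains a `runId`, and a `pipelineruns/{runId}` resource whose `status` field can be polled. We do not show our plugin itself here. The sketch below only builds those two request URLs (API version `2018-06-01`) with the standard library. Authentication with an Azure AD bearer token and the actual HTTP calls are deliberately left out, and all resource names in the usage line are placeholders.

```python
from urllib.parse import quote, urlencode

ARM = "https://management.azure.com"
API_VERSION = "2018-06-01"


def _factory_path(subscription_id, resource_group, factory):
    """Resource path of a Data Factory instance, with each segment URL-escaped."""
    return (
        f"/subscriptions/{quote(subscription_id, safe='')}"
        f"/resourceGroups/{quote(resource_group, safe='')}"
        f"/providers/Microsoft.DataFactory/factories/{quote(factory, safe='')}"
    )


def create_run_url(subscription_id, resource_group, factory, pipeline):
    """URL to POST to in order to start a pipeline run (response holds a runId)."""
    path = _factory_path(subscription_id, resource_group, factory)
    query = urlencode({"api-version": API_VERSION})
    return f"{ARM}{path}/pipelines/{quote(pipeline, safe='')}/createRun?{query}"


def get_run_url(subscription_id, resource_group, factory, run_id):
    """URL to GET in order to read a pipeline run, including its status."""
    path = _factory_path(subscription_id, resource_group, factory)
    query = urlencode({"api-version": API_VERSION})
    return f"{ARM}{path}/pipelineruns/{quote(run_id, safe='')}?{query}"


# Placeholder resource names.
print(create_run_url("0000-sub", "rg-data", "adf-prod", "export_to_dwh"))
```

An operator built on top of this would POST to the first URL, keep the returned `runId`, and poll the second URL until the run reaches a terminal status. This mirrors the monitoring that Airflow then surfaces in its UI.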

Get in touch if you would like to know more about Data Factory, Airflow or a combined setup!