What is the Data & AI Summit?

The Data & AI Summit is the former 'Spark Summit' and has been around for many years. It is one of the biggest Data & AI conferences in the world and is organized by Databricks together with a series of other partners in the Data & Analytics vendor landscape. From the 27th until the 30th of June 2022, the Data & AI Summit took place in person again after several virtual editions during COVID. This year it was held in San Francisco, with 5,000 in-person attendees joined by more than 70,000 virtual attendees.

As element61, we were invited to give three sessions at the event, two in-person and another one virtually, which you can watch (or rewatch 😉) here (free registration required though):

- Implementing an End-to-End Demand Forecasting Solution Through Databricks and MLflow

- Optimizing Incremental Ingestion in the Context of a Lakehouse

Aside from our presentations, there were of course tons of exciting announcements and other sessions at the event. Since the number of announcements was quite large, I will just list our three main takeaways:

I guess we have to talk about the Lakehouse

The main theme of the event was the ‘Lakehouse’, whether through puns on the free merch t-shirts or the word being dropped or depicted very frequently. Databricks really wants to revolutionize the business by doing data warehousing not in a warehouse but in a data lake, and it makes a lot of sense. There are no duplicate data silos that cause a lot of data to be sent back and forth, all teams (BI, Machine Learning, Analysts, Engineers) can work on the same data, and all governance can be managed in the same place as well. There are two main reasons why this has not been done before:

- Data Warehousing needs some kind of ACID transactions, to be able to securely update values while keeping the data usable for everyone else.

- While using Spark clusters on a data lake gives amazing results, using the data lake as a data warehouse, and bombarding it with SQL-like queries, was lacking in performance.

The first problem has been tackled for a while now by the introduction of “better” storage formats, mainly Delta Lake. The second problem has been alleviated by the ongoing performance improvements of the last couple of years, which have culminated in the GA release of Photon by Databricks just this week.

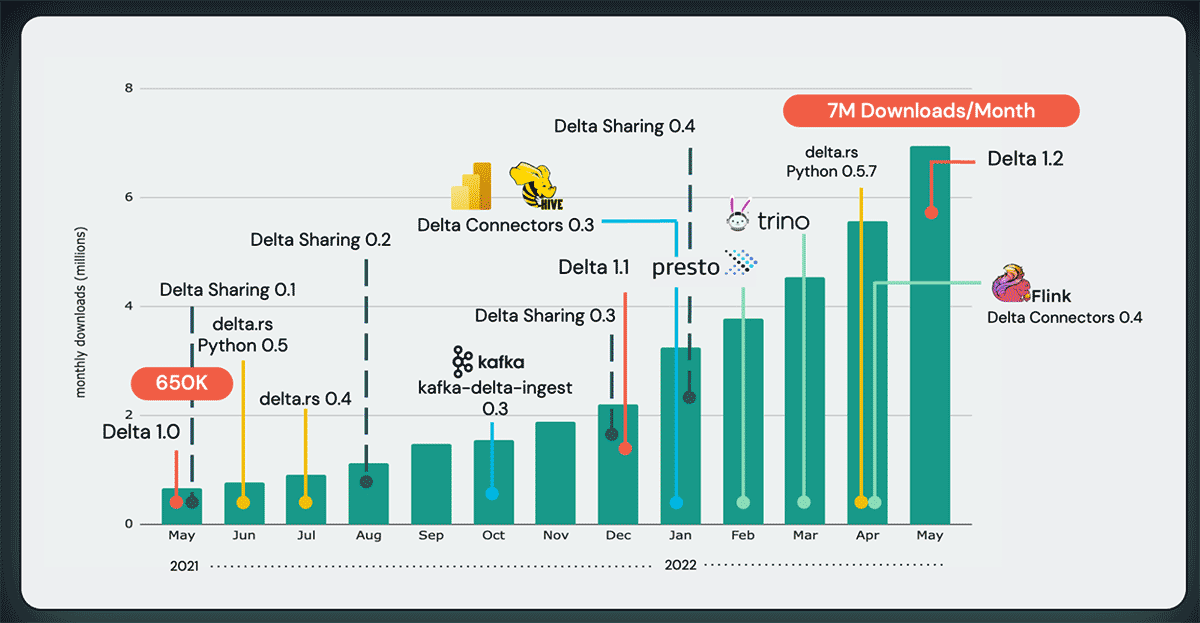

Both of these tracks had lots of exciting announcements this week. Delta Lake went completely open source (compared to semi-open-source before) to get as much adoption as possible as the new standard data format, and Photon became Generally Available with a lot of improvements to its computational framework.

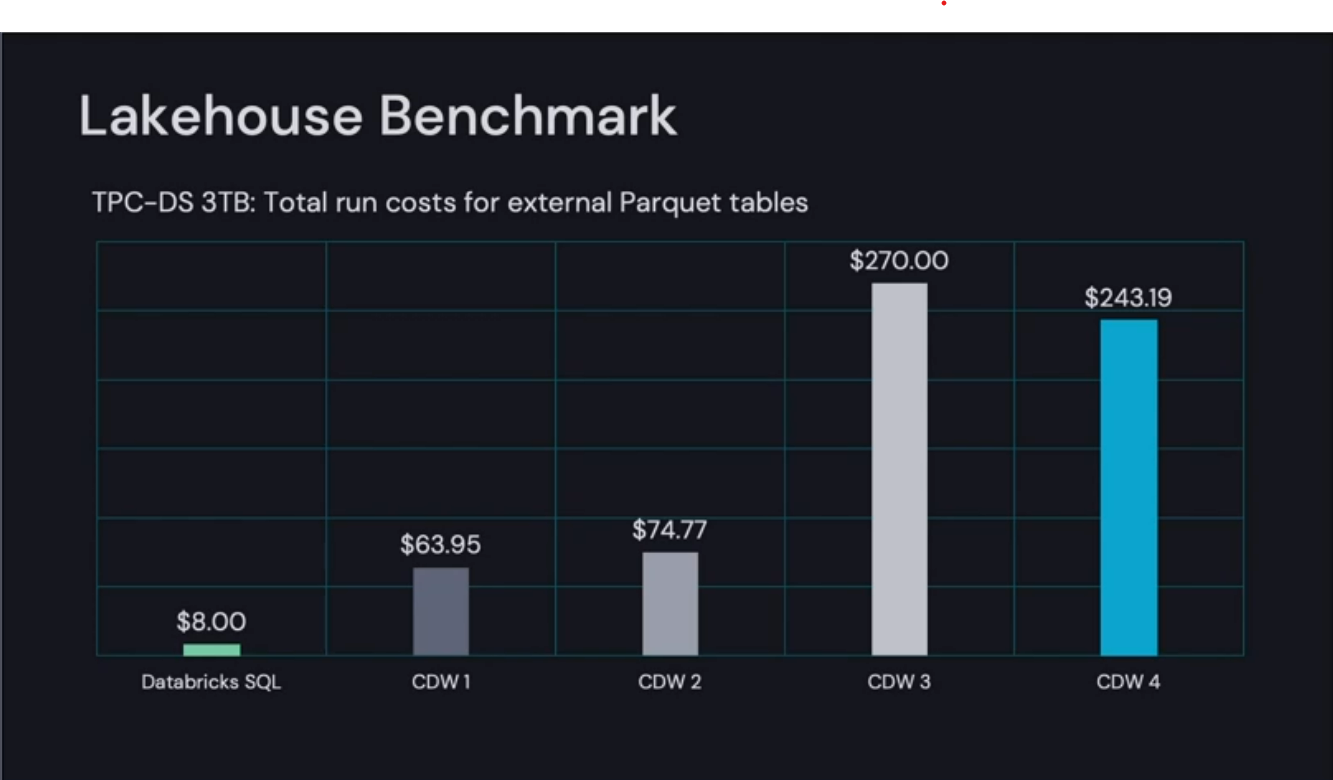

The biggest “reveal” of the event was the performance comparison between the different cloud data lakehouse setups that are available (most importantly the comparison with Snowflake). I’ll share the specific slides here: the first comparison uses the different data warehouses as a data lakehouse (basically running AI flows on them), and the second uses them as a classical data warehouse.

Lakehouse benchmark: Data & AI Summit 2022 Day 1 Morning Keynote

Data Warehouse Price/Performance comparison: Data & AI Summit 2022 Day 1 Morning Keynote

Databricks and Delta seem to be killing it on all fronts. This benchmark was set up by Databricks itself, so they could have picked a situation where they are clearly better than the competition. Still, the difference is so large that it might not even matter much which situation they picked. Another, more independent report is available online, comparing Delta (Databricks) with Iceberg (Snowflake), and there again Databricks clearly comes out on top. The revolution seems near!

Lots of exciting new releases coming up, but too soon to switch already

Where Databricks used to be your main tool for Spark-based analysis of the data in your data lake, it has now evolved into a lot more than that, and it is trying to take a lot more on its plate as well. As discussed above, we are fairly confident that using Databricks as a Lakehouse (or a Warehousing tool) is production-ready. There are other features that look really nice and promising, but we would not want to suddenly overhaul everything we have been doing so far just to make use of them.

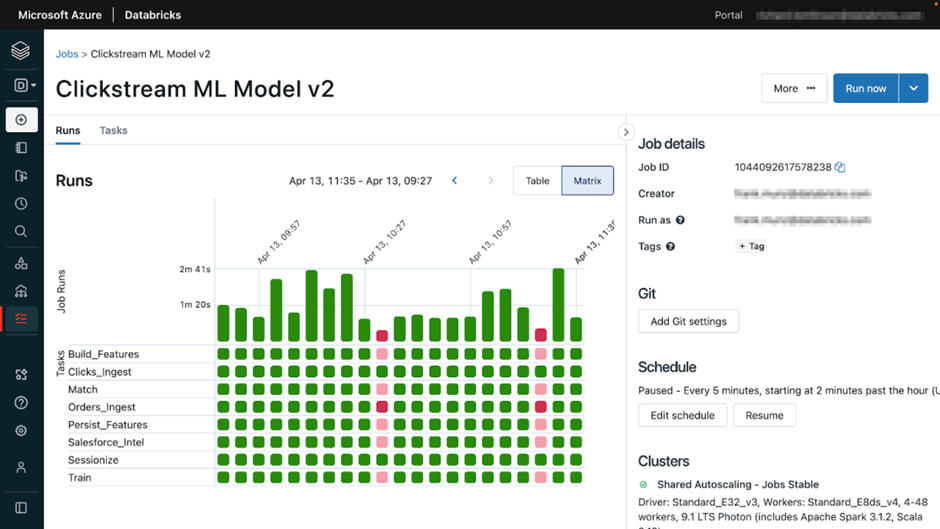

Databricks Workflows

When Databricks introduced Workflows, basically their orchestration tool, I was initially a bit skeptical about the product because I did not understand how it would fit within the whole ecosystem of a modern data platform. When it comes to orchestrating a modern data platform, I would rather have all orchestration done from one tool instead of spreading it over multiple tools. If you do not orchestrate everything in one and the same tool, you cannot build dependencies between the different tools. You then end up with an inefficient orchestration that relies on lining up separate schedules with each other, instead of having each flow wait for the flow it depends on to finish.
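To make the difference between lining up schedules and real dependencies concrete, here is a minimal sketch in plain Python. It is not the Databricks Workflows, Airflow or Azure Data Factory API: the task names and the `run_all` helper are hypothetical and only illustrate how a single orchestrator can run each task after its upstream tasks and skip everything downstream of a failure.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical tasks: each key maps to the set of tasks that must finish first.
dependencies = {
    "ingest_raw": set(),
    "transform_silver": {"ingest_raw"},
    "train_model": {"transform_silver"},
    "refresh_dashboard": {"transform_silver"},
}


def execution_order(deps):
    """Return one valid run order in which every task follows its upstream tasks."""
    return list(TopologicalSorter(deps).static_order())


def run_all(deps, run_task):
    """Run tasks in dependency order and skip anything downstream of a failure."""
    failed = set()
    results = {}
    for task in execution_order(deps):
        # If any upstream task failed or was skipped, do not run this one.
        if deps[task] & failed:
            failed.add(task)
            results[task] = "skipped"
            continue
        try:
            run_task(task)
            results[task] = "ok"
        except Exception:
            failed.add(task)
            results[task] = "failed"
    return results
```

With time-based schedules spread over several tools, a late or failed `ingest_raw` would not stop `transform_silver` from starting at its own scheduled time. With explicit dependencies in one place, the downstream tasks simply wait or are skipped.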

Having said that, I do think there is room for another orchestration tool within the data space. We really like using Airflow (augmented with Azure Data Factory as you can read about here: Azure Data Factory & Airflow: mutually exclusive or complementary?) for companies who have the need for a more complicated orchestration flow or just Azure Data Factory if the orchestration flow is relatively simple. However, to run Airflow, you need quite a lot of different components (Container, Container Registry, FileShare, PostgresDB, possibly Kubernetes) and although the product runs quite smoothly, it is one of the more complex ones out there. There is a managed Airflow being offered out there as well, but we think that one is just too expensive.

Enter Databricks Workflows, which works more like a UI tool, comparable to Azure Data Factory. It will be fully managed by Databricks itself and, apparently, the scheduling will be done in the Control Plane of Databricks, which means you will not need to keep a cluster running just to have your flows scheduled.

Demo of Databricks Workflow: databricks.com

This all sounds great, and it is, but the functionality that is currently available is still too limited. However, Databricks seems to be putting quite a lot of focus on it, with the following features expected shortly:

- Integration with Slack

- Conditional Flows

- Passing through result values

We especially look forward to trying to integrate our main ETL tool (ADF) with Databricks Workflows and seeing how that works before we explore the product in more detail. One other concern I have is how CI/CD will work to get these flows into different workspaces (development vs production). I am very keen to keep tracking this product.

Databricks Unity Catalog

Another exciting product which is fairly new is the Unity Catalog. Basically, it is Databricks’ answer to the increased need for governance in organizations. It allows you to take care of both the permissions of users in a Databricks workspace, as well as the permissions to data of those specific users, and it also provides some lineage graphs to top it all off.

The product will probably go GA at the end of August, and it looks great. The lineage graphs are nice, even more so if you are all in on the Data Lakehouse, because all your data will then reside in one platform.

Demo of Databricks Unity Catalog: databricks.com

However, this governance only covers the data once it is in the data lake, not how it got there. If you want to cover that part as well, you might still need another tool.

Also, there are still some features missing that will stop it from taking off completely at this moment.

- The main one is Attribute-Based Access Control, which will allow you to set row-level and column-level filtering on a per-user or per-group basis. Currently, you can achieve something similar using a new feature called Dynamic Views, but that really is a workaround and not the ideal way of working.

- Another one is the ability to link both Databricks Workspace and Unity Catalog Account users through SSO.

Both of these features will probably be available in Public Preview within the next six months.
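For readers who have not worked with row-level and column-level filtering before, the idea can be sketched in a few lines of plain Python. This is only a conceptual illustration and not how Unity Catalog or Dynamic Views are implemented or configured (in Databricks you would express this in SQL): the `POLICIES` dictionary, the group names and the `filtered_view` helper are all hypothetical.

```python
# Hypothetical policies: per group, the visible columns and a row-level predicate.
POLICIES = {
    "finance": {
        "columns": {"region", "customer", "revenue"},
        "row_filter": lambda row: True,  # finance sees every row
    },
    "sales_emea": {
        "columns": {"region", "customer"},  # no revenue column for sales
        "row_filter": lambda row: row["region"] == "EMEA",
    },
}


def filtered_view(rows, user_groups, policies=POLICIES):
    """Return only the rows and columns that the user's groups are allowed to see."""
    visible = []
    for row in rows:
        columns = set()
        for group in user_groups:
            policy = policies.get(group)
            # A group contributes its columns only for rows it is allowed to see.
            if policy and policy["row_filter"](row):
                columns |= policy["columns"]
        if columns:
            visible.append({k: v for k, v in row.items() if k in columns})
    return visible
```

A user in the hypothetical `sales_emea` group would only get EMEA rows without the `revenue` column, while a user without any known group gets nothing back. The appeal of having this in the catalog itself is that such rules live next to the data instead of being rebuilt in a separate view for every table.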

Databricks SQL Serverless

This is a big one, and it will be going GA very soon, but only on AWS. For Azure we will probably have to wait around another month before it becomes available, but it would obviously be a huge one! Instead of the usual 5-minute start-up time, they are looking to trim that to only a couple of seconds, which would be a big step toward actually using Databricks as a Data Warehouse itself.

Not needing a cluster to be on at all times to have quick querying will of course be a huge improvement in costs for most companies who would want to use it as such. We are extremely excited to give this a go soon.

Databricks has huge momentum and growth

On the surface it could look like Databricks is trying to tackle a lot of issues at once. Databricks Workflows and Databricks Unity Catalog have been mentioned above already, but they are also adding Databricks Cleanrooms (a way to share data with others in a very controlled way) and Databricks Marketplace (a marketplace where code, data and solution accelerators can be shared).

However, they are able to do that because they are growing immensely in manpower, which comes on top of the fact that the adoption of Databricks, Spark, Delta and Structured Streaming is growing exponentially! They are really investing in making that adoption even bigger, which is most clearly visible in their decision to completely open-source Delta Lake (previously, Databricks kept a couple of extra Delta Lake features proprietary).

Growth of Spark: Data & AI Summit 2022 Day 1 Morning Keynote

Growth of Delta: Data & AI Summit 2022 Day 1 Morning Keynote

Growth of Structured Streaming: Data & AI Summit 2022 Day 1 Morning Keynote

Databricks really is betting on itself to keep on innovating and integrating faster than everyone else, and by the looks of it, they are succeeding. On top of the new additions mentioned above, they are also putting a lot of focus into improving their current offering, which is already top of the class.

- They are setting up Project Lightspeed, which will focus on getting streaming performance higher and higher

- They have introduced Enzyme, which will improve the performance of Data Engineering flows

- They have a lot of improvements coming as well for Photon (the Data Warehousing cluster engine) and Delta.

Exciting times ahead!