element61 is a certified Databricks partner and has extensive experience in Delta Lake and Databricks Delta.

What is Databricks Delta or Delta Lake?

Data lakes typically have multiple data pipelines reading and writing data concurrently. Keeping data integrity in a data lake is hard because of how Spark, and big data processing in general, works: writes are distributed over many tasks and jobs can run for a long time, so without extra safeguards a reader can see partial or inconsistent results. Delta Lake is a new Spark functionality that brings reliability to big data lakes. Databricks offers Delta as an embedded service called Databricks Delta.

Delta Lake is an open-source Spark storage layer which runs on top of an existing data lake (Azure Data Lake Store, Amazon S3 etc.).

- It brings data integrity through ACID transactions while still allowing reads and writes from/to the same directory/table.

- It provides serializability, the strongest isolation level, and thus guarantees that all consumers of a Data Lake have access to the latest committed and most correct information; a unique asset for your data scientists and data analysts.
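How can several jobs share one table safely? In Delta Lake every commit is recorded as a new numbered entry in the table's transaction log, and concurrent writers use optimistic concurrency: whoever creates the next entry first wins, and the others check for conflicts and retry. The sketch below is a toy, in-memory Python model of that idea, not Delta's implementation: the class and function names are hypothetical, the lock stands in for an atomic "create if absent" on storage, and real Delta only retries after checking that the concurrent commits do not logically conflict. It shows why commits end up in one serial order and why a reader replaying the log never sees half a commit.

```python
import threading
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToyDeltaLog:
    """Toy in-memory transaction log: commits[i] holds the actions of version i."""

    commits: list = field(default_factory=list)
    _lock: threading.Lock = field(default_factory=threading.Lock, repr=False)

    def latest_version(self) -> int:
        """Highest committed version, or -1 for an empty log."""
        return len(self.commits) - 1

    def try_commit(self, read_version: int, actions: list) -> bool:
        """Publish version read_version + 1 atomically, unless another writer got there first."""
        with self._lock:  # stands in for an atomic 'create file if absent' on storage
            if self.latest_version() != read_version:
                return False  # conflict: someone committed since we read the log
            self.commits.append(list(actions))
            return True

    def snapshot(self, version: Optional[int] = None) -> list:
        """Replay commits 0..version to get the data files visible in that version."""
        if version is None:
            version = self.latest_version()
        return [action for actions in self.commits[: version + 1] for action in actions]


def append_with_retry(log: ToyDeltaLog, new_files: list, max_attempts: int = 10) -> int:
    """Optimistic writer: read the current version, try to commit on top of it, retry on conflict."""
    for _ in range(max_attempts):
        read_version = log.latest_version()
        # A real job would write its Parquet data files here, before committing.
        if log.try_commit(read_version, new_files):
            return read_version + 1
    raise RuntimeError("gave up after too many concurrent commits")
```

Run eight writers in parallel threads and the log still ends up with eight distinct versions, each containing exactly one writer's files: the "only if the log is still at the version I read" check is what forces the writers into a single serial order.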

Besides data integrity, Delta Lake provides a series of other features, including:

- Unified batch and streaming code: we can write Spark transformations once and apply them uniformly to batch and streaming sources and sinks

- Updates and deletes: your Data Lake can update or delete data without having to reprocess your entire data lake repository. Now your Data Lake can support Slowly Changing Dimension (SCD) operations and upserts from streaming queries

- Time travel: you can access and revert to earlier versions of the data and answer questions such as "What was the value of that row in the Data Lake 2 months ago?"

- Schema evolution: as your data evolves, Delta allows a Spark table's schema to change along with it

- and many more
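To make updates, deletes and time travel more concrete, here is a toy Python model of a versioned table: every write produces a new version, `merge` behaves like an upsert (update rows whose key matches, insert the rest, as in an SCD type 1 load), and `as_of` reads an earlier version. The class and its methods are hypothetical and keep a full copy of every version for simplicity; Delta itself only records the changed Parquet files in its transaction log and exposes these operations through commands such as `MERGE INTO`, `VERSION AS OF` reads and `RESTORE`.

```python
from copy import deepcopy


class ToyVersionedTable:
    """Hypothetical table that keeps every version as a dict of rows keyed by `key`."""

    def __init__(self, key: str):
        self.key = key
        self.versions = [{}]  # version 0: empty table

    def current(self) -> dict:
        return self.versions[-1]

    def _commit(self, state: dict) -> int:
        """Append a new version and return its number; old versions are never modified."""
        self.versions.append(state)
        return len(self.versions) - 1

    def merge(self, rows: list) -> int:
        """Upsert: update rows whose key exists (when matched), insert the rest (when not matched)."""
        state = deepcopy(self.current())
        for row in rows:
            k = row[self.key]
            if k in state:
                state[k].update(row)
            else:
                state[k] = dict(row)
        return self._commit(state)

    def delete(self, predicate) -> int:
        """Remove every row for which predicate(row) is true."""
        state = {k: dict(r) for k, r in self.current().items() if not predicate(r)}
        return self._commit(state)

    def as_of(self, version: int) -> dict:
        """Time travel: a copy of the table exactly as it was at `version`."""
        return deepcopy(self.versions[version])

    def restore(self, version: int) -> int:
        """Revert by committing an old version again as the newest one; history is kept."""
        return self._commit(deepcopy(self.versions[version]))
```

For example, after `merge([{"id": 1, "city": "Ghent"}])` and a later `merge([{"id": 1, "city": "Antwerp"}])`, `as_of(1)` still answers "what was the value of that row back then?" with `"Ghent"`, while the current version shows `"Antwerp"`.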

When we use Delta, we still use Spark and store all data in the Apache Parquet format, enabling Delta Lake to leverage the efficient compression native to Parquet.

How does Delta (Lake) work?

The basics of Delta

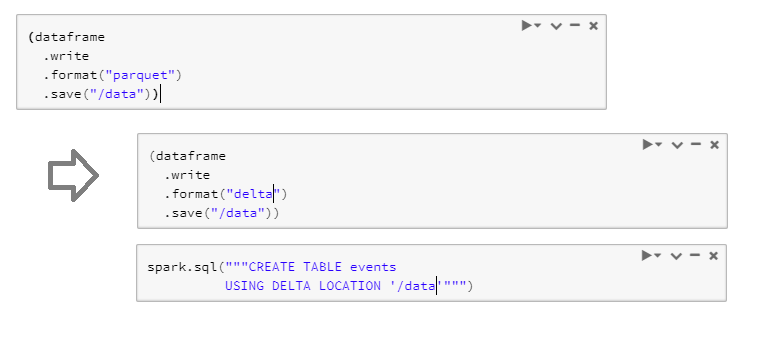

Delta Lake works as part of Spark: it changes how we read and process data with and through Spark. Rather than writing our data in the Parquet format, we use the "delta" format and then create Spark SQL tables and analyses on top of it.

This small change in code makes our DataFrames a lot more resilient: our code can now write and read data at the same location while data integrity is kept. We can analyse these datasets (e.g. using Spark SQL) while, in parallel, another operation writes or updates the data in the Delta location.

Imagine that in your Data Lake a certain job is continuously writing data to a certain location. Using Delta, we could read this location as a stream and have a DataFrame that is always up to date with the latest data.
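This scenario can be sketched with a toy transaction log in plain Python. The writer only ever publishes complete versions; a batch query pins the version it started on, so a concurrent append does not change its result; and a streaming reader remembers the next version it still has to process (in Spark this bookkeeping lives in the stream's checkpoint) and picks up only newly committed files on each poll. All class and method names here are hypothetical; in PySpark the streaming read itself would be `spark.readStream.format("delta").load(path)`.

```python
class ToyTableLog:
    """Hypothetical append-only log: commits[i] lists the data files committed in version i."""

    def __init__(self):
        self.commits = []

    def commit(self, files) -> int:
        """Publish a complete set of files as the next version."""
        self.commits.append(list(files))
        return len(self.commits) - 1

    def latest_version(self) -> int:
        return len(self.commits) - 1

    def files_as_of(self, version: int) -> list:
        """Snapshot read: all files committed up to and including `version`."""
        return [f for batch in self.commits[: version + 1] for f in batch]


class ToyStreamReader:
    """Processes each committed version exactly once, in order, like a micro-batch stream."""

    def __init__(self, log: ToyTableLog):
        self.log = log
        self.next_version = 0  # checkpoint: first version not yet processed

    def poll(self) -> list:
        """Return the files committed since the last poll and advance the checkpoint."""
        new_files = []
        while self.next_version < len(self.log.commits):
            new_files.extend(self.log.commits[self.next_version])
            self.next_version += 1
        return new_files
```

Because a reader works against a version number rather than against "whatever files are in the folder right now", readers and the continuously writing job never step on each other.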

An architecture using Delta Lake

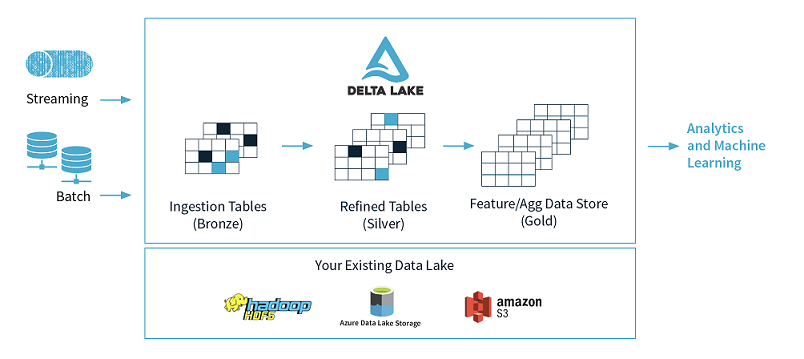

Thinking further, we could leverage Delta Lake for our entire series of Spark read and write jobs. Every job would use Delta functionality to read and write data in various areas of our Azure Data Lake:

- a first Spark job would read the streaming or batch ingested data and write it to our ingestion tables (Bronze)

- a second job would then use Delta to read the ingestion tables, apply cleaning (e.g. anonymisation, data masking) and write the data, using Delta, to the refined tables (Silver)

- a third job would then use Delta to read from Silver, aggregate the data and write it to a Feature or Aggregation layer (Gold), ready for reporting or Machine Learning
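The three jobs can be sketched as three plain Python functions, each standing in for a Spark job that would read one Delta table and write the next. The field names (`email`, `store`, `amount`) and the cleaning rules are hypothetical, and hashing the e-mail address is pseudonymisation rather than full anonymisation.

```python
import hashlib
from collections import defaultdict


def to_bronze(raw_events: list) -> list:
    """Bronze: store the ingested records unchanged (append-only)."""
    return [dict(event) for event in raw_events]


def to_silver(bronze_rows: list) -> list:
    """Silver: drop incomplete rows and replace the e-mail address by a hashed customer key."""
    silver = []
    for row in bronze_rows:
        if row.get("amount") is None or row.get("email") is None:
            continue  # cleaning: skip incomplete records
        customer = hashlib.sha256(row["email"].lower().encode()).hexdigest()[:12]  # truncated for readability
        silver.append({"customer": customer, "store": row["store"], "amount": float(row["amount"])})
    return silver


def to_gold(silver_rows: list) -> dict:
    """Gold: aggregate revenue per store, ready for reporting or as an ML feature."""
    totals = defaultdict(float)
    for row in silver_rows:
        totals[row["store"]] += row["amount"]
    return dict(totals)
```

Keeping each layer as a separate step means a cleaning rule can change in Silver without re-ingesting Bronze, and Gold can be rebuilt from Silver at any time.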

With this simple Delta Lake architecture, element61 has already tackled complex Data Lake set-ups, and it works great. When needed, we have found that data can move from ingestion to the Gold layer, using readStream and writeStream, with a lead time under 10 seconds, giving us a real-time Data Lake.

We recommend this set-up both for your Machine Learning data preparation, model training and scoring, and for your modern BI architecture!

When to use Delta Lake?

Delta Lake is recommended for all companies already running or implementing a Data Lake. As Spark is nowadays the most common technology for Data Lakes, embedding Delta Lake (which runs on Spark) from the start helps your Data Lake directly support data integrity and real-time analytics (cf. the example above).

element61 has implemented Delta Lake in various customer set-ups. We strongly advise customers to use Delta Lake at minimum:

- whenever there is streaming data:

  - Delta Lake's unified batch & real-time pipeline then allows us to stream events through the various layers of the Data Lake in a matter of seconds

  - element61 has set up a real-time streaming platform on top of an Azure Data Lake where data flows from Azure Event Hubs through the Data Lake up to Power BI with a lead time under 10 seconds.

  - Example use cases: real-time reporting, real-time AI scoring, etc.

- whenever there are regular updates in your data:

  - Delta Lake's ability to perform and keep track of updates enables us to keep the data solidly consistent while still supporting the

  - element61 has set up various modern data platforms where Databricks Delta is the engine for ELT transformations and updates.

element61 advises all organizations investing in a Data Lake to use Delta Lake as a foundation.

5 more reasons to use Delta?

With the rise of big data, data lakes became a popular choice for storing data in a large number of organizations. Despite their advantages, working with data lakes raised a variety of challenges. Delta Lake resolves a significant set of these Data Lake challenges. To read more about 5 common Data Lake challenges Delta can solve, and how, read our article 'How Databricks Delta overcomes your Data Lake challenges'.

Delta vs. Databricks Delta

Databricks Delta integrates the open-source Delta Lake, which can be configured based on the user's needs. Delta on Databricks offers extra optimization features that are not available in the open-source framework.

Optimizations for Delta offered in Databricks:

- Layout optimization algorithms that automatically optimize how we structure and save our data in order to improve query speed. At the time of writing, Databricks Delta has two such algorithms set up:

  - Bin-packing, which coalesces multiple small files into larger ones

  - Z-ordering, which orders the data within the various partitions based on specific columns, so that related values end up in the same files

  (Update November 2022: these are now also supported in open-source Delta Lake 2.0.)

- Optimized performance through data caching, which creates local copies of remote (cloud-stored) files in the nodes' local storage.

- The ability to choose the isolation level: Serializable (the strongest isolation level) or WriteSerializable (a weaker isolation level).

- Optimizations for specific joins, including range joins and skew joins.

- Optimized performance of higher-order functions and transformations on complex data types.
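Both layout algorithms can be illustrated in a few lines of Python. `plan_bin_packing` groups small files into bins that stay under a target size using a greedy first-fit-decreasing heuristic, and `z_value` interleaves the bits of two column values into a Morton (Z-order) key, so that sorting by it keeps rows that are close in both columns close together in the output files. This is a simplified sketch, not Databricks' implementation (which is triggered with `OPTIMIZE`, optionally with `ZORDER BY`); the function names and the `target_mb` parameter are hypothetical.

```python
def plan_bin_packing(file_sizes_mb: list, target_mb: int = 1024) -> list:
    """Group small files into bins whose total size stays within target_mb.

    Greedy first-fit decreasing: largest files first, each into the first bin it fits.
    Files already at or above the target are left alone.
    """
    bins = []  # each bin: [total_size, [file sizes]]
    for size in sorted((s for s in file_sizes_mb if s < target_mb), reverse=True):
        for b in bins:
            if b[0] + size <= target_mb:
                b[0] += size
                b[1].append(size)
                break
        else:
            bins.append([size, [size]])  # no existing bin had room: open a new one
    return [sizes for _, sizes in bins]


def z_value(x: int, y: int, bits: int = 16) -> int:
    """Interleave the bits of two non-negative ints below 2**bits (a Morton / Z-order key)."""
    z = 0
    for i in range(bits):
        z |= ((x >> i) & 1) << (2 * i)      # bits of x go to even positions
        z |= ((y >> i) & 1) << (2 * i + 1)  # bits of y go to odd positions
    return z


def z_order(rows: list, col_x: str, col_y: str) -> list:
    """Sort rows by their Z-order key over two integer columns."""
    return sorted(rows, key=lambda r: z_value(r[col_x], r[col_y]))
```

Z-ordering pays off because, once rows are sorted this way and written out in chunks, each file's min/max statistics on both columns become narrow, so queries filtering on those columns can skip more files.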

Getting started

element61 has extensive experience in Delta and Databricks Delta. Contact us for coaching, trainings, advice and implementation.