Organization & context

The Healthbox 3 - a fully IoT-enabled product

Ambition & project focus

Smart and IoT-enabled products like the Healthbox 3.0 generate a lot of (real-time) data. To turn this data into smart services for end-users, Renson needs a capable, scalable and flexible platform. To enable these capabilities, Renson has been building a data platform that handles the incoming data flow efficiently and exploits that data to generate additional value for customers. This platform has been designed using a cloud-first approach on Azure.

The data platform is there to create better products and to deliver better customer service. Some specific use cases where the data platform proved crucial are:

- Assessment of the installation quality:

  - i.e., Renson products are installed by third-party professionals. One of the current use cases consists of assessing the quality of these installations through data analysis. That way, Renson can ensure that the end customer experiences the product in an optimal way.

- Uncovering the need for (software) correction in sensors:

  - e.g. based on measured vs. expected values

- Optimizing comfort (such as air quality, temperature, …) by taking user preferences and building properties into consideration.

The challenge

Originally, the focus on real-time applications naturally led Renson to start with a streaming-only data platform architecture. While this has a lot of advantages, the team came to realize that a streaming-only architecture is not ideal from a cost & performance perspective.

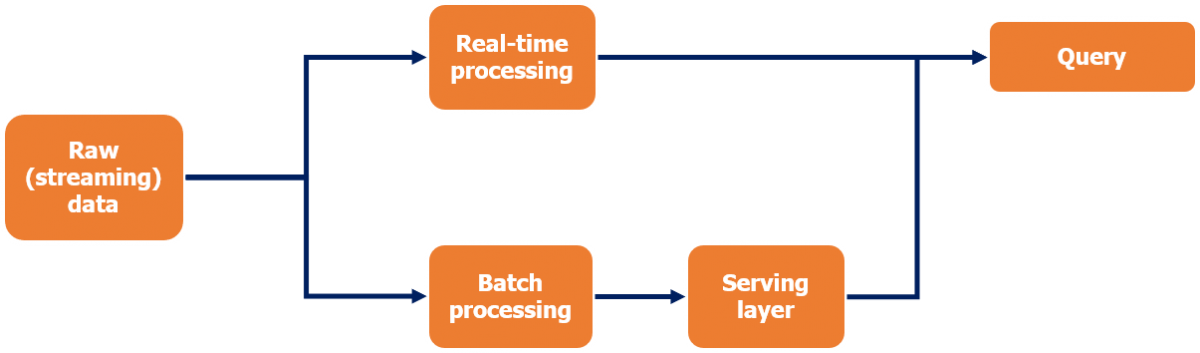

Additionally, most of the above use cases imply the usage of large datasets & machine learning methods. This has led Renson to realize that the streaming architecture needs to be complemented with a batch aspect, which de facto results in a modern data platform (lambda) architecture.

Renson worked with element61 to design, set up & enable the modern data platform in Microsoft Azure Cloud. By combining real-time data processing with batch processing, the project enabled Renson to tackle its use cases efficiently while remaining future-proof for further Machine Learning and analytics use cases.

Simplified overview of the technical data flows at Renson

Under the hood

The modern data platform at Renson consists of various Azure building blocks. As IoT platforms typically generate considerable volumes of semi-structured or unstructured data, one of the crucial features was implementing a Data Lake and leveraging Apache Spark for scalable data engineering & data science.

Data Lake

In its original architecture, Renson didn't have a Data Lake. As a result, all data was stored in a transactional NoSQL database, which made it expensive to query and resource-intensive for large analyses. With a Data Lake added, Renson could benefit from:

- Virtually unlimited scalability to ingest & store massive data volumes while remaining cost-efficient.

- Handling of unstructured as well as (semi)-structured data in a smart structure

- Machine-learning readiness, as data can be analysed using Python & distributed frameworks (like Spark)

The Data Lake was set up in a number of structured zones, including bronze, silver and gold, which contain the ingested, cleaned & fully transformed data respectively. Moving and transforming the data is done with a compute engine (see next).
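To make the zone idea concrete, here is a minimal sketch of data moving from bronze to silver to gold. It is not Renson's implementation: it uses plain Python and a temporary local folder instead of Spark and a cloud Data Lake so that it stays self-contained, and the file names and record fields (`device_id`, `co2_ppm`) are assumptions made up for illustration.

```python
import json
import tempfile
from pathlib import Path


def ingest(lake: Path, raw_lines: list[str]) -> Path:
    """Bronze: store the incoming payloads exactly as received."""
    target = lake / "bronze" / "readings.jsonl"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text("".join(line + "\n" for line in raw_lines))
    return target


def clean(lake: Path) -> Path:
    """Silver: keep only parseable records that have the expected fields."""
    source = lake / "bronze" / "readings.jsonl"
    target = lake / "silver" / "readings.jsonl"
    target.parent.mkdir(parents=True, exist_ok=True)
    cleaned = []
    for line in source.read_text().splitlines():
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # unparseable payloads stay in bronze only
        if (
            isinstance(record, dict)
            and record.get("device_id")
            and isinstance(record.get("co2_ppm"), (int, float))
        ):
            cleaned.append(json.dumps(record))
    target.write_text("".join(line + "\n" for line in cleaned))
    return target


def aggregate(lake: Path) -> Path:
    """Gold: a fully transformed dataset, here the average CO2 per device."""
    source = lake / "silver" / "readings.jsonl"
    target = lake / "gold" / "avg_co2_per_device.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    values_per_device: dict[str, list[float]] = {}
    for line in source.read_text().splitlines():
        record = json.loads(line)
        values_per_device.setdefault(record["device_id"], []).append(record["co2_ppm"])
    averages = {
        device: sum(values) / len(values)
        for device, values in values_per_device.items()
    }
    target.write_text(json.dumps(averages, sort_keys=True))
    return target


def run_pipeline(raw_lines: list[str]) -> dict:
    """Run all three zones in a throwaway directory and return the gold result."""
    with tempfile.TemporaryDirectory() as tmp:
        lake = Path(tmp)
        ingest(lake, raw_lines)
        clean(lake)
        return json.loads(aggregate(lake).read_text())
```

Because bronze keeps the raw payloads untouched, the silver and gold steps can be rerun at any time with improved cleaning or aggregation logic without having to collect the data again.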

Continue reading here to know more about a Data Lake

Spark compute using Databricks & Azure Batch

Apache Spark was selected as the computation framework to carry out all transformation operations. Spark allowed the team to read the Data Lake efficiently while continuing to work in Python (PySpark) for all data wrangling.

As Spark is a broadly supported & widely implemented open-source framework, Renson evaluated a variety of ways to run it, ranging from self-hosted on-premises installations, to managed clusters within a cloud provider, to fully managed solutions (e.g. Azure Databricks).

Although Azure Databricks is still used for ad-hoc analyses and data science workloads, Renson decided to run all (data engineering) transformation steps using a containerized implementation (i.e. Docker) of Apache Spark. With the Azure Batch service as the underlying computation service, this approach was deemed a good middle ground: it leverages the scalability of cloud solutions while keeping some flexibility to swap to alternative engines in the long run. The choice for Azure Batch was also supported by the fact that this service is cost-efficient & scales well for large reruns (e.g. rerunning a data pipeline for a complete year).
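One way such a large rerun can scale is by splitting it into independent tasks that a batch service runs in parallel. The sketch below only illustrates that splitting step and does not call any Azure Batch API; the task fields, the `--start`/`--end` arguments and the pipeline name are hypothetical, and it assumes the pipeline can process one date range at a time.

```python
from datetime import date, timedelta


def month_partitions(year: int) -> list[tuple[date, date]]:
    """Return (first_day, last_day) for each month of the given year."""
    partitions = []
    for month in range(1, 13):
        start = date(year, month, 1)
        # The first day of the following month, minus one day, is this month's last day.
        next_start = date(year + 1, 1, 1) if month == 12 else date(year, month + 1, 1)
        partitions.append((start, next_start - timedelta(days=1)))
    return partitions


def rerun_tasks(pipeline: str, year: int) -> list[dict]:
    """Describe one independent task per month (hypothetical task format)."""
    return [
        {
            "task_id": f"{pipeline}-{start:%Y-%m}",
            "args": ["--start", start.isoformat(), "--end", end.isoformat()],
        }
        for start, end in month_partitions(year)
    ]
```

For example, `rerun_tasks("air-quality", 2020)` yields twelve task descriptions that do not depend on each other, so they could be submitted together and processed on as many containers as the pool provides.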

Continue reading here to know more about Spark and Azure Databricks

Airflow for scheduling

In the search for a scheduling tool, Airflow was selected over Data Factory. This choice is primarily based on the fact that Python is the go-to programming language within the Renson analytics team. On top of this, Renson deems Airflow to be more flexible and extensible with regard to open-source software and a hybrid cloud scenario.

If you want to know more about Airflow vs. Azure Data Factory - read our insight on Azure Data Factory & Airflow: mutually exclusive or complementary?

Azure DevOps & infrastructure-as-code using Terraform

Version control at Renson is traditionally done using Azure DevOps. On top of this versioning, Renson is leveraging the numerous DevOps features to put CI/CD in practice. As can be expected from a mature data team, application code is automatically tested, packaged and deployed in small iterations using fully automated build & release pipelines. Overall, Renson was an early adopter of a DevOps way of working.

For the infrastructure setup, Renson used to work with an internally developed set of Python scripts to provision infrastructure. As part of the Data Lake setup, the team agreed to experiment with Terraform, an open-source tool by HashiCorp designed specifically for infrastructure-as-code. The implementation turned out to be successful in the Data Lake setup and has been rolled out over an increasing number of projects in the past few months. As a consultancy company, it has been great to see that Renson practises what it preaches by going for innovative solutions and rolling them out rapidly to improve the team’s efficiency in deploying (cloud) infrastructure and managing it during the entire lifecycle.

If you want to know more about Infrastructure-as-code - continue reading here

Solutions & results

The first wave of the project consisted of setting up & tuning the infrastructure components described above. In parallel with the setup of the environment, we started building the first two data jobs.

Over the following months, Renson continued building data jobs. Most of these jobs are used to prepare operational data for use in machine learning experiments. One of the main successes has been that the team can now prepare these datasets in less than 10 minutes, whereas this used to take approximately 3 days due to the need to execute a large number of API calls.

Moreover, this project has also been leveraged to improve the handling of all personal data. As some of the data points that are collected can be classified as personal data, one of the important focus points has been to ensure that all data is processed according to regulations.

Role of element61

At element61, we favour a ‘coaching and co-development’ approach. The typical approach would be to kickstart the project with a short period of near full-time involvement, while gradually shifting down our efforts to allow the customer to take ownership of the project & the applications in place.

At Renson, we started with a one-month phase of active involvement, where element61 was present 3 days per week to kickstart the setup of the necessary cloud components and transformation pipelines. This initial period was followed by three months of bi-weekly visits, where the focus was on tackling specific pain points. Regular status meetings were held throughout the project to keep the developments aligned with the broader context of the company and to keep other teams informed of the progress made on the Data Lake.

On top of the support by element61, the Microsoft team has also offered active support in this project. As the cloud components in the Renson architecture are hosted on Azure, their guidance and support have been helpful in selecting the right components & settings.

Want to know more?

- Check out element61's Azure competences

- Contact us for more information.