At element61, we followed the Databricks Tech Kickoff FY26 to stay up to date with the latest announcements and best practices in data and AI. From Lakeflow and Serverless to managed tables, the event covered important technical developments shaping the industry. In this insight, we share key trends and best practices to help businesses make the most of these innovations.

Trend 1 - Meet Lakeflow - Databricks' data engineering accelerator

Databricks is positioning Lakeflow as the standard for end-to-end pipeline management. During the Tech Kickoff, a session focused on one of its core components: Pipelines (Delta Live Tables, DLT). Earlier limitations have largely been resolved, making DLT a powerful choice for organizations seeking to streamline data engineering with automation, improved streaming performance, and reduced complexity.

A key takeaway was that DLT should be used where it simplifies workflows and speeds up development, while teams can still opt for manual Spark development where needed, without restrictions.

A major highlight was DLT's "APPLY CHANGES" functionality, which automatically handles the edge cases that arise when working with Change Data Capture (CDC). Previously, implementing CDC required writing multiple conditional statements to ensure that only the latest values were applied. With APPLY CHANGES, Databricks provides a built-in mechanism for these complexities, reducing the custom code needed in DLT. Databricks illustrated this with a common use case that otherwise requires a substantial amount of custom logic.
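To make the "multiple conditional statements" concrete, here is a minimal sketch in plain Python (not Spark, and not Databricks' own example) of the rules a hand-written CDC merge typically has to encode: deduplicate a batch to the newest event per key, ignore events that arrive out of order, and apply deletes. The `ChangeEvent` shape, its `seq` ordering column and the `apply_changes_manually` helper are hypothetical; in a real pipeline the same rules would end up inside a Spark `MERGE`.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One CDC record (hypothetical shape)."""
    key: str                      # business key of the row
    seq: int                      # ordering column, e.g. a commit timestamp or log sequence number
    op: str                       # "UPSERT" or "DELETE"
    value: Optional[dict] = None  # new row contents for an UPSERT


def apply_changes_manually(target: Dict[str, dict], seqs: Dict[str, int],
                           batch: List[ChangeEvent]) -> None:
    """Hand-written CDC merge into an in-memory target table (hypothetical helper).

    target maps key -> current row; seqs maps key -> sequence of the last applied
    event. seqs is kept even after a delete, so stale late arrivals stay ignored.
    """
    # 1. Deduplicate the batch: keep only the newest event per key.
    latest: Dict[str, ChangeEvent] = {}
    for ev in batch:
        if ev.key not in latest or ev.seq > latest[ev.key].seq:
            latest[ev.key] = ev

    for key, ev in latest.items():
        # 2. Ignore events older than what the target already reflects.
        if key in seqs and ev.seq <= seqs[key]:
            continue
        # 3. Apply the operation and record its sequence number.
        if ev.op == "DELETE":
            target.pop(key, None)
        else:
            target[key] = dict(ev.value or {})
        seqs[key] = ev.seq


if __name__ == "__main__":
    target, seqs = {}, {}
    apply_changes_manually(target, seqs, [
        ChangeEvent("c1", 1, "UPSERT", {"city": "Ghent"}),
        ChangeEvent("c1", 3, "UPSERT", {"city": "Antwerp"}),
        ChangeEvent("c1", 2, "UPSERT", {"city": "Brussels"}),  # arrives out of order
    ])
    print(target)  # {'c1': {'city': 'Antwerp'}}
    apply_changes_manually(target, seqs, [ChangeEvent("c1", 4, "DELETE")])
    print(target)  # {}
```

APPLY CHANGES expresses these same rules declaratively: the key columns, the sequencing column and the delete condition are declared once, and the merge logic is handled by the platform.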

The "APPLY CHANGES" feature simplifies CDC and MERGE workflows, eliminating the need for complex manual coding. Compared to a manual CDC approach, it offers:

- Automated Data Handling – No need to write custom logic for keeping the latest record, processing full snapshots, or handling history (e.g., Slowly Changing Dimensions).

- Built-in Edge Case Handling – Supports different CDC scenarios like partial updates (ignoring nulls), historical tracking (SCD2), and one-time backfills.

- Reduced Code Complexity – Instead of writing detailed MERGE logic, developers can rely on predefined functions, reducing the risk of errors.

This reduces over 20 lines of custom code to a concise, declarative approach using the built-in library.
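The SCD Type 2 and partial-update behaviour listed above can likewise be sketched in plain Python. This is a simplified model that assumes events arrive in order (out-of-order handling, which APPLY CHANGES also covers, is left out); the row layout with `start_at`/`end_at` and the `merge_scd2` helper are hypothetical. In DLT itself, the equivalent is declared through APPLY CHANGES, for example via `dlt.apply_changes()` in Python with `stored_as_scd_type=2` and `ignore_null_updates=True`.

```python
from typing import Any, Dict, List, Optional


def merge_scd2(history: List[Dict[str, Any]], key: str, seq: int,
               changes: Dict[str, Any], ignore_null_updates: bool = True) -> None:
    """Apply one in-order change to an SCD Type 2 history (hypothetical helper).

    Each version is a dict with 'key', 'data', 'start_at' and 'end_at';
    the current version of a key has end_at=None.
    """
    current: Optional[Dict[str, Any]] = next(
        (row for row in history if row["key"] == key and row["end_at"] is None), None)
    new_data = dict(current["data"]) if current else {}
    for col, val in changes.items():
        # Partial update: None means "column not sent", so keep the previous value.
        if val is None and ignore_null_updates:
            continue
        new_data[col] = val
    if current is not None:
        if new_data == current["data"]:
            return  # no effective change, so no new version
        current["end_at"] = seq  # close the previous version
    history.append({"key": key, "data": new_data, "start_at": seq, "end_at": None})


if __name__ == "__main__":
    history: List[Dict[str, Any]] = []
    merge_scd2(history, "c1", 1, {"city": "Ghent", "tier": "gold"})
    merge_scd2(history, "c1", 2, {"city": "Antwerp", "tier": None})  # tier not sent
    for row in history:
        print(row)
    # {'key': 'c1', 'data': {'city': 'Ghent', 'tier': 'gold'}, 'start_at': 1, 'end_at': 2}
    # {'key': 'c1', 'data': {'city': 'Antwerp', 'tier': 'gold'}, 'start_at': 2, 'end_at': None}
```

Even this reduced version needs lookup, null handling, change detection and version closing; adding out-of-order events and deletes is where hand-written logic tends to grow well beyond it.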

Below, I’ve summarized additional key takeaways highlighted throughout the session.

- Improved schema management & performance: Databricks has expanded DLT’s integration with Unity Catalog (UC), allowing users to publish tables across multiple schemas. Additionally, liquid clustering enhances performance and query optimization, making data management more flexible and efficient.

- Enhanced debugging with interactive notebooks: A major improvement addresses a common developer challenge: debugging Delta Live Tables. Databricks has introduced interactive, notebook-based execution that lets developers run table definitions cell by cell, which speeds up iteration and makes troubleshooting more intuitive.

- DLT as the preferred choice for streaming: The broader takeaway is that streaming should be done in DLT whenever possible. It offers comprehensive features while significantly reducing coding overhead, making it the go-to solution for efficient, scalable streaming pipelines.

Trend 2 - Databricks keeps pushing managed tables

Another major topic of discussion was the trade-off between managed tables and external tables. While the industry is increasingly leaning towards open ecosystems, Databricks remains committed to managed tables due to their built-in optimizations and performance benefits.

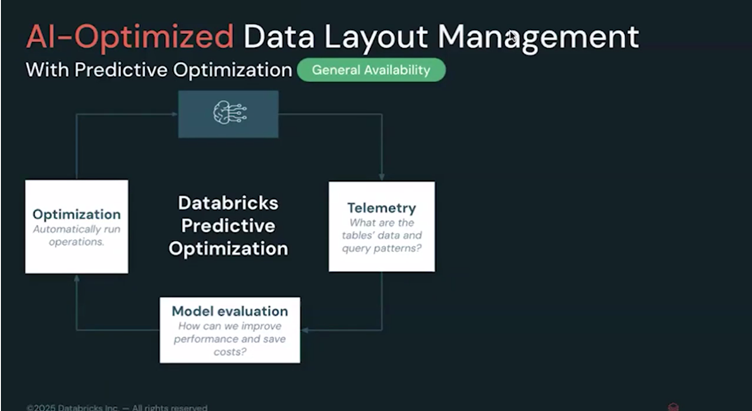

From a technical perspective, data layout and interoperability were key areas of focus. Managed tables now benefit from predictive optimization, which uses feedback loops to improve query performance automatically, without manual tuning. In addition, liquid clustering can be automated with the new AUTO clustering option (currently in public preview), so teams no longer need to choose clustering keys manually based on experience. Databricks also ensures that managed tables remain interoperable with external systems through capabilities such as Delta Sharing.

One of the ongoing debates around external tables is their perceived openness and flexibility. Databricks countered this argument by pointing out that path-based access is insecure and error-prone. Instead, they emphasized that all metadata and table structures can now be accessed via APIs, making managed tables more accessible while maintaining control and governance.

Despite these advancements, a potential business concern remains: managed tables are tightly integrated with Databricks, meaning organizations are more dependent on the platform. However, the efficiency gains from automated performance improvements, predictive optimization, and ease of management may outweigh the drawbacks. As a result, making managed tables the default choice could be beneficial, especially for organizations looking to minimize manual optimization efforts.

Trend 3 - Databricks is pushing serverless compute for notebooks: no more waiting on start-up time

The next key discussion focused on serverless compute for notebooks, which has become popular among developers because it avoids long start-up times when working on data pipelines. Early on, users raised concerns about unexpectedly high costs. While these concerns have not always proved justified, Databricks has since provided guidance and tools to better monitor and control costs. Because serverless compute scales so flexibly, Databricks continues to help users optimize their usage so that it stays cost-efficient as workloads grow.

Databricks explained that every serverless notebook session starts with a lightweight single-node setup. When more compute is needed (e.g., for larger workloads), the system scales up to a driver-worker architecture, which leads to higher costs. To address cost concerns, Databricks introduced several monitoring and optimization tools:

- Built-in system queries that alert users when they exceed a predefined cost threshold.

- Pre-configured usage dashboards to track expenses in real time.

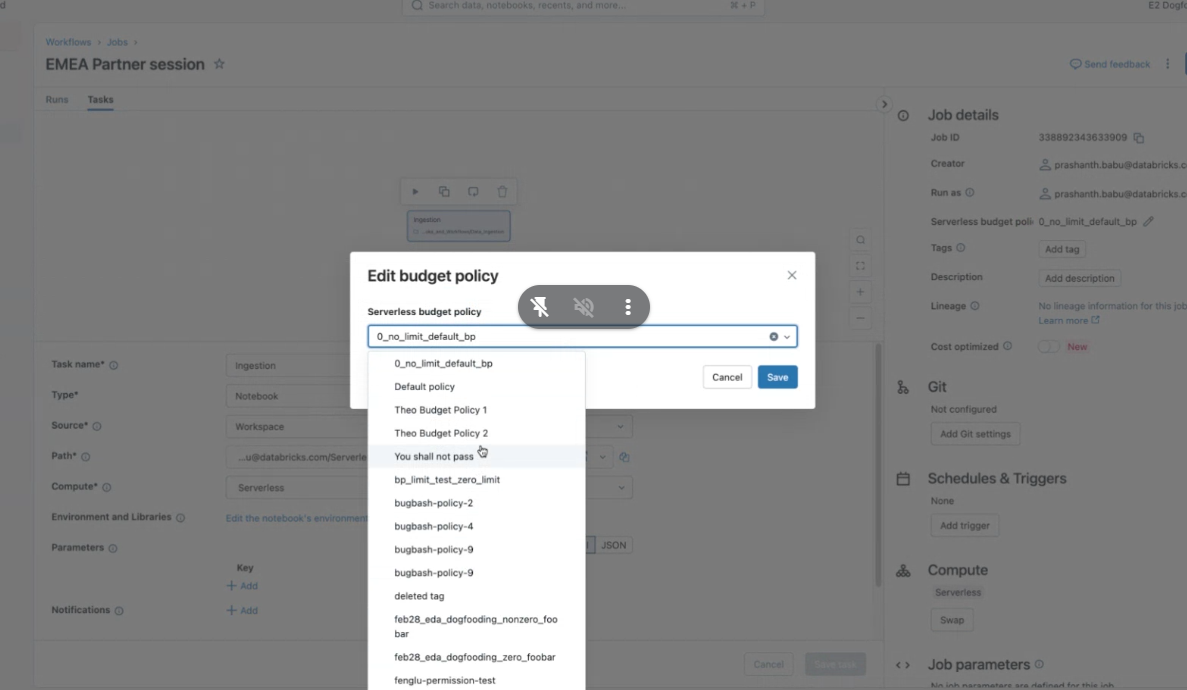

- Private preview of budget policies for workflows, allowing teams to define budgets for serverless compute usage.

- Tagging support, enabling organizations to attribute costs to specific workflows, making cost allocation more transparent.
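Cost attribution through tags and threshold alerts essentially reduces to a group-by followed by a comparison. The sketch below works on in-memory records; in Databricks, the underlying data would typically come from the `system.billing.usage` system table (which includes `custom_tags` and `usage_quantity` columns). The `UsageRecord` shape, the per-DBU prices and the budgets are hypothetical, and this is not how the built-in alerts or budget policies are implemented.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass
class UsageRecord:
    """Simplified billable-usage row (hypothetical shape)."""
    dbus: float            # DBUs consumed
    price_per_dbu: float   # hypothetical list price per DBU
    tags: Dict[str, str] = field(default_factory=dict)  # custom tags, e.g. {"team": "finance"}


def cost_by_tag(records: Iterable[UsageRecord], tag_key: str) -> Dict[str, float]:
    """Attribute cost to the value of one tag; usage without that tag is grouped as '<untagged>'."""
    totals: Dict[str, float] = defaultdict(float)
    for r in records:
        totals[r.tags.get(tag_key, "<untagged>")] += r.dbus * r.price_per_dbu
    return dict(totals)


def over_budget(totals: Dict[str, float], budgets: Dict[str, float]) -> List[str]:
    """Tag values whose cost exceeds their budget; values without a budget are not flagged."""
    return sorted(k for k, cost in totals.items() if k in budgets and cost > budgets[k])


if __name__ == "__main__":
    records = [
        UsageRecord(40, 0.5, {"team": "finance"}),
        UsageRecord(10, 0.5, {"team": "marketing"}),
        UsageRecord(8, 0.25),  # untagged usage
    ]
    totals = cost_by_tag(records, "team")
    print(totals)  # {'finance': 20.0, 'marketing': 5.0, '<untagged>': 2.0}
    print(over_budget(totals, {"finance": 15.0, "marketing": 10.0}))  # ['finance']
```

Keeping untagged spend as its own bucket is useful in itself: it shows how much of the bill cannot yet be allocated to a team or workflow.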

Databricks maintains that, over time, the total cost of ownership (TCO) for serverless compute will be lower than traditional compute. To help users evaluate this, they recommend running the same pipeline on both classic compute and serverless compute to compare costs before committing. Additionally, a cost-optimized compute option will be introduced in public preview by the end of April, potentially reducing expenses for development workloads.
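Once the same pipeline has been run on both compute types, the comparison comes down to simple arithmetic: cost per run is billed time multiplied by the effective hourly rate, where classic compute may also accrue cost during start-up and while idling before auto-termination. A minimal sketch with made-up numbers; the `RunMeasurement` fields, rates and durations are hypothetical and should be replaced by figures from your own billing data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunMeasurement:
    """Measured cost drivers of one pipeline run on one compute type (hypothetical fields)."""
    compute: str            # label, e.g. "classic" or "serverless"
    billed_minutes: float   # runtime plus any billed start-up or idle time
    hourly_rate: float      # effective cost per hour (DBUs, plus cloud VMs for classic)


def cost_per_run(m: RunMeasurement) -> float:
    # Multiply before dividing so round inputs stay exact in floating point.
    return m.billed_minutes * m.hourly_rate / 60


def monthly_cost(m: RunMeasurement, runs_per_month: int) -> float:
    return cost_per_run(m) * runs_per_month


def cheaper(a: RunMeasurement, b: RunMeasurement) -> str:
    """Name the compute option with the lower cost per run (ties go to the first)."""
    return a.compute if cost_per_run(a) <= cost_per_run(b) else b.compute


if __name__ == "__main__":
    # Made-up figures: classic bills 20 min runtime + 5 min start-up + 5 min idle.
    classic = RunMeasurement("classic", billed_minutes=30, hourly_rate=6.0)
    serverless = RunMeasurement("serverless", billed_minutes=20, hourly_rate=7.5)
    print(cost_per_run(classic), cost_per_run(serverless))  # 3.0 2.5
    print(cheaper(classic, serverless))                      # serverless
```

With these particular made-up numbers, serverless comes out cheaper despite its higher hourly rate because it bills less time; for long, steady workloads the result can flip, which is why measuring both on a real pipeline is worthwhile.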

Conclusion

The Databricks Tech Kickoff FY26 covered a wealth of best practices, innovations, and strategies - far more than we could capture in a single insight. As businesses look to optimize their data and AI initiatives, staying informed and leveraging the right expertise is key.

At element61, we help organizations navigate these advancements, applying industry best practices to drive efficiency and value.

If you’d like to explore how these insights can benefit your business, get in touch with us!