Introduction

Data integration processes are very time and resource consuming. The amount of data and the size of the datasets are constantly growing but data and information are still expected to be delivered on-time. Performance is therefore a key element in the success of a Business Intelligence & Data Warehousing project and in order to guarantee the agreed level of service, management of data warehouse performance and performance tuning have to take a full role during the data warehouse and ETL development process.

Tuning, however, is not always straightforward. A chain is only as strong as its weakest link. In this context, there are five crucial domains that require attention when tuning an IBM Infosphere DataStage environment :

- System Infrastructure

- Network

- Database

- IBM DataStage Installation & Configuration

- IBM DataStage Jobs

It goes without saying that without a well performing infrastructure the tuning of IBM DataStage Jobs will not make much of a difference. As the first three domains are usually outside the control of the ETL development team, this article will only briefly touch upon these subjects and will mainly focus on the topics related to the developments done within the IBM InfoSphere DataStage layer. There are also major differences between the underlying architecture of the DataStage Server Edition and the DataStage Parallel Edition. This article will only cover performance tuning for the IBM InfoSphere DataStage Enterprise Edition v 8.x.

Performance Monitoring Best Practices

Usage of Job Monitor

The IBM InfoSphere DataStage job monitor can be accessed through the IBM InfoSphere DataStage Director. The job monitor provides a useful snapshot of a job's performance at a certain moment of its execution, but does not provide thorough performance metrics. Due to buffering and to some job semantics, a snapshot image of the flow might not be a representative sample of the performance over the course of the entire job. The CPU summary information provided by the job monitor is useful as a first approximation of where time is being spent in the flow. That is why a job monitor snapshot should not be used in place of a full run of the job, or a run with a sample set of data as it does not include information on sorts or similar components that might be inserted automatically by the engine in a parallel job. For these components, the score dump can be of assistance.

Usage of Score Dump

In order to resolve any performance issues it is essential to have an understanding of the data flow within the jobs. To help understand a job flow, a score dump should be taken. This can be done by setting the APT_DUMP_SCORE environment variable to "true” prior to running the job.

When enabled, the score dump produces a report which shows the operators, processes and data sets in the job and contains information about :

- Where and how data was repartitioned.

- Whether IBM InfoSphere DataStage has inserted extra operators in the flow.

- The degree of parallelism each operator has run with, and on which nodes.

- Where data was buffered.

The score dump information is included in the job log when a job is run and is particularly useful in showing where IBM InfoSphere DataStage is inserting additional components/actions in the job flow, in particular extra data partitioning and sorting operators as they can both be detrimental to performance. A score dump will help to detect superfluous operators and amend the job design to remove them.

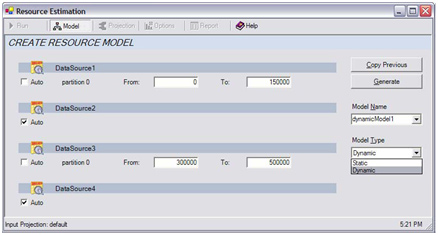

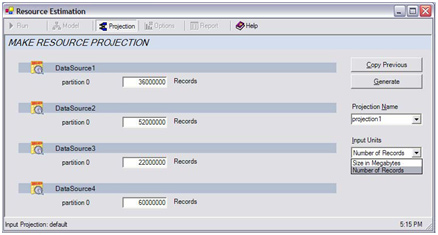

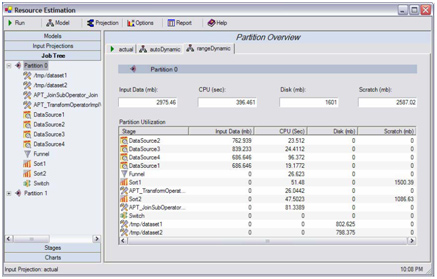

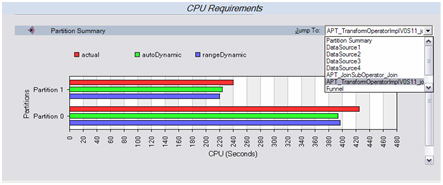

Usage of Resource Estimation

Predicting hardware resources needed to run DataStage jobs in order to meet processing time requirements can sometimes be more of an art than a science.

With new sophisticated analytical information and deep understanding of the parallel framework, IBM has added Resource Estimation to DataStage (and QualityStage) 8.x. This can be used to determine the needed system requirements or to analyze if the current infrastructure can support the jobs that have been created.

This option opens a dialog called Resource Estimation. The Resource Estimation is based on a modelization of the job. There are two types of models that can be created:

- Static. The static model does not actually run the job to create the model. CPU utilization cannot be estimated, but disk space can. The record size is always fixed. The "best case” scenario is considered when the input data is propagated. The "worst case” scenario is considered when computing record size.

- Dynamic. The Resource Estimation tool actually runs the job with a sample of the data. Both CPU and disk space are estimated. This is a more predictable way to produce estimates.

This feature will greatly assist developers in estimating the time and machine resources needed for job execution. This kind of analysis can help when analyzing the performance of a job, but IBM DataStage also offers another possibility to analyze job performance.

Usage of Performance Analysis

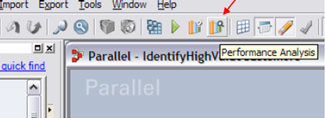

Isolating job performance bottlenecks during a job execution or even seeing what else was being performed on the machine during the job run can be extremely difficult. IBM Infosphere DataStage 8.x adds a new capability called Performance Analysis.

It is enabled through a job property on the execution tab which collects data at job execution time. ( Note: by default, this option is disabled ) . Once enabled and with a job open, a new toolbar option, called Performance Analysis, is made available .

Detailed charts are then available for that specific job run including:

-

Job timeline

-

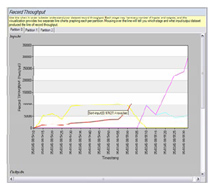

Record Throughput

-

CPU Utilization

-

Job Timing

-

Job Memory Utilization

-

Physical Machine Utilization (shows what else is happening overall on the machine, not just the DataStage activity).

|

|

|

A report can be generated for each chart.

Using the information in these charts, a developer can for instance pinpoint performance bottlenecks and re-design the job to improve performance.

In addition to instance performance, overall machine statistics are available. When a job is running, information about the machine is also collected and is available in the Performance Analysis tool including:

- Overall CPU Utilization

- Memory Utilization

- Disk Utilization

Developers can also correlate statistics between the machine information and the job performance. Filtering capabilities exist to only display specific stages.

General Job Design Best Practices

The ability to process large volumes of data in a short period of time depends on all aspects of the flow and the environment being optimized for maximum throughput and performance. Performance tuning and optimization are iterative processes that begin with job design and unit tests, proceed through integration and volume testing, and continue throughout the production life cycle of the application. Here are some performance pointers:

Columns and type conversions

Remove unneeded columns as early as possible within the job flow. Every additional unused column requires additional buffer memory, which can impact performance and make each row transfer from one stage to the next more expensive. If possible, when reading from databases, use a select list to read only the columns required, rather than the entire table. Avoid propagation of unnecessary metadata between the stages. Use the Modify stage and drop the metadata. The Modify stage will drop the metadata only when explicitly specified using the DROP clause.

So only columns that are really needed in the job should be used and the columns should be dropped from the moment they are not needed anymore. The OSH_PRINT_SCHEMAS environment variable can be set to verify that runtime schemas match the job design column definitions. When using stage variables on a Transformer stage, ensure that their data types match the expected result types. Avoid that DataStage needs to perform unnecessary type conversions as it will use time and resources for these conversions.

Transformer stages

It is best practice to avoid having multiple stages where the functionality could be incorporated into a single stage, and use other stage types to perform simple transformation operations. Try to balance load on Transformers by sharing the transformations across existing Transformers. This will ensure a smooth flow of data.

When type casting, renaming of columns or addition of new columns is required, use Copy or Modify Stages to achieve this. The Copy stage, for example, should be used instead of a Transformer for simple operations including :

- Job Design placeholder between stages

- Renaming Columns

- Dropping Columns

- Implicit (default) Type Conversions

A developer should try to minimize the stage variables in a Transformer stage because the performance of a job decreases as stage variables are added in a Transformer stage. The number of stage variables should be limited as much as possible.

Also if a particular stage has been identified as one that takes a lot of time in a job, like a Transformer stage having complex functionality with a lot of stage variables and transformations, then the design of jobs could be done in such a way that this stage is put in a separate job all together (more resources for the Transformer Stage).

While designing IBM DataStage Jobs, care should be taken that a single job is not overloaded with stages. Each extra stage put into a job corresponds to less resources being available for every stage, which directly affects the job performance. If possible, complex jobs having a large number of stages should be logically split into smaller units.

Sorting

A sort done on a database is usually a lot faster than a sort done in DataStage. So – if possible – try to already do the sorting when reading data from the database instead of using a Sort stage or sorting on the input link. This could also mean a big performance gain in the job, although it is not always possible to avoid needing a Sort stage in jobs.

Careful job design can improve the performance of sort operations, both in standalone Sort stages and in on-link sorts specified in other stage types, when not being able to make use of the database sorting power.

If data has already been partitioned and sorted on a set of key columns, specify the ?don't sort, previously sorted? option for the key columns in the Sort stage. This reduces the cost of sorting and takes more advantage of pipeline parallelism. When writing to parallel data sets, sort order and partitioning are preserved. When reading from these data sets, try to maintain this sorting if possible by using the Same partitioning method.

The stable sort option is much more expensive than non-stable sorts, and should only be used if there is a need to maintain row order other than as needed to perform the sort.

The performance of individual sorts can be improved by increasing the memory usage per partition using the Restrict Memory Usage (MB) option of the Sort stage. The default setting is 20 MB per partition. Note that sort memory usage can only be specified for standalone Sort stages, it cannot be changed for inline (on a link) sorts.

Sequential files

While handling huge volumes of data, the Sequential File stage can itself become one of the major bottlenecks as reading and writing from this stage is slow. Certainly do not use sequential files for intermediate storage between jobs. It causes performance overhead, as it needs to do data conversion before writing and reading from a file. Rather Dataset stages should be used for intermediate storage between different jobs.

Datasets are key to good performance in a set of linked jobs. They help in achieving end-to-end parallelism by writing data in partitioned form and maintaining the sort order. No repartitioning or import/export conversions are needed.

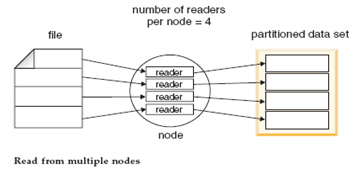

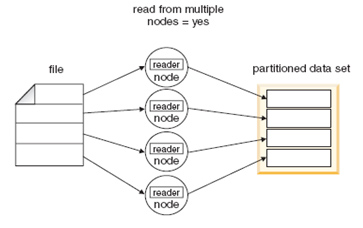

In order to have faster reading from the Sequential File stage the number of readers per node can be increased (default value is one). This means, for example, that a single file can be partitioned as it is read (even though the stage is constrained to running sequentially on the conductor mode).

The options "Read From Multiple Nodes” and "Number of Readers Per Node” are mutually exclusive.

Runtime Column Propagation

Also while designing jobs, care must be taken that unnecessary column propagation is not done. Columns, which are not needed in the job flow, should not be propagated from one stage to another and from one job to the next. As much as possible, RCP (Runtime Column Propagation) should be disabled in the jobs.

Join, Lookup or Merge

One of the most important mistakes that developers make is to not have volumetric analyses done before deciding to use Join, Lookup or Merge stages.

IBM DataStage does not know how large the data set is, so it cannot make an informed choice whether to combine data using a Join stage or a Lookup stage. Here is how to decide which one to use …

There are two data sets being combined. One is the primary or driving data set, sometimes called the left of the join. The other dataset are the reference data set or the right of the join.

In all cases, the size of the reference data sets is a concern. If these take up a large amount of memory relative to the physical RAM memory size of the computer DataStage is running on, then a Lookup stage might crash because the reference datasets might not fit in RAM along with everything else that has to be in RAM. This results in a very slow performance since each lookup operation can, and typically will, cause a page fault and an I/O operation.

So, if the reference datasets are big enough to cause trouble, use a join. A join does a high-speed sort on the driving and reference datasets. This can involve I/O if the data is big enough, but the I/O is all highly optimized and sequential. After the sort is over, the join processing is very fast and never involves paging or other I/O.

Databases

The best choice is to use Connector stages if available for the database. The next best choice is the Enterprise database stages as these give maximum parallel performance and features when compared to 'plug-in' stages. The Enterprise stages are:

- DB2/UDB Enterprise

- Informix Enterprise

- Oracle Enterprise

- Teradata Enterprise

- SQLServer Enterprise

- Sybase Enterprise

- ODBC Enterprise

- iWay Enterprise

- Netezza Enterprise

Avoid generating target tables in the database from the IBM DataStage job (that is, using the Create write mode on the database stage) unless they are intended for temporary storage only. This is because this method does not allow, for example, specifying target table space, and inadvertently data-management policies on the database can be violated.

When there is a need to create a table on a target database from within a job, use the Open command property on the database stage to explicitly create the table and allocate table space, or any other options required. The Open command property allows to specify a command (for example some SQL) that will be executed by the database before it processes any data from the stage. There is also a Close property that allows specifying a command to execute after the data from the stage has been processed. (Note that, when using user-defined Open and Close commands, locks should be specified where appropriate).

Tune the database stages for 'Array Size' and 'Rows per Transaction' numerical values for faster inserts, updates and selects. Experiment in changing these values to see what the best performance is for the DataStage job. The default value used is low and not optimal in terms of performance.

Finally, try to work closely with the database administrators so they can examine the SQL-statements used in DataStage jobs. Appropriate indexes on tables can deliver a better performance of DataStage queries.

Conclusion

Performance tuning can be a labor intensive and quite costly process. That is exactly the reason why care for optimization and performance should be taken into account from the beginning of the development process. With the combination of best practices, performance guidelines and past experience, the majority of performance problems can be avoided during the development process.

If performance issues still occur even when performance guidelines have been taken into account during development, then these issues can be tackled and analyzed using the available, discussed tools such as Resource Estimation and Performance Analysis functionalities.