With the release of IBM Cognos Business Intelligence 10.2, IBM introduced a completely new cubing technology. Dynamic Cubes are an in-memory cube technology that builds multidimensional OLAP structures on top of a star or snowflake schema in the server's RAM. IBM markets it as a Big Data solution because it can handle very large data volumes (terabytes). The main goal of Dynamic Cubes is to provide high-performance, high-volume analysis that leverages the existing data structures in the Enterprise Data Warehouse.

The beauty of this technology is its ease of deployment and maintenance. Dynamic Cubes offer an extensive feature list: multiple cubes can be combined in a single virtual cube, and the cubes are aware of in-database aggregates and build in-memory aggregates automatically. Add a strong security model and the fact that Dynamic Cubes are included in the BI platform at no extra cost, and it is clear we have a potential game changer in cubing technology.

Architecture

Dynamic Cubes are part of the Query Service on the IBM Cognos Business Intelligence 10.2 server platform. Dynamic Cubes are highly scalable and provide failover features.

The same cube can be deployed on multiple servers. User queries are distributed across the servers, which provides a scalable architecture, and if one instance goes down, the cube continues running on the other servers. All of this is transparent to the end user.

Image 1: Failover/Scalability Architecture
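To make the distribution and failover behaviour concrete, here is a minimal sketch in Python. It assumes a simple round-robin policy that skips instances which are down. The class `CubeCluster` and the server names are hypothetical, and this is not how the Cognos dispatcher is actually implemented.

```python
from itertools import cycle


class CubeCluster:
    """Hypothetical model of one cube deployed on several servers.

    This is not the Cognos dispatcher; it only illustrates round-robin
    distribution of queries that skips instances which are down.
    """

    def __init__(self, servers: list[str]) -> None:
        self.up = {server: True for server in servers}
        self._order = cycle(servers)
        self._count = len(servers)

    def mark_down(self, server: str) -> None:
        self.up[server] = False

    def route(self) -> str:
        # Try each server at most once for this query.
        for _ in range(self._count):
            server = next(self._order)
            if self.up[server]:
                return server
        raise RuntimeError("no cube instance available")


cluster = CubeCluster(["srv-a", "srv-b", "srv-c"])
print([cluster.route() for _ in range(3)])  # ['srv-a', 'srv-b', 'srv-c']
cluster.mark_down("srv-b")
print([cluster.route() for _ in range(4)])  # ['srv-a', 'srv-c', 'srv-a', 'srv-c']
```

The second call shows the failover idea. Queries keep being answered by the remaining instances, and the caller never needs to know that `srv-b` went down.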

Dynamic Query Mode (DQM) is a new query technology that uses JDBC to retrieve data. It is faster, smarter and more scalable than Compatible Query Mode. DQM offers smart query optimization and extensive security-aware caching, and it works regardless of whether you use a 32-bit or 64-bit environment. It supports many data sources, but the ever-popular Transformer cubes (PowerCubes) are unfortunately not supported. Dynamic Cubes use Dynamic Query Mode to retrieve source data and resolve user queries.

Because Dynamic Cubes are an in-memory technology, the drawback is that extra physical memory needs to be added to the server. Depending on the environment, sizing calls for anywhere from an extra 10 GB of RAM for small environments up to 150 GB for large ones. Given these volumes, it does not make sense to run Dynamic Cubes in a 32-bit environment. If both 32-bit and 64-bit data sources are required, a distributed environment needs to be put in place.

A Software Development Kit (SDK) is included, so cubes can be started programmatically, for example when the ETL process has finished. Cubes can also be started with triggers or administration tasks.

Positioning

IBM Cognos now offers several OLAP technologies, perhaps enough to be confusing. The Cognos Business Intelligence suite includes PowerCubes, DMR and Dynamic Cubes. IBM Cognos TM1 is offered as a separate product but is also well integrated into the IBM Cognos BI suite. The big question is which technology to use when.

PowerCubes have been a core Cognos product for decades. They are largely the reason why IBM Cognos products have been so successful and why Cognos was able to grow to its current size and market share. In recent years IBM has been urging customers to switch to TM1 so that PowerCubes could be deprecated. Nevertheless, PowerCubes are still part of the official product offering.

This is due to the extensive user base and the lack of an alternative in specific situations. A PowerCube is defined in Transformer and runs in a 32-bit environment. This limits cube size to about 2 GB, a limit that is easily reached nowadays and the big disadvantage of PowerCubes. They remain interesting in the current product offering, however, because they are the only technology that makes it very easy to combine multiple data sources. A second big advantage is that the end result is a file that can be analysed offline. If portability or the ability to analyse multiple data sources is a key requirement, PowerCubes are and remain the answer.

OLAP over Relational (OOR), also called Dimensionally Modeled Relational (DMR), is in essence a virtual OLAP cube modeled in IBM Cognos Framework Manager. The data is presented in an OLAP style but is not physically stored on the server. Instead, a query is executed against the database at every user request. This style of modeling is used to enable drill-up and drill-down analysis in Analysis Studio.

The introduction of Dynamic Query Mode brought an advanced, security-aware caching mechanism. When primed correctly, this cache provides performance similar to that of a physical cube. The advantage is that no extra hardware is required and an existing Framework Manager model can be leveraged.

IBM Cognos TM1 is an in-memory cubing technology that allows write-back. It is specifically focused on what-if analysis and on planning, budgeting and forecasting (CPM applications and processes). Although Cognos TM1 can be used for BI reporting and analysis, that is not the primary goal of the technology. For example, until recently it had no automatic aggregation or relative time features.

Like IBM Cognos TM1, Dynamic Cubes are an in-memory technology. In addition, they are aggregate aware and automatically manage in-memory aggregates. They can handle large volumes of data, but the source must be a star or snowflake schema. Dynamic Cubes can handle only one data source per cube and do not allow write-back of data to the cube. Their primary focus is therefore BI analysis and reporting.

Image 2: Positioning

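| Functionality | Technology | Considerations |
|---|---|---|
| Write-back, what-if analysis, planning/budgeting | Cognos TM1 | The only option when write-back is required |
| No write-back needed, star or snowflake schema source | Cognos Dynamic Cubes | Memory and CPU intensive; one data source per cube |
| No write-back needed, several data sources | Cognos PowerCubes | Portable; cube size limited to about 2 GB |
| Leverage an existing Framework Manager model | OLAP over relational (DMR) | Volumes below 25 million rows; no extra hardware |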

In short, Dynamic Cubes are the cubing technology of choice for reporting purposes. They are, however, very memory intensive and will require extra investment in CPUs and memory. If this is an issue, Dimensionally Modeled Relational could be an alternative if volumes are smaller than 25 million rows. If portability and the ability to include multiple data sources are required, PowerCubes remain the technology of choice. When write-back functionality is required, the only option is TM1.
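The rules of thumb above can be summarised as a small decision function. This is only an illustrative encoding of this article's guidance, with hypothetical parameter names. It is not an IBM selection or sizing tool, and real decisions involve more factors.

```python
def choose_technology(write_back: bool,
                      multiple_sources: bool,
                      portable: bool,
                      extra_hardware_ok: bool,
                      fact_rows: int) -> str:
    """Rules of thumb from this article encoded as a function.

    Illustration only: it is not an IBM sizing or selection tool.
    """
    if write_back:
        return "Cognos TM1"  # the only option with write-back
    if multiple_sources or portable:
        return "PowerCubes"  # combine sources, analyse offline
    if not extra_hardware_ok and fact_rows < 25_000_000:
        return "DMR"  # reuse the Framework Manager model, no extra RAM
    # Default for reporting; assumes a star or snowflake schema source.
    return "Dynamic Cubes"


print(choose_technology(False, False, False, True, 500_000_000))  # Dynamic Cubes
```

The order of the checks matters. Write-back overrides everything else, which mirrors the statement that TM1 is the only option in that case.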

Creating Cubes

Image 3: Dynamic Cube Lifecycle

The first step in deploying a cube is creating the cube definition in Cube Designer. A cube consists of measures and dimensions, and dimensions can be shared between multiple cubes. A dimension is made up of hierarchies and levels. Multiple attributes can be added to a level; the Level Unique Key and Caption are mandatory. The more attributes are added, the larger the cube and the more memory it requires. Relative time members can be added to a date dimension for easy roll-up.

Image 4: Relative time members
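The idea behind relative time members can be illustrated with a short sketch that derives period roll-ups from month-level data. The member names ("Current Month", "Prior YTD" and so on) and the function `relative_time` are hypothetical. Cube Designer generates its own relative time members, and this is not its implementation.

```python
from datetime import date


def relative_time(monthly: dict[date, float], current: date) -> dict[str, float]:
    """Hypothetical relative time roll-ups over month-level data.

    `monthly` maps the first day of each month to a measure value.
    """
    if current.month == 1:
        prior_month = date(current.year - 1, 12, 1)
    else:
        prior_month = date(current.year, current.month - 1, 1)
    ytd = sum(v for d, v in monthly.items()
              if d.year == current.year and d.month <= current.month)
    prior_ytd = sum(v for d, v in monthly.items()
                    if d.year == current.year - 1 and d.month <= current.month)
    return {
        "Current Month": monthly.get(current, 0.0),
        "Prior Month": monthly.get(prior_month, 0.0),
        "YTD": ytd,
        "Prior YTD": prior_ytd,
    }


sales = {date(2012, 1, 1): 1.0, date(2012, 2, 1): 2.0, date(2012, 12, 1): 5.0,
         date(2013, 1, 1): 10.0, date(2013, 2, 1): 20.0, date(2013, 3, 1): 30.0}
print(relative_time(sales, date(2013, 2, 1)))
# {'Current Month': 20.0, 'Prior Month': 10.0, 'YTD': 30.0, 'Prior YTD': 3.0}
```

Report authors get these periods as ready-made members instead of writing the date arithmetic themselves.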

Dynamic Cubes offer support for multiple languages. Both the descriptions and the data in the columns can be defined to take advantage of multilingual features.

Image 5: Multilingual Features

Image 6: Adding measures

Measures are added at cube level. A base cube can contain only one fact table source.

Each fact table is joined to its dimensions. An actuals table and a targets table would therefore require two base cubes. Fortunately, these two cubes can be combined in a virtual cube. Queries on the virtual cube are decomposed to the granular level of the base cubes, and the results are combined logically. Shared dimensions and levels are merged if they have the same name, and members with the same Member Unique Name (MUN) are merged as well. Two virtual cubes can in turn be combined in another virtual cube. Virtual cubes have no cache of their own; they use the caches of their base cubes.

Image 7: Virtual Cubes
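The merge rule for members can be pictured as a dictionary merge keyed on the MUN. The following is a simplified sketch. The bracketed strings only stand in for real Cognos MUNs, and the function name `virtual_cube` is hypothetical. Because the result has the same shape as its inputs, virtual cubes can be nested, as described above.

```python
Cube = dict[str, dict[str, float]]  # MUN -> {measure name: value}


def virtual_cube(*base_cubes: Cube) -> Cube:
    """Hypothetical sketch: merge cubes on Member Unique Name (MUN).

    Members with the same MUN become one member carrying the measures of
    every input cube. In this sketch a measure name that appears in two
    inputs is simply overwritten by the later cube.
    """
    merged: Cube = {}
    for cube in base_cubes:
        for mun, measures in cube.items():
            merged.setdefault(mun, {}).update(measures)
    return merged


actuals = {"[Region].[EMEA]": {"Actual": 120.0}, "[Region].[APAC]": {"Actual": 80.0}}
targets = {"[Region].[EMEA]": {"Target": 100.0}, "[Region].[AMER]": {"Target": 90.0}}
combined = virtual_cube(actuals, targets)
print(combined["[Region].[EMEA]"])  # {'Actual': 120.0, 'Target': 100.0}
```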

Virtual cubes have other uses as well. When the daily load of the data warehouse is finished, cubes need to be updated. Cubes cannot be updated incrementally, so the entire cube must be reloaded. You can put old, unchanging data in one base cube and still-changing data in another, then combine both in a virtual cube. In that case, only the smaller, volatile cube needs to be reloaded after each load. This reduces load times dramatically, and the historical cube only requires a full load when the Cognos BI service is restarted.
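The following sketch shows why the historical/volatile split pays off. It assumes hypothetical cubes that can only be reloaded in full, and it counts how many rows each one reads from the warehouse over three nightly loads. The row counts are made-up illustration values, not measurements.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class BaseCube:
    """Hypothetical base cube that can only be reloaded in full."""
    name: str
    source: Callable[[], list[float]]
    rows: list[float] = field(default_factory=list)
    rows_read: int = 0  # running total of rows read from the warehouse

    def full_reload(self) -> None:
        self.rows = list(self.source())  # no incremental update
        self.rows_read += len(self.rows)


def virtual_total(*cubes: BaseCube) -> float:
    """Answer a total over a virtual cube by combining its base cubes."""
    return sum(sum(cube.rows) for cube in cubes)


history = BaseCube("history", lambda: [10.0] * 1000)  # closed periods
current = BaseCube("current", lambda: [1.0] * 10)     # still changing

# Service start: both base cubes are loaded once.
history.full_reload()
current.full_reload()
# Three nightly ETL runs: only the small, volatile cube is reloaded.
for _ in range(3):
    current.full_reload()

print(history.rows_read, current.rows_read)  # 1000 40
print(virtual_total(history, current))       # 10010.0
```

A single cube holding all the data would have read 1,010 rows on every nightly run. With the split, each run reads only 10.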

When a cube is published, a new data source connection is created, a package containing a compiled version of the cube metadata is added, and the cube is added to the dispatcher. The cube is now ready for use. However, the data is only loaded when a report requests it. That is why it is important to prime the caches. You can do this by running an administration task that refreshes the data and member caches, or by scheduling a report that retrieves most of the data.

Image 8: Published cube
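The effect of priming can be shown with a generic lazy cache. In this minimal sketch, assuming a cache that is filled only on first request, `LazyCache` is hypothetical and the fetch function stands in for a slow query to the data warehouse. Priming moves those slow first requests from the end user to a scheduled task.

```python
from collections.abc import Callable, Hashable, Iterable


class LazyCache:
    """Hypothetical cache that is filled only when a report asks for data."""

    def __init__(self, fetch: Callable[[Hashable], object]) -> None:
        self._fetch = fetch
        self._entries: dict = {}
        self.source_queries = 0  # slow trips to the data warehouse

    def get(self, key: Hashable) -> object:
        if key not in self._entries:
            self.source_queries += 1
            self._entries[key] = self._fetch(key)
        return self._entries[key]

    def prime(self, keys: Iterable[Hashable]) -> None:
        # What a priming admin task or scheduled report achieves up front.
        for key in keys:
            self.get(key)


cache = LazyCache(lambda region: f"members of {region}")
cache.prime(["EMEA", "APAC"])
cache.get("EMEA")  # served from memory, no new source query
print(cache.source_queries)  # 2
```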

Security

Both data and objects can be secured in Dynamic Cubes. Data security restricts which rows an end user sees, for example only a certain region. Object security restricts which dimensions or measures a user sees. For example, nobody except the HR department should see salary information. Object security can be applied at the dimension level and can go as far down as the attribute level.

Implementing data security is an easy three-step process. First, a security filter is defined on a dimension. Next, security views are defined. Finally, security is applied at the data source level in IBM Cognos Administration.

Image 9: Implementing Data Security

Image 10: Implementing object security
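The data and object security concepts can be modelled in a few lines of Python. Everything here is a hypothetical illustration: the dictionaries, group names and functions are not the Cube Designer or Cognos Administration API. The sketch also assumes that a security view grants the union of its filters.

```python
# Hypothetical model of the steps described above; not the Cube Designer API.
# Step 1: security filters defined on the Region dimension.
security_filters = {"EMEA only": {"EMEA"}, "APAC only": {"APAC"}}
# Step 2: security views combine one or more filters (union in this sketch).
security_views = {"Europe": ["EMEA only"], "Global": ["EMEA only", "APAC only"]}
# Step 3: views are assigned to groups (done in Cognos Administration).
group_views = {"Sales Europe": "Europe", "Management": "Global", "HR": "Global"}
# Object security: a measure visible only to the listed groups.
restricted_measures = {"Salary": {"HR"}}


def visible_rows(rows: list[dict], group: str) -> list[dict]:
    """Data security: keep only rows whose region the group may see."""
    filters = security_views[group_views[group]]
    allowed = set().union(*(security_filters[f] for f in filters))
    return [row for row in rows if row["region"] in allowed]


def visible_measures(measures: list[str], group: str) -> list[str]:
    """Object security: hide measures the group is not entitled to."""
    return [m for m in measures
            if m not in restricted_measures or group in restricted_measures[m]]


rows = [{"region": "EMEA", "sales": 5}, {"region": "APAC", "sales": 7}]
print(visible_rows(rows, "Sales Europe"))  # [{'region': 'EMEA', 'sales': 5}]
print(visible_measures(["Sales", "Salary"], "Management"))  # ['Sales']
```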

Aggregates and Dynamic Query Analyzer

Dynamic Query Analyzer is the tuning tool for Dynamic Query Mode. It will give a graphical overview of the query execution plan showing exactly how much time is spent at each step. For Dynamic Cubes, the Dynamic Query Analyzer can also be used to analyze workload log data and implement in-memory or additional in-database aggregates.

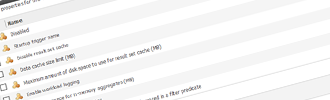

Image 11: Cube properties

The principle is simple: while users query the cube to analyze their data, workload information is stored in a log. To enable workload logging, select Enable workload logging in the cube properties and restart the cube. The Aggregate Advisor in Dynamic Query Analyzer uses this log data. Implementing the recommended in-memory aggregates takes just the push of a button. The Aggregate Advisor also advises on in-database aggregates and provides scripts to implement them. Maintaining in-database aggregates, however, remains the responsibility of the DBA or ETL team. In-database aggregates can be leveraged in the cube by using an Aggregate Cube.

Image 12: Aggregate advisor
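To give a feel for how workload data can drive aggregate recommendations, here is a deliberately naive heuristic. It ranks the level combinations that queries were resolved at by how often they occur. This is a hypothetical simplification and not the algorithm the Aggregate Advisor actually uses. The log format (a tuple of level names per query) is also an assumption.

```python
from collections import Counter


def recommend_aggregates(workload_log: list[tuple[str, ...]],
                         top_n: int = 2) -> list[tuple[str, ...]]:
    """Hypothetical heuristic: rank level combinations by query frequency.

    Each log entry lists the levels one query was resolved at. The real
    Aggregate Advisor applies its own, more elaborate analysis.
    """
    counts = Counter(tuple(sorted(entry)) for entry in workload_log)
    return [levels for levels, _ in counts.most_common(top_n)]


log = [("Year", "Region"), ("Region", "Year"), ("Month", "Product"),
       ("Year", "Region"), ("Month", "Product"), ("Quarter",)]
print(recommend_aggregates(log))  # [('Region', 'Year'), ('Month', 'Product')]
```

Sorting each entry means that queries on the same levels in a different order count toward the same aggregate.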

Licensing

Although Dynamic Cubes come for free, there is an infrastructure implication.

Under the Processor Value Unit (PVU) model, Cognos is essentially licensed for use on a specific hardware platform with a known amount of processing power. Under the named-user model, a certain number of users are entitled to use the IBM Cognos software.

Dynamic Cubes are memory and CPU intensive. Ideally, multiple servers are put in place: one server handles day-to-day reports while another runs the cubes. Fortunately, from a licensing perspective, a distributed and balanced architecture is possible under both models. Under the named-user model, an unlimited number of servers of any size can be set up without additional licensing costs. Under the PVU model, the Dynamic Cubes server and the gateway are not part of the PVU licensing. To preserve maximum computing power for processing reports, both the gateway and the Dynamic Cubes server should therefore be installed on a machine other than the PVU-licensed server.

Conclusion

Dynamic Cubes offer an exciting new way of speeding up reports. Especially for the dashboard application IBM Cognos Workspace, Dynamic Cubes are the preferred data source, offering lightning-fast performance. The automated in-memory aggregates make it quick and easy to tune cubes to their full potential. With every new major and minor release, and even with fix packs, features are added to Dynamic Cubes and Dynamic Query Mode, which shows the importance IBM places on this technology.

As a Business Analytics Premier Business Partner, element61 maintains a constant and direct relationship with IBM. element61's experts have a complete overview and understanding of the solutions that IBM Business Intelligence can offer. We have obtained the highest levels of certification in IBM Cognos technology and can act as a Support Providing Partner. Feel free to contact us for any further information.