Introduction

Data quality is an often underestimated topic within data warehouse & business intelligence solutions.

Most companies are content in their belief that their data is good enough, although they have no factual basis for that belief. It takes facts to change their minds into a more correct awareness: ‘I had no idea the problem was that large'.

An undertaking of improving data quality or getting insight into the data quality provided, should answer amongst other the following questions;

- Which data quality issues exist?

- Is data quality getting better or worse?

- Which source systems or data providers generate the most and least data quality issues?

- Are there interesting patterns or trends revealed over time?

- Which business (decisions) is (are) impacted by a lack of data quality?

- Is there any correlation observable between the data quality levels and the performance of the organization as a whole?

- Which of the data quality issues and validations consume the most/least time in the ETL window?

It is vital that data quality insight gets its proper place within the overall (meta)data & business intelligence strategy.

Finally, it should be noted that a lot of times the (initial) setup of a data warehouse & business intelligence solution is used to measure and sometimes helps in cleansing the source systems data quality.

It is clear that any data quality improvement initiative will need to be a part of an overall data quality assurance program. Within such a program the right attention also needs to be given to, amongst others, an overall data governance framework and certain data quality related roles, such as data stewards. The objective of this Insight however is to offer an introduction to data quality in general and a data quality technique in more detail.

The data quality technique set forth in this insight is based on an article written by Ralph Kimball and additional information provided in the book ‘Data Quality: The accuracy dimension' by Jack Olson.

Before diving into the topic of data quality it is important to stand still at the first part of the expression ‘data quality':

Data

-

drives the current operations of an organization

-

records the history of activities of an organization

-

is used as basis for important future business decisions

-

gets more valuable, time after time, as additional ways are found to employ it (e.g. RFID – Radio-Frequency Identification, which is an automatic identification method, relying on storing and remotely retrieving data using devices called RFID tags. RFID technology enables improved insight in item locations, item movements and other item properties, such as temperature, on a very granular level)

-

volumes are increasing exponentially whereas data is generated by more people, is used in the execution of more tasks by more people, etc.

-

is the main

asset of many large organizations like insurance companies, banks, etc. but also increasingly for

manufacturing organizations, which merely produce and ship products, but use data that drives processes of material acquisition, shipping, billing, just-in-time delivery, ...

Data quality

A strong correlation exists between data & its use, therefore: Data has quality if it satisfies the requirements of its intended use. This is best explained through a simple example:

Suppose having a database containing records of Belgian doctors, where the data quality is assumed to be approximately 85% (records missing, double information, incorrect values, etc.).

-

When this database is used for notifying doctors about a new law concerning assisted suicide, then data quality would be considered as low

-

When this database is used to find potential customers for a new surgical device manufacturer, then data quality would be considered as high

Data quality has various dimensions: accuracy, timeliness, relevance, completeness, understood & trusted. However if the data is not accurate to start with, then the other dimensions are of little importance.

Possible impact of poor-quality data

Next to a decreasing end-user confidence in IT applications, there's a significant financial impact on business. Some examples:

-

Transaction rework costs: e.g. Organizations which have entire departments handling customer complaints on mishandled orders where data errors might be the cause: wrong part numbers, wrong amounts, incorrect shipping addresses

-

Cost incurred in implementing new systems: e.g. New systems which need to cope with older systems rules, data migration, bad data, un-documentation

-

Delays in delivering data to decision makers: e.g. Wrong data can require, in a recurring way, manual massaging of the information before it can be released for consumption

-

Poor customer satisfaction or lost customers through poor service: e.g. Customers consistently getting orders shipped incorrectly, customers getting wrong invoices

-

Lost production through supply chain problems: e.g. Supply chain systems delivering wrong parts or wrong quantity to the production line, causing an oversupply or a stoppage

Sources for inaccurate data

Initial data entry mistakes are obviously one of the main sources for inaccurate data, but not the only ones:

-

Wrong values entered

-

Data entry people who do not care to do their job right

-

Confusing and contradictory data entry screens or forms

-

Procedures that allow for data to not be entered, wrongly entered

or not be entered on time -

Procedures or policies that promote entering wrong values

-

Poorly defined database systems and problems due

to data syncronisation between different systems -

Data that is initially created accurately, but becoming inaccurate over time when the database is not updated to reflect the occurred changes

-

Inaccurate data is often created from perfectly good data through the processes used to move and restructure data such as extract, cleansing, transformation, loading, integration or migration

Problem approach

Before a remedy can be fabricated, it is important to identify the data quality problems. Here 2 approaches can be taken.

Outside-in

Here we look within the business for evidence of negative impacts on the organization that may originate in bad data quality. E.g. returned goods, modified orders, complaints, rejected reports, lost customers, missed opportunities, incorrect decisions, etc. Finally the problems are taken to the data to determine if the data or data entry processes caused the problems or not.

Inside-out

Here we start with the data itself. Inaccurate data is studied to determine the impacts on the business that either already have occurred or have the potential to occur in the future. This methodology depends heavily on analysis of data through a data profiling process.

Inside-out starts with a complete and correct set of rules that define data accuracy for the data. This is additional metadata (element descriptions, permitted values, relationships, etc.). Based on this metadata, inaccurate data evidence can be produced.

The inside-out approach is generally easier to accomplish, will require less time, will use own data quality analysts, whilst minimal bothering other departments. At the same time it will catch many problems which the outside-in approach will not catch.

A data quality program should use both approaches when not wanting to miss some important issues.

Screening

The screening technique is a data warehouse data quality technique which uses the inside-out approach (described above). It is a simple and scalable solution which can be easily integrated next to the existing data warehouse objects.

This technique can be used to execute a data quality assessment on various data sources, being different tables within various functional domains or an assessment of various external data providers delivering similar data sets (e.g. A global company gathering information from its subsidiaries).

For obvious reasons, before enabling a screening module, it is advisable to use data profiling. A data profiling tool will use analytical techniques in order to discover the true content, structure and quality of data of a data source. In the end, in any serious data warehouse & business intelligence project, room should be made for a source assessment phase, where data profiling should be used to early discover the (lack of) quality and associated issues with it in order to keep on track for the rest of the project.

The driving engine of the screening technique consists of a table containing a number of data quality screens. Each screen acts as a constraint or data rule and filters the incoming data by testing one specific aspect of quality. The set of screens can be build gradually based on the knowledge gathered on the source data so far (cfr. data profiling). At any point in the ETL-process, a screen can be added and executed against a set of data, which can result in one of the following:

-

Screen succeeds = data ok

-> Data is loaded further downstream -

Screen fails = data not ok (severe errors)

-> Data is placed in suspension until further investigation or

-> Data will be subject to cleansing or

-> ETL is fully stopped

In the case of different parties delivering their data, and when one or more severe errors are detected, it might be a valid option to not load the data any further, but to ask for new, higher quality data. These severe errors will also aid in reducing the number of iterations required in a pre-production phase, in order to have good quality datasets. In a post-production phase these severe errors will remain as they are. -

Screen fails = data is suspicious (warning)

-> Data is tagged, but loaded further downstream

The sole purpose of ‘warnings' is to flag ‘strange' data, and to communicate this appropriately to the corresponding source owner. In the context of different parties providing their data to a central data warehouse, these warnings will aid in reducing the number of iterations required in a pre-production phase, in order to have initial quality datasets. In a post-production phase the organization can decide to disable these screens. In this case, the warnings do not have the pretention to be an exhaustive formal data content validation. They are merely a help towards the different parties, to improve data quality.

Screens can be categorized in:

-

Column screens – rules based on one single column

E.g.

- Mandatory columns containing null values

- Values not adhering to a predefined list of values

- Numeric values not fitting within a predefined range

- Column lengths which are too long -

Structure screens – rules based on multiple columns

E.g.

- Invalid hierarchical roll ups

- Invalid parent - child relationships

- Invalid foreign key – parent key relationships

- Invalid code – description relationships -

Business rule screens – complex rules

E.g

- ‘Key account' customers should have at least 100.000 euro revenue in a predefined period

In order to avoid having inaccurate data in the data warehouse, the screening should occur as soon as the data has entered within the reach of the data warehouse (e.g. on top of external tables, in the staging area or other) enabling an early diagnosis.

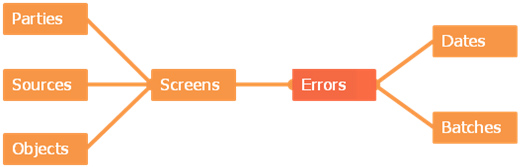

Screening star schema

In the screening star schema all errors are trapped within a single table. This table is designed as a data warehouse fact table which can be easily seen from a number of perspectives or dimensions; by table, by source, by screen, by screen type, by batch, etc. The grain of the error fact table is each individual error triggered by a screen. In practice however it is sometimes advisable for certain errors to only have 1 error-line for a certain type of screen. E.g. when a column in a 100.000 records source table is always empty, then it make much more sense to store only 1 record, with an occurrence of 100.000 instead of vice versa. On the other hand, when working with different parties each delivering their data, you need to make sure, that each (detail or summary) error can be traced back to the original source data.

The screens dimension represents all individual validations or screenings which can be executed against the delivered source data.

Most important attributes:

-

All foreign surrogate keys referring to the primary surrogate keys in the dimensions parties, sources & objects

-

Screen description: describing what the screen is about

-

Screen category: column, structure or business rule screen

-

Screen type: a grouping of screens which logically belong together. E.g. all screens which validate whether a mandatory column is filled in or all Referential Integrity screens

-

Other clusters next to screen type & screen category might be added as well

-

Object: source table or flat file to which the screen applies to

-

Column: column name to which the screen applies to

-

Stage before / after indicator: indication whether the screen should be executed before or after the stage mentioned in the attribute stage

-

Stage: in which ETL stage should the screen be run

-

Processing order: order within the stage in which the screens should be run

-

Corrective action: Possibly a corrective action can be made / defined on the erroneous record. This can be the description of the action, or the actual SQL statement to perform the correction

-

Exception action: should the record be passed or rejected when this type of error is encountered? Is the screen a severe error or merely a warning? Should the ETL be stopped overall?

-

Active flag: possibly a screen is no longer required (retired) or not yet required for a certain source / object. Depending on the requirements a screen can be flagged active in a pre-production phase and can be retired when going to a production phase.

-

Error detail: specifies whether all erroneous records will be logged in the error table, or only a summary will be logged or yet another scenario

-

SQL statement: contains the SQL statement to be executed. Other attributes within this table form the metadata which will be used to dynamically create the SQL statement which needs to be executed. Optionally an explicit SQL statement, which doesn't need to be generated, can be stored here

-

Generate flag: specifies whether the SQL statement needs to be generated or not.When new screens are added, the corresponding SQL statements should be generated by running a procedure

The dates dimension is a typical data warehouse time dimension, containing amongst other a roll up from the calendar date up to year.

The batches dimension represents all occurrences of the overall batch process. A batch contains a predefined number of stages (e.g. flat file to staging area stage). Each stage contains a predefined number of processes (e.g. load customer data from flat file to staging area). Within the processes dimension the following attributes might be very interesting:

-

Process description: for the screening processes, each executed screen will be shown as a process in the process table. Optionally the entire SQL statement which was executed to satisfy a screen could be stored here for reference purposes.

-

Start time: time before a process is started

-

End time: time after a process is ended

-

Time elapsed: time expressed in seconds between start time & end time. This value expresses the time window required for executing a specific screen. Interesting towards aggregation of screen timings and trend analyses for 1 specific screen.

-

Status: expresses whether the screening was successful or a failure

The parties dimension contains all parties which deliver data. This can be

one single entry, up to all delivering subsidiaries for a global company.

one single entry, up to all delivering subsidiaries for a global company.

The sources dimension contains all source systems which can deliver data.

The objects dimension represents all tables or flat files, related to a specific source within a specific party which can deliver data.

Most important attributes:

- Natural key: business key which is defined for the object

The errors fact contains all errors which were triggered by a screen.

Most important attributes:

-

Surrogate primary key in case a single record needs to be identified in the fact table

-

All foreign surrogate keys referring to the primary surrogate keys in the dimensions dates & batches

-

Time: time in seconds since midnight

-

Key: the record id (in case of a flat file) or the business key value (in case of a table) is stored here. To be used as a back pointer to the source system

-

Error description: a comprehensive error description is given here

-

Occurrence: number of occurrences to which the error applies to. 1 by default

Valuable metadata is generated, when the screening technique is used, metadata which forms the diagnosis of the quality of the source systems.

A best practice is to create a reference table containing all possible screens definitions together with clear examples which can be communicated to the source owners. On top of the screening star schema a semantic layer, through means of a business intelligence tool, could be deployed. The objective should be to have a self-explanatory error report. In this way a fully automated loop can be established between data being delivered, data screening and communication returned to the owner.

Advantages of the screening technique

A vast number of advantages exist within the screening solution:

- Framework for capturing all data quality errors

- Framework for measuring data quality over time

- Easily integrated with existing metadata

- Reusable

- Validations, which are no longer needed, can be easily (logically) removed

- Transparent

- Thorough – being as complete as possible

- New validations – screens - can be added easily

- Possibility to qualify severity

- Readable & maintainable by non-technical persons

- Fast

- Low cost

- One central place for all errors

- Error traceability - backward traceability up to the delivered flat files or sources

- Possibility for trend analysis or other analytics via the star schema

Closing

Screening is an inside-out technique for improving data quality or getting insight into the data quality provided.

It should be clear that a technical attempt such as screening will only function when it is part of a data quality assurance program which is accepted by the organization as such.

Only then a foundation can be created for cleaning & redesigning the source systems, forms, processes & procedures.