On June 18th 2020, Databricks announced that Apache Spark 3.0 is available as part of their new Databricks Runtime 7.0. Apache Spark 3.0 itself was released only 8 days earlier, on the 10th of June. We really love these quick innovation cycles and are happy to see Databricks moving so fast here.

What is Spark?

Spark is an open-source distributed cluster-computing framework, built primarily for big data processing. It can rapidly process huge datasets by distributing the computations over a cluster of machines. It provides APIs for languages such as Python, Scala, Java, SQL and R. Today, Spark is the most-used cluster-computing framework for real-time, big data and AI applications.

What is Databricks?

Databricks offers an integrated platform that simplifies working with Spark. It is a popular product that is quickly gaining recognition with companies who want a platform where both data scientists and data engineers can collaborate.

Together they can set up real-time data pipelines, integrate various data sources, schedule jobs, run streaming tasks and much more.

The newest Spark Release: version 3.0

Apache Spark was originally developed in 2009 at UC Berkeley and has been gaining popularity ever since. Spark 2.0 was released 4 years ago and was the first release to include Structured Streaming, a key building block in making Spark usable for real-time big data. At element61, we use Structured Streaming at practically all of our clients that need real-time big data solutions. There have been plenty of smaller releases since then, but Spark 3.0 is a huge one: it resolves more than 3400 Jira tickets, with contributions from people at Facebook, Google, Microsoft, Netflix, etc.

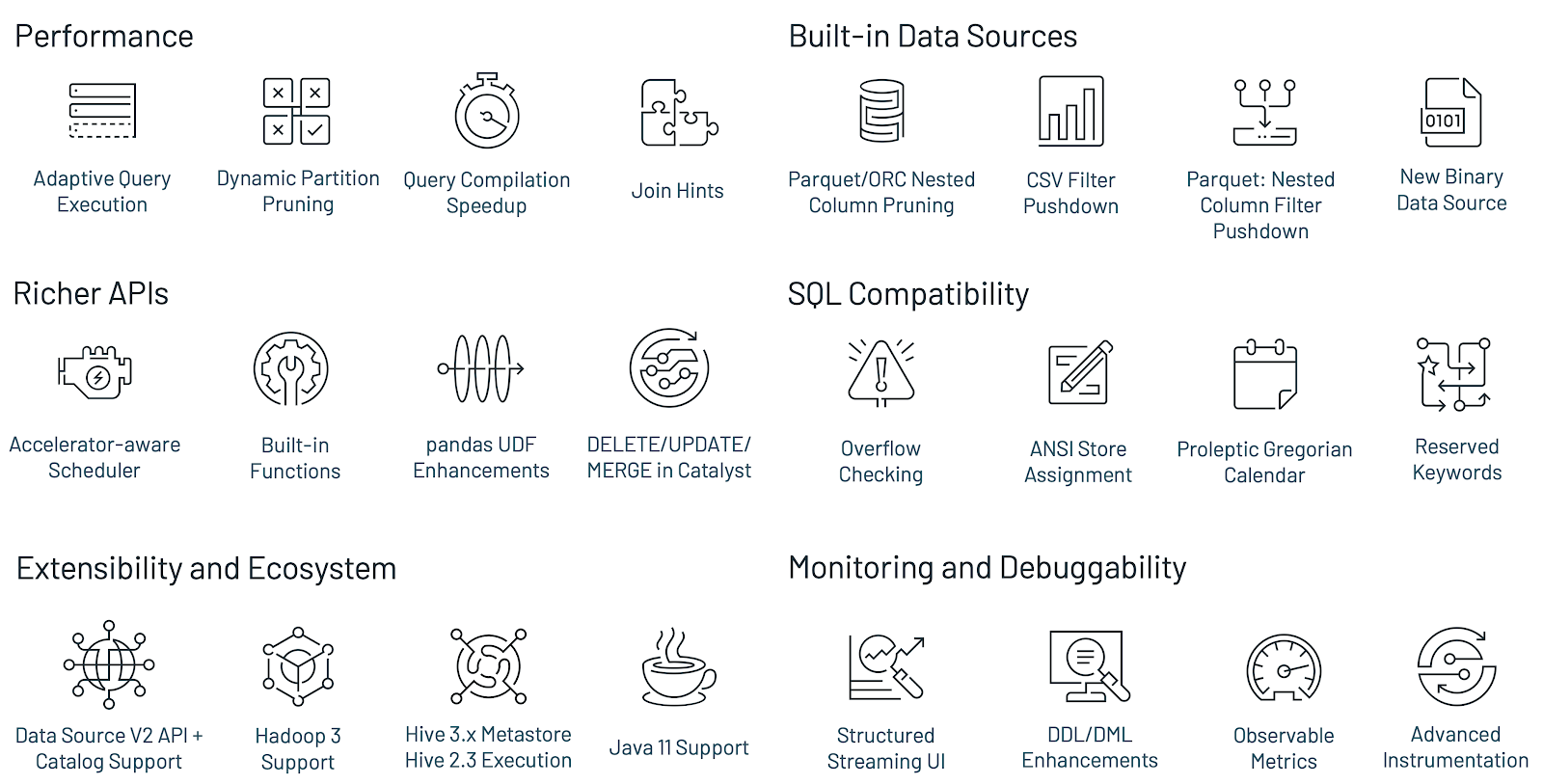

Spark 3.0 includes plenty of new features, bug fixes and other capabilities; the graphic below gives a nice overview. In this insight, however, I will highlight the ones I am most excited to try out at our different clients using the newly released Databricks Runtime 7.0, which includes Apache Spark 3.0.

Overall Performance Improvements

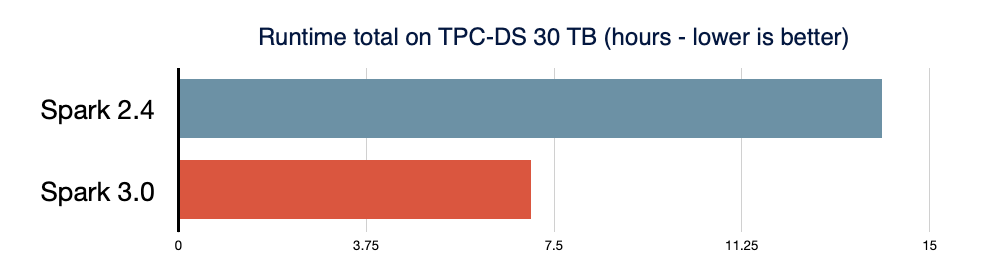

In the world of big data processing, the name of the game is speed! The 3.0 release does not disappoint in that regard. In the TPC-DS 30TB benchmark, the industry-standard benchmark for measuring the performance of data processing engines, Spark 3.0 performs roughly 2x better than Spark 2.4. We tested this ourselves on some decently big datasets and can attest to these improvements: the two queries we tested went down from 10 minutes to 4 minutes and from 60 minutes to 15 minutes respectively, a speedup of 2.5x and 4x.

There are two major features in the new Spark release that contribute most to these performance improvements.

- The first one is the Adaptive Query Execution (AQE) framework, which changes the execution plan of a query at runtime. Before a query runs, Spark estimates how many partitions to shuffle and whether or not to use a broadcast join. Because queries can be quite complicated, this initial estimate is not always the best one. AQE re-optimizes the plan during execution, based on the runtime statistics of the tables involved, to pick a better shuffle partitioning or join strategy. AQE is turned off by default in Spark 3.0, so to use it you need to set the SQL config spark.sql.adaptive.enabled to 'true'. Estimating the optimal number of partitions up front is often tricky, but with AQE it matters less because Spark adjusts it for you behind the scenes.

- The second one is Dynamic Partition Pruning. In big data analytics, it is important to know which parts of the data can be skipped because they are irrelevant to the query. This is called partition pruning. Sometimes the engine cannot determine at compile time which partitions it can eliminate, and this is where Dynamic Partition Pruning comes in. A typical example is a star schema with fact and dimension tables: you filter the dimension table on one side of the join, for instance to only include stores from Belgium, while the sales fact table that would benefit most from pruning is on the other side of the join. Dynamic Partition Pruning makes it possible to still prune in these situations.
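To make the two features above a bit more concrete, here is a minimal sketch of how the related settings could be applied from a notebook. Only spark.sql.adaptive.enabled comes from the text above; the other two keys are related Spark 3.0 settings added for illustration. The helper name `apply_settings`, the `sales` and `stores` tables and their columns are hypothetical, and `spark` is assumed to be an existing SparkSession (as it is in a Databricks notebook).

```python
# Settings related to the two performance features discussed above.
PERFORMANCE_SETTINGS = {
    # Adaptive Query Execution is off by default in Spark 3.0.
    "spark.sql.adaptive.enabled": "true",
    # Lets AQE merge small shuffle partitions; only has an effect when AQE is on.
    "spark.sql.adaptive.coalescePartitions.enabled": "true",
    # Dynamic Partition Pruning switch, set explicitly here for visibility.
    "spark.sql.optimizer.dynamicPartitionPruning.enabled": "true",
}


def apply_settings(spark, settings=PERFORMANCE_SETTINGS):
    """Apply each setting to an existing SparkSession and return what was set."""
    for key, value in settings.items():
        spark.conf.set(key, value)
    return dict(settings)


# Hypothetical star-schema query: the filter sits on the dimension table
# (stores), while the partitioned fact table (sales) is the one worth pruning.
BELGIAN_SALES_QUERY = """
SELECT s.store_id, SUM(s.amount) AS total_amount
FROM sales s
JOIN stores d ON s.store_id = d.store_id
WHERE d.country = 'Belgium'
GROUP BY s.store_id
"""
```

In a notebook this would be used as `apply_settings(spark)` followed by `spark.sql(BELGIAN_SALES_QUERY)`. Whether the fact table is actually pruned depends on how it is partitioned and on the table sizes, so treat this as a starting point rather than a guarantee.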

Observable Metrics

When working with data pipelines that run batch or continuous streaming jobs, it is very important to monitor any changes to your overall data quality. Previously this was quite tricky to do, but with the release of Observable Metrics it is bound to become a lot easier.

Observable Metrics are arbitrary aggregate functions that can be defined on a query. Whenever a batch is finished or a streaming epoch is reached, an event is sent that contains these metrics for the processed data. By listening to these events, you can tailor your monitoring a lot more, for example by sending an email when a certain metric exceeds a pre-defined threshold.
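As a rough illustration of the idea, the sketch below attaches two metrics to a batch DataFrame and checks them against thresholds. It comes with some assumptions: in Spark 3.0 the observe API is exposed on the Scala/Java Dataset, and the Python `DataFrame.observe` and `Observation` used here only arrived in later PySpark releases (3.3 and up, as far as we know). The function names, the metric names and the `customer_id` column are hypothetical.

```python
def breached_thresholds(observed, thresholds):
    """Return the observed metrics whose value exceeds the given threshold."""
    return {
        name: value
        for name, value in observed.items()
        if name in thresholds and value is not None and value > thresholds[name]
    }


def observe_batch(df, id_column="customer_id"):
    """Attach a row count and a null count to a batch DataFrame.

    Assumes a PySpark version that ships pyspark.sql.Observation.
    """
    # Imported here so the threshold helper above works without Spark installed.
    from pyspark.sql import Observation
    from pyspark.sql import functions as F

    observation = Observation("data_quality")
    observed_df = df.observe(
        observation,
        F.count(F.lit(1)).alias("row_count"),
        F.sum(F.col(id_column).isNull().cast("int")).alias("null_ids"),
    )
    return observed_df, observation
```

After an action has run on the returned DataFrame (for example a write), `observation.get` holds the metrics as a dictionary, which can be passed to `breached_thresholds(observation.get, {"null_ids": 0})` to decide whether to send an alert. The alerting itself (email or otherwise) is left out on purpose.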

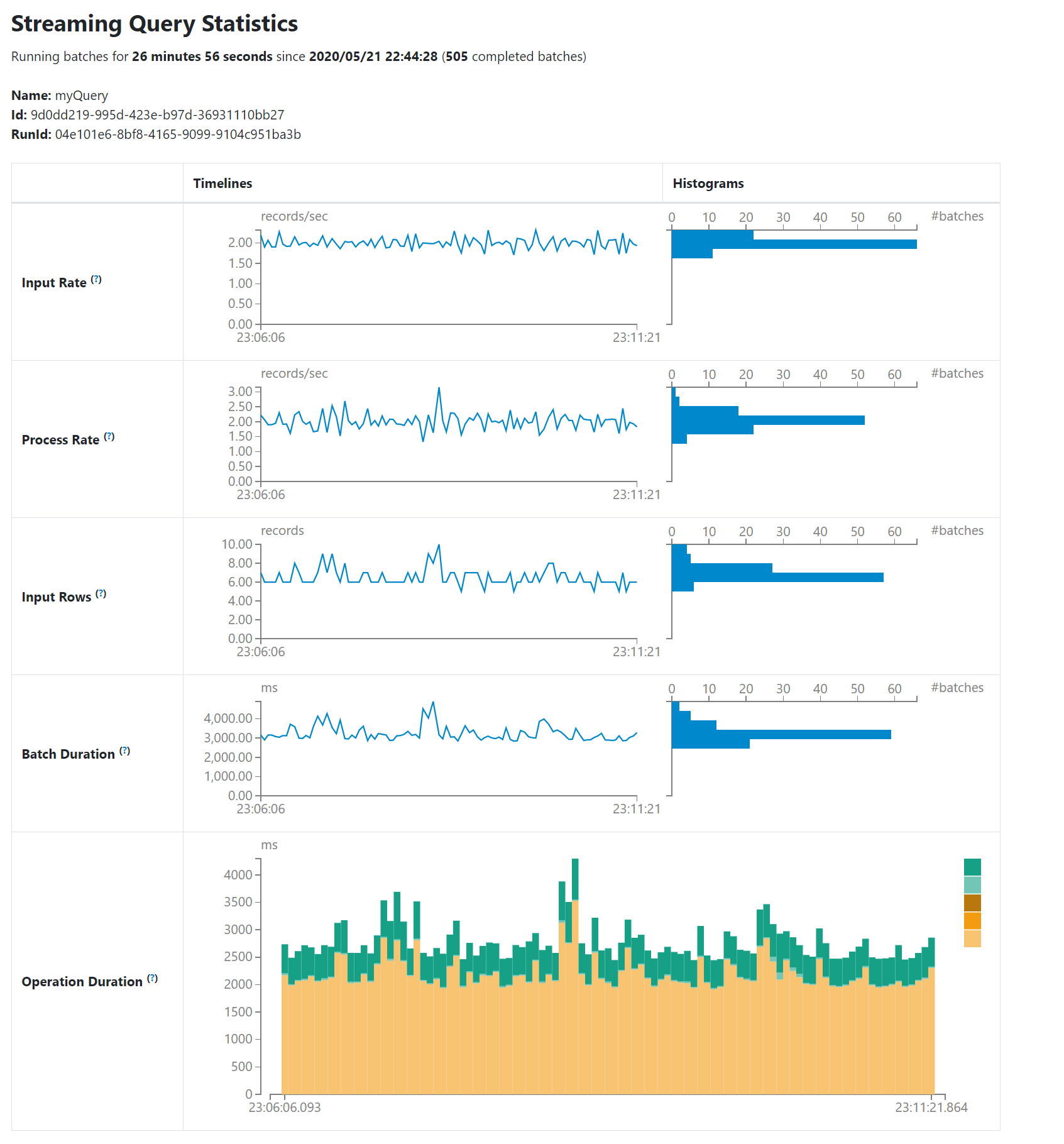

Structured Streaming UI

The Spark 3.0 release adds a new dedicated Spark UI which can be used for analyzing streaming jobs. It adds metrics such as

- the input rate

- the process rate

- the input rows count

- the batch duration

It also shows the duration of the different kinds of operations that make up one complete batch, which are addBatch, getBatch, latestOffset, queryPlanning and walCommit. Previously, looking at the performance of a streaming query was tricky and impractical, so we are very happy that efforts were made in this regard.
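The same kind of numbers can also be read programmatically from the progress report of a streaming query, which is handy for logging them next to what the UI shows. The sketch below assumes the report is a dictionary with the field names used by Structured Streaming progress reports (`numInputRows`, `inputRowsPerSecond`, `processedRowsPerSecond`, `durationMs`). The helper name is hypothetical, the sample values are made up for illustration, and treating `triggerExecution` as the batch duration is our assumption.

```python
OPERATIONS = ("addBatch", "getBatch", "latestOffset", "queryPlanning", "walCommit")


def summarize_progress(progress):
    """Pick the UI-style metrics out of one streaming progress report."""
    durations = progress.get("durationMs", {})
    return {
        "input_rate": progress.get("inputRowsPerSecond"),
        "process_rate": progress.get("processedRowsPerSecond"),
        "input_rows": progress.get("numInputRows"),
        # Assumption: triggerExecution is the total time spent on the batch.
        "batch_duration_ms": durations.get("triggerExecution"),
        "operation_durations_ms": {
            name: durations[name] for name in OPERATIONS if name in durations
        },
    }


# Illustrative values only, not measured output.
sample_progress = {
    "numInputRows": 1200,
    "inputRowsPerSecond": 400.0,
    "processedRowsPerSecond": 600.0,
    "durationMs": {
        "addBatch": 1500,
        "getBatch": 5,
        "latestOffset": 40,
        "queryPlanning": 120,
        "walCommit": 60,
        "triggerExecution": 2000,
    },
}

summary = summarize_progress(sample_progress)
```

With a running query this would be called as `summarize_progress(query.lastProgress)`, keeping in mind that `lastProgress` is empty until the first batch has completed.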

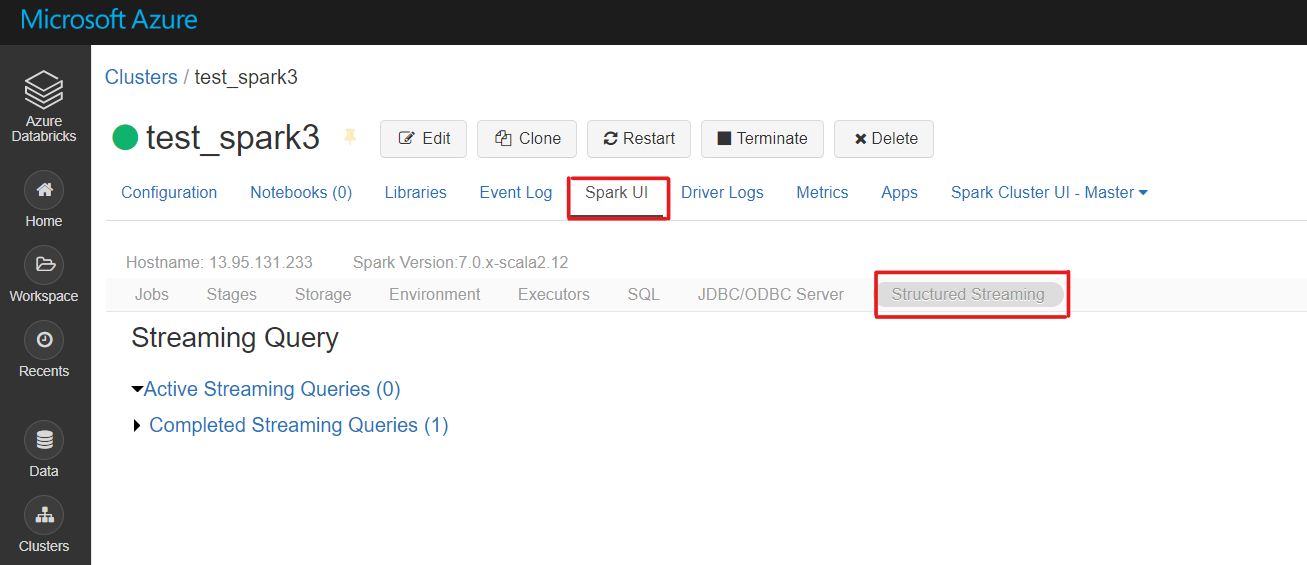

In Databricks, you can find this by clicking on your Spark 3.0 Cluster, selecting Spark UI and selecting the Structured Streaming tab. Unfortunately, the Databricks 7.0 runtime which includes Spark 3.0 does not currently support Azure Event Hub integration (in contrast with all the previous runtimes) but this is something that will be added in the near future.

Compile-time type enforcement

I almost overlooked this particular feature, as it is part of a bigger improvement, namely the ANSI SQL compliance improvements, but it is an exciting change. Until now, schema enforcement has been a big cause of worries and issues when using Spark. For example, when I load new data into a table and a particular column that always used to be an integer suddenly contains float values, Spark used to object, saying it cannot merge the incompatible data types IntegerType and DoubleType. The only way around this was to explicitly CAST the column to DoubleType.

However, in Spark 3.0 this is no longer the case, as Spark will take care of the type coercion according to ANSI SQL standards, and as such will transform the column to a DoubleType column when DoubleType values come in. For me this is a welcome addition to Spark, but for some people this can be unwanted behavior, so it is good to know that the previous behavior can be restored by setting spark.sql.storeAssignmentPolicy to LEGACY (the Spark 3.0 default is ANSI).
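Switching the policy is a one-line config change. The sketch below wraps it in a small helper that rejects typos before they reach Spark. The helper name is hypothetical, `spark` is assumed to be an existing SparkSession, and the three values listed are the ones we know the setting to accept in Spark 3.0.

```python
ALLOWED_POLICIES = ("ANSI", "LEGACY", "STRICT")


def set_store_assignment_policy(spark, policy):
    """Set spark.sql.storeAssignmentPolicy after validating the value."""
    normalized = policy.upper()
    if normalized not in ALLOWED_POLICIES:
        raise ValueError(
            f"Unknown policy {policy!r}, expected one of {ALLOWED_POLICIES}"
        )
    spark.conf.set("spark.sql.storeAssignmentPolicy", normalized)
    return normalized
```

For example, `set_store_assignment_policy(spark, "legacy")` goes back to the Spark 2.x way of handling type mismatches on insert, while `"ANSI"` keeps the Spark 3.0 default.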

Power of the open source

Aside from my favorite highlights of the Spark 3.0 release, there were plenty of other interesting additions such as improvements to Python UDFs, better error handling, accelerator-aware scheduling, etc.

Spark continues to be a great project with a lot of backing by the biggest companies in Tech and element61 is looking forward to applying the new features and improvements of Spark 3.0 in our current and future projects.

Do you have some questions about Spark and Databricks and how it can transform your big data endeavors? Do not hesitate to get in touch!