TL;DR

Databricks Data Classification uses AI to automatically detect, classify, and tag columns containing sensitive PII at the catalog level, enabling centralized and scalable governance. It regularly scans for new data, provides a match score indicating classification confidence, and lets you manually review or remove incorrect tags. Reclassification cannot be triggered manually, and costs are higher because of the AI usage, but the feature greatly simplifies identifying and managing sensitive columns.

Combined with Attribute-Based Access Control (ABAC), governed tags from Data Classification can be used to enforce column masking or row filtering through policies defined at the catalog level. This eliminates the need for table-by-table maintenance, creating a scalable, flexible, and centralized access control system for managing sensitive data in Databricks.

Introduction

Data Classification is a new feature in Databricks that enables the automatic identification, classification, and tagging of columns containing confidential PII at the catalog level. These tags can serve as the basis for access control using Attribute-Based Access Control (ABAC). The result is a scalable, flexible, and centralized access control system in which the tags are controlled and updated at the account level. Policies can then combine the governed tags with UDFs to govern column masking and row filtering centrally.

Data Classification

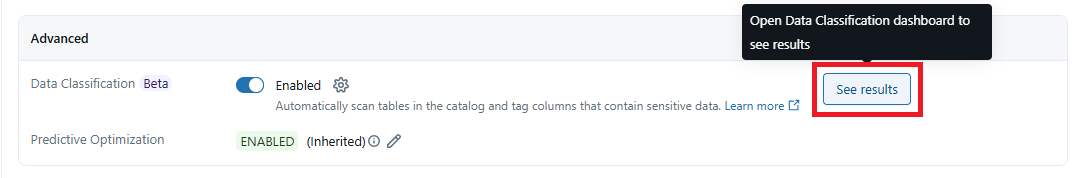

When Data Classification is enabled at the catalog level, Databricks uses AI to scan all tables in the catalog for columns containing sensitive data. When the system detects a column containing PII, it classifies and tags the column. Databricks then periodically runs incremental scans for new data and new tables in the catalog, so new data is classified without a costly re-run over data that has already been classified.

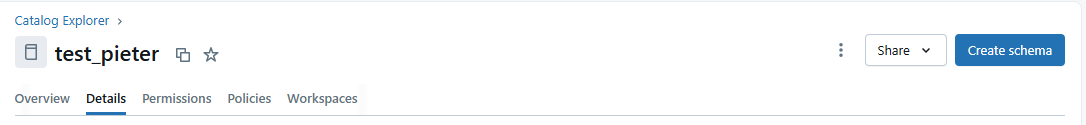

After the classification is complete, you can check the results in Catalog Explorer > Details > Advanced > See results. There you get an overview of the tables and columns that Databricks identified as containing PII and classified.

If you do not see this result, you may need to click on Edit draft and publish.

You can also check the classification log, where you can see newly classified columns over time.

You will also find an overview of every table and column with its classification, a short indication of why the column was classified, and some example column values. Databricks also provides a match score, which indicates how confident Databricks is in the classification.

Original data:

In this example, Databricks successfully classified all 5 sensitive PII columns. For the IBAN bank accounts, however, Databricks did not recognize the values in the column as IBANs. Instead, it derived the classification from the column name.

Databricks allows you to manually remove classifications and their tags, so you can clean up any misclassified data. We recommend regularly checking new classifications to make sure the data is classified correctly. Note that Databricks will not re-classify a column once its generated classification has been removed; incremental scans only check and classify new tables and columns.
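The regular review recommended above could be partly scripted. The sketch below is plain Python, not a Databricks API: it assumes the classification results have been copied into simple records, and the field names, the 0 to 1 score scale, the threshold, the second tag name, and the sample values are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Classification:
    table: str
    column: str
    tag: str            # e.g. the system tag class.email_address
    match_score: float  # assumed to be on a 0.0 to 1.0 scale in this sketch


def needs_review(results: List[Classification], threshold: float = 0.8) -> List[Classification]:
    """Return the classifications whose match score is below the threshold."""
    return [r for r in results if r.match_score < threshold]


# Made-up sample records, for illustration only.
results = [
    Classification("customer", "email", "class.email_address", 0.98),
    # Hypothetical tag name and a low score, mimicking a name-based match.
    Classification("customer", "bank_account", "class.hypothetical_iban_tag", 0.55),
]

for r in needs_review(results):
    print(f"Review {r.table}.{r.column}: {r.tag} (score {r.match_score:.2f})")
```

Columns that land on this list are the ones worth opening in Catalog Explorer first; whether a tag is kept or removed remains a manual decision.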

Performance-wise, Data Classification runs incrementally: when you create a new table or update an existing one, Databricks internally schedules an incremental re-scan, and classification results are available within 24 hours of the change. In our case, it took nearly 3 hours before the new table was classified. A downside is that there is no way to trigger a re-classification manually.

Looking at the cost of Data Classification, we see that tasks are billed at $1.50 per DBU in West Europe, roughly 3 times the rate of serverless Workflows, because the classification process uses AI models. The initial scan is the costliest, as it is a full scan. Later re-scans are cheaper because they run incrementally on new data only. More information on how to track your costs here.
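To get a feel for the numbers, here is a back-of-the-envelope sketch. It assumes that the $1.50 per DBU figure is the Data Classification rate and that "3 times" means the serverless rate is one third of it. The DBU amounts are hypothetical placeholders; actual consumption depends on the size of your catalog.

```python
# Rate quoted in the text for West Europe (assumed to be the classification rate).
CLASSIFICATION_RATE_USD_PER_DBU = 1.50
# Implied serverless rate, assuming "3 times" means a factor of exactly 3.
SERVERLESS_RATE_USD_PER_DBU = CLASSIFICATION_RATE_USD_PER_DBU / 3


def scan_cost(dbus: float, rate: float = CLASSIFICATION_RATE_USD_PER_DBU) -> float:
    """Cost in USD of a scan that consumes the given number of DBUs."""
    return round(dbus * rate, 2)


# Hypothetical DBU consumption, for illustration only.
full_scan_dbus = 40.0    # initial full scan of the catalog
incremental_dbus = 2.0   # one later incremental re-scan

print("Initial full scan:", scan_cost(full_scan_dbus))
print("Incremental re-scan:", scan_cost(incremental_dbus))
print("Same DBUs at the serverless rate:", scan_cost(full_scan_dbus, SERVERLESS_RATE_USD_PER_DBU))
```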

It is important to note that Databricks does not currently support classification for views. However, you can apply classification to the underlying tables.

The list of supported PII classes which can be automatically classified can be found here. We expect this list to grow over time.

Attribute-Based Access Control (ABAC)

Attribute-Based Access Control is another new feature that streamlines access control. ABAC relies on governed tags to build a scalable, flexible, and centralized governance system in which the tags are controlled and updated at the account level. The end goal of ABAC is flexible row-level security through filtering and flexible column-level security through masking, effectively hiding sensitive data from users who lack the required access.

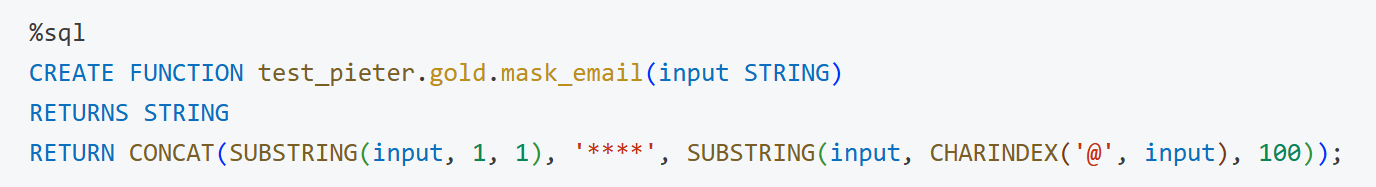

To make use of ABAC, we first create a column masking or row filtering function. In this example, we create a column masking function for email addresses.
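The logic of such a masking function can be sketched in a few lines. The version below is plain Python that only illustrates the masking behaviour; it is not the UDF as registered in Databricks. The function name, the `can_see_pii` flag (standing in for whatever group-membership check your function uses), and the choice to keep the domain visible are assumptions made for this illustration.

```python
from typing import Optional


def mask_email(email: Optional[str], can_see_pii: bool) -> Optional[str]:
    """Return the email unchanged for privileged users, otherwise hide the local part."""
    if email is None or can_see_pii:
        return email
    _local, separator, domain = email.partition("@")
    if not separator:
        # Not a well-formed address: hide the whole value.
        return "***"
    # Keep the domain so the column stays useful for aggregate analysis.
    return "***@" + domain


print(mask_email("jane.doe@example.com", can_see_pii=False))
print(mask_email("jane.doe@example.com", can_see_pii=True))
```

Keeping the domain is a design choice: it still allows counts per email provider or company, while the identifying part of the address stays hidden.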

Then we can create a policy that uses the function and attaches it to a governed tag. A governed tag can be created manually by a user and assigned to a column or, preferably, assigned automatically by Data Classification. Data Classification only assigns the predefined system tags, which can be found here.

When defining policies at the catalog level, all child schemas and tables will automatically inherit these policies, effectively allowing all columns with the same tag to have the same column masking applied. For example, when all email columns in the catalog are tagged with the system tag class.email_address by automatic Data Classification, we can create a policy applying a masking function to all the columns with that system tag. This eliminates the need for table-level masking/filter functions and table-level maintenance of these functions. Before ABAC was introduced, we had to manually apply the row filtering or column masking functions to each table specifically, which is difficult to scale and hard to maintain.
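The inheritance idea can be illustrated with a small conceptual model. This is plain Python, not the Databricks policy API: the table layout, column names, and helper names are hypothetical. The only point is that one catalog-level mapping from tag to masking function covers every tagged column in every table.

```python
from typing import Any, Callable, Dict, Set

# Governed tags per column, per table (hypothetical catalog contents).
CATALOG_TAGS: Dict[str, Dict[str, Set[str]]] = {
    "customer": {"id": set(), "email": {"class.email_address"}},
    "supplier": {"name": set(), "contact_email": {"class.email_address"}},
}


def mask_email_value(value: str) -> str:
    """Hide the local part of an email address, keep the domain."""
    _local, separator, domain = value.partition("@")
    return "***@" + domain if separator else "***"


# A single catalog-level policy: governed tag -> masking function.
POLICIES: Dict[str, Callable[[str], str]] = {"class.email_address": mask_email_value}


def read_row(table: str, row: Dict[str, Any]) -> Dict[str, Any]:
    """Apply the catalog-level policies to one row of the given table."""
    masked = {}
    for column, value in row.items():
        column_tags = CATALOG_TAGS[table].get(column, set())
        # Pick the first policy (in sorted tag order) that matches a tag on this column.
        mask = next((POLICIES[t] for t in sorted(column_tags) if t in POLICIES), None)
        masked[column] = mask(value) if mask else value
    return masked


print(read_row("customer", {"id": 1, "email": "jane@example.com"}))
print(read_row("supplier", {"name": "Acme", "contact_email": "sales@acme.example"}))
```

Adding a third table with a tagged email column would require no change to `POLICIES`, which is the scaling benefit described above.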

Here is an example of column masking on emails, after creating a masking function, applying a policy to the class.email_address governed tag, and letting automatic Data Classification tag the columns.

Customer table:

Supplier table:

ABAC is currently not supported for views, materialized views, or streaming tables. A column mask applied to a table is nevertheless inherited automatically by any view that references that column. Row filters behave differently: you must explicitly include the row filtering logic in the view definition. More information here.

Conclusion

By combining Databricks Data Classification with Attribute-Based Access Control (ABAC), we can automatically identify PII and match it with governed tags. We can then easily set up a catalog-wide access control strategy by attaching the governed tags to a policy that uses a UDF for column masking or row filtering. A single UDF is then applied to all relevant columns, with no table-specific governance setup or maintenance, which makes the approach far easier to scale.

If you are keen to learn more, do reach out via the element61 contact form.