TL;DR

Databricks is evolving into a more business-ready platform by closing legacy gaps in SQL (transactions, stored procedures, collations...), simplifying operational integration with Lakebase, and unifying data engineering through Lakeflow. Unity Catalog now strengthens governance with BI Semantic Layers and ABAC, while Databricks One delivers a consumer-grade UI that brings AI/BI Dashboards, AI/BI Genie and Databricks Apps to business users. Together, these changes reduce migration friction, boost trust in analytics, and expand adoption beyond data teams.

Introduction

The Partner Tech Summit is an annual webinar organized by Databricks exclusively for its partners, including element61. It typically builds on the feature announcements made during the Data & AI Summit, held a couple of months earlier (you can find a summary of this year’s edition here). This year was no exception: the event provided valuable insights into the latest releases, with new features ranging from DBSQL to Gen AI clearly explained and demystified.

The Best Data Warehouse Is a Lakehouse

Databricks SQL continues to evolve, with many new features in either private or public preview. In the past, functionality commonly available in traditional on-premises databases (such as recursive CTEs, selective overwrites, or multi-statement transactions) required writing complex PySpark code. Today, these capabilities are natively supported in DBSQL. Pure BI profiles can now implement advanced business logic that was previously reserved for Spark developers.

To name a few of the pain points Databricks addressed:

- Previously, comparing strings in Databricks often required workarounds such as repeatedly using lower(), upper(), or trim() functions, and even nesting replace() functions when dealing with non-English alphabets. These approaches not only hurt performance but also caused confusion, especially for users coming from environments like SQL Server. With the introduction of collations, data processing becomes far more intuitive. Collations define rules for sorting and comparing text that respect both language and case sensitivity. In practice, this makes databases language- and context-aware, ensuring text is handled exactly as users expect.

```sql
-- Comparisons on `name` ignore case: 'alice' = 'ALICE' evaluates to true
CREATE TABLE emp_name (name STRING COLLATE UTF8_LCASE);
```
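The effect of declaring the comparison rule once, on the column, can be tried locally. The sketch below uses Python's built-in sqlite3 module as a stand-in: SQLite's NOCASE collation plays the role of UTF8_LCASE. This substitution is an assumption for illustration only. NOCASE folds only ASCII letters, whereas Databricks collations are Unicode-aware. The sample names are hypothetical.

```python
import sqlite3

# In-memory SQLite database standing in for a Databricks table (illustration only).
conn = sqlite3.connect(":memory:")

# The comparison rule is declared once on the column, not repeated in every query.
conn.execute("CREATE TABLE emp_name (name TEXT COLLATE NOCASE)")
conn.executemany("INSERT INTO emp_name VALUES (?)", [("Alice",), ("bob",), ("CAROL",)])

# No lower()/upper() wrappers: the column's collation makes '=' case-insensitive.
matches = [r[0] for r in conn.execute("SELECT name FROM emp_name WHERE name = 'ALICE'")]

# ORDER BY follows the collation too (a plain binary sort would put 'CAROL' before 'bob').
ordered = [r[0] for r in conn.execute("SELECT name FROM emp_name ORDER BY name")]

print(matches)  # ['Alice']
print(ordered)  # ['Alice', 'bob', 'CAROL']
```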

- Many legacy data platforms rely on SQL stored procedures for data processing (for example, executing a MERGE statement). In the past, migrating these workloads to Databricks meant rewriting stored procedures as PySpark code, a time-consuming and resource-intensive task. With the introduction of stored procedures governed by Unity Catalog (UC), customers can now take a lift-and-shift approach instead of being forced into a “modernize first” strategy.

- Similarly, tasks like organizational reporting or bill of materials management used to be challenging to implement in Databricks. The arrival of recursive CTEs now makes it possible to traverse hierarchical or graph-structured data using a straightforward, familiar SQL syntax.

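```sql
WITH RECURSIVE bom_qty (component, quantity) AS (
  -- Base case: direct parts of one bike ('bike' is an assumed root identifier)
  SELECT component, quantity
  FROM bill_of_materials
  WHERE parent = 'bike'
  UNION ALL
  -- Recursive case: multiply each child's quantity by its parent's quantity
  SELECT b.component, b.quantity * t.quantity
  FROM bill_of_materials b
  JOIN bom_qty t ON b.parent = t.component
)
SELECT component, SUM(quantity) AS total_quantity
FROM bom_qty
GROUP BY component;
```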
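To see how the recursion unfolds, the sketch below runs a bill-of-materials query through Python's sqlite3 module, which also supports WITH RECURSIVE. The table layout (component, parent, quantity), the root value 'bike' and the sample parts are hypothetical. The query uses UNION ALL so that identical (component, quantity) rows reached through different paths are not silently merged.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE bill_of_materials (component TEXT, parent TEXT, quantity INTEGER)")
# Hypothetical BOM: a bike has 2 wheels and 1 frame; each wheel has 32 spokes and 1 rim.
conn.executemany(
    "INSERT INTO bill_of_materials VALUES (?, ?, ?)",
    [("wheel", "bike", 2), ("frame", "bike", 1), ("spoke", "wheel", 32), ("rim", "wheel", 1)],
)

query = """
WITH RECURSIVE bom_qty (component, quantity) AS (
    -- Base case: direct parts of one bike
    SELECT component, quantity FROM bill_of_materials WHERE parent = 'bike'
    UNION ALL
    -- Recursive case: child quantity times the parent's accumulated quantity
    SELECT b.component, b.quantity * t.quantity
    FROM bill_of_materials b
    JOIN bom_qty t ON b.parent = t.component
)
SELECT component, SUM(quantity) AS total_quantity
FROM bom_qty
GROUP BY component
ORDER BY component
"""
totals = dict(conn.execute(query).fetchall())
print(totals)  # {'frame': 1, 'rim': 2, 'spoke': 64, 'wheel': 2}
```

Each iteration joins the rows produced by the previous one back to the BOM table. The recursion stops once no part has children left.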

- Another area where Databricks had been lagging was geospatial data processing. With the introduction of Spatial SQL and Geo Data Types, migrating from platforms like PostGIS or other geospatial databases has become much more straightforward. This eliminates the need to rely on GeoPandas (limited to a single node) or Sedona (which requires complex configuration).

- In addition, multi-statement transactions are now supported in Databricks. Previously, certain data transformations, such as updating a Slowly Changing Dimension Type 2 (SCD2) table, required chaining multiple transactions, where a failure in the last one could leave bad data behind. With this new capability, a series of statements can run as a single atomic transaction: either all steps succeed, or none do.

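```sql
BEGIN TRANSACTION;

-- Promote valid orders from bronze to silver
INSERT INTO silver.orders
SELECT * FROM bronze.orders WHERE o_isvalid;

-- Remove the promoted orders from bronze (the subquery must return a single key column)
DELETE FROM bronze.orders
WHERE o_orderkey IN (SELECT o_orderkey FROM silver.orders);

COMMIT TRANSACTION;
```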
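The all-or-nothing guarantee is easiest to see when a step fails. The sketch below uses sqlite3 as a stand-in, not Databricks. The same insert-then-delete sequence runs inside one transaction, and a failure is forced between the two statements. The rollback then leaves both tables untouched. The flattened table names (bronze_orders, silver_orders), the sample rows and the simulated failure are hypothetical.

```python
import sqlite3

# isolation_level=None: autocommit mode, so BEGIN/COMMIT/ROLLBACK are issued explicitly.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE bronze_orders (o_orderkey INTEGER, o_isvalid INTEGER)")
conn.execute("CREATE TABLE silver_orders (o_orderkey INTEGER, o_isvalid INTEGER)")
conn.executemany("INSERT INTO bronze_orders VALUES (?, ?)", [(1, 1), (2, 0), (3, 1)])


def promote_valid_orders(fail_midway: bool) -> None:
    """Move valid orders from bronze to silver as one atomic unit."""
    conn.execute("BEGIN")
    try:
        conn.execute("INSERT INTO silver_orders SELECT * FROM bronze_orders WHERE o_isvalid")
        if fail_midway:
            raise RuntimeError("simulated failure between the two statements")
        conn.execute(
            "DELETE FROM bronze_orders "
            "WHERE o_orderkey IN (SELECT o_orderkey FROM silver_orders)"
        )
        conn.execute("COMMIT")
    except Exception:
        # Undo every statement since BEGIN, including the INSERT that already ran.
        conn.execute("ROLLBACK")
        raise


def counts() -> tuple:
    bronze = conn.execute("SELECT COUNT(*) FROM bronze_orders").fetchone()[0]
    silver = conn.execute("SELECT COUNT(*) FROM silver_orders").fetchone()[0]
    return bronze, silver


try:
    promote_valid_orders(fail_midway=True)
except RuntimeError:
    pass
after_failure = counts()  # (3, 0): the INSERT was rolled back

promote_valid_orders(fail_midway=False)
after_success = counts()  # (1, 2): both statements applied
```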

- Lastly, selective overwrite will help developers avoid writing large amounts of boilerplate code. It provides a flexible and efficient way to replace only a subset of a table, streamlining development and reducing complexity.

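```sql
-- Replace the rows in target_tbl whose date matches a date in source_tbl
-- (<=> is null-safe equality), then insert the source rows
INSERT INTO target_tbl AS t
REPLACE ON (t.date <=> s.date)
(SELECT * FROM source_tbl) s;
```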
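As we understand it, REPLACE ON deletes the target rows that match the condition for some source row and then inserts the source rows, as one atomic operation. The sketch below emulates those semantics with sqlite3 to show the kind of boilerplate the new syntax replaces. It illustrates the behaviour only and does not show how Databricks executes the statement. The helper name replace_on_date and the sample data are hypothetical. SQLite's IS operator is used because, like Spark SQL's <=>, it treats two NULLs as equal.

```python
import sqlite3

conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE target_tbl (date TEXT, amount INTEGER)")
conn.execute("CREATE TABLE source_tbl (date TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO target_tbl VALUES (?, ?)",
    [("2025-01-01", 10), ("2025-01-02", 20), ("2025-01-02", 21), (None, 5)],
)
conn.executemany("INSERT INTO source_tbl VALUES (?, ?)", [("2025-01-02", 99), (None, 7)])


def replace_on_date() -> None:
    """Hypothetical emulation of INSERT INTO target_tbl ... REPLACE ON (t.date <=> s.date)."""
    conn.execute("BEGIN")
    try:
        # Step 1: drop target rows whose date matches any source row (null-safe).
        conn.execute(
            "DELETE FROM target_tbl WHERE EXISTS "
            "(SELECT 1 FROM source_tbl s WHERE target_tbl.date IS s.date)"
        )
        # Step 2: insert all source rows.
        conn.execute("INSERT INTO target_tbl SELECT * FROM source_tbl")
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise


replace_on_date()
result = conn.execute("SELECT date, amount FROM target_tbl ORDER BY date, amount").fetchall()
print(result)  # [(None, 7), ('2025-01-01', 10), ('2025-01-02', 99)]
```

Both rows for 2025-01-02 and the NULL-dated row are replaced. The untouched date 2025-01-01 survives.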

Reverse ETL made easy

With the announcement of Lakebase, Databricks introduced its own fully managed Postgres database. Its key advantages include the separation of storage and compute as well as native integration with the Lakehouse. Lakebase is NOT a low-latency alternative to DBSQL for analytics. Instead, it focuses on delivering high-performance row-level operations supporting high queries per second (QPS) and low-latency reads and writes within applications. A prime use case is reverse ETL, a fancy word for bringing curated gold data back into operational applications.
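At its core, reverse ETL is a keyed upsert of curated rows into an operational table that applications then read row by row. The sketch below illustrates that pattern with sqlite3 standing in for a Postgres-compatible database such as Lakebase. The INSERT ... ON CONFLICT ... DO UPDATE upsert form shown is shared by SQLite (3.24+) and PostgreSQL. The customer_scores table, its columns and the "gold" rows are hypothetical. The code shows the access pattern, not Lakebase's own synchronization mechanism.

```python
import sqlite3

# Stand-in for an operational, Postgres-compatible database (hypothetical schema).
app_db = sqlite3.connect(":memory:")
app_db.execute("CREATE TABLE customer_scores (customer_id INTEGER PRIMARY KEY, churn_risk REAL)")
app_db.execute("INSERT INTO customer_scores VALUES (1, 0.10)")

# Curated rows from a hypothetical gold table in the lakehouse.
gold_rows = [(1, 0.35), (2, 0.80)]

# Keyed upsert: insert new customers, overwrite the score of existing ones.
with app_db:  # commits on success, rolls back on error
    app_db.executemany(
        """
        INSERT INTO customer_scores (customer_id, churn_risk) VALUES (?, ?)
        ON CONFLICT (customer_id) DO UPDATE SET churn_risk = excluded.churn_risk
        """,
        gold_rows,
    )

# Applications then perform low-latency point lookups by key.
score = app_db.execute(
    "SELECT churn_risk FROM customer_scores WHERE customer_id = ?", (1,)
).fetchone()[0]
print(score)  # 0.35
```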

Traditional reverse ETL solutions often rely on databases such as (unmanaged) Postgres, MySQL, Oracle, or SQL Server. These setups are typically complex to integrate, difficult to manage, and provide an outdated developer experience. Databricks positions Lakebase to overcome these challenges through a modern, fully managed approach. The real question, however, is what costs will come with this promise.

Solving the fragmented data stack problem one connector at a time

Lakeflow aims to unify the “holy trio” of data engineering: ingestion, transformation, and orchestration. With Lakeflow Connect, Databricks is actively expanding its library of connectors to popular data sources. Still, this vision can only be realized once Lakeflow Connect supports the broad range of connectors already available in tools like Fivetran, Azure Data Factory, or Airbyte. One particularly interesting feature to watch is Zerobus, a direct write API that enables event data to be pushed straight into the Lakehouse, simplifying ingestion for IoT, clickstream, telemetry, and similar use cases.

Another promising element is Declarative Pipelines, which allow users to design data processing pipelines with little to no code. While appealing on paper, the actual developer experience needs close examination before these pipelines are trusted with production-grade workloads. At this stage, its Jobs capability is the most mature and reliable component of Lakeflow and the strongest pillar of the framework today.
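The central idea behind declarative pipelines is that you declare each dataset and its inputs, and the engine works out the execution order. The sketch below illustrates that idea in plain Python using the standard library's graphlib (Python 3.9+). The `table` decorator, the registry and the dataset names are hypothetical. This is not the Lakeflow Declarative Pipelines API.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Tuple

# Registry of declared datasets: name -> (upstream dependencies, build function).
REGISTRY: Dict[str, Tuple[List[str], Callable[..., list]]] = {}


def table(*depends_on: str):
    """Hypothetical decorator: declare a dataset and its upstream inputs."""
    def register(fn: Callable[..., list]) -> Callable[..., list]:
        REGISTRY[fn.__name__] = (list(depends_on), fn)
        return fn
    return register


# Declarations can appear in any order; nothing runs yet.
@table("silver_orders")
def gold_revenue(silver_orders):
    return [sum(o["amount"] for o in silver_orders)]


@table("bronze_orders")
def silver_orders(bronze_orders):
    return [o for o in bronze_orders if o["amount"] > 0]


@table()
def bronze_orders():
    return [{"amount": 10}, {"amount": -1}, {"amount": 5}]


def run_pipeline() -> Dict[str, list]:
    """Resolve the dependency graph, then build each dataset after its inputs."""
    graph = {name: deps for name, (deps, _) in REGISTRY.items()}
    results: Dict[str, list] = {}
    for name in TopologicalSorter(graph).static_order():
        deps, fn = REGISTRY[name]
        results[name] = fn(*(results[d] for d in deps))
    return results


outputs = run_pipeline()
print(outputs["gold_revenue"])  # [15]
```

Because the order is derived from the declared dependencies, adding a new dataset means declaring it, not rewiring the orchestration.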

One Catalog to rule them all

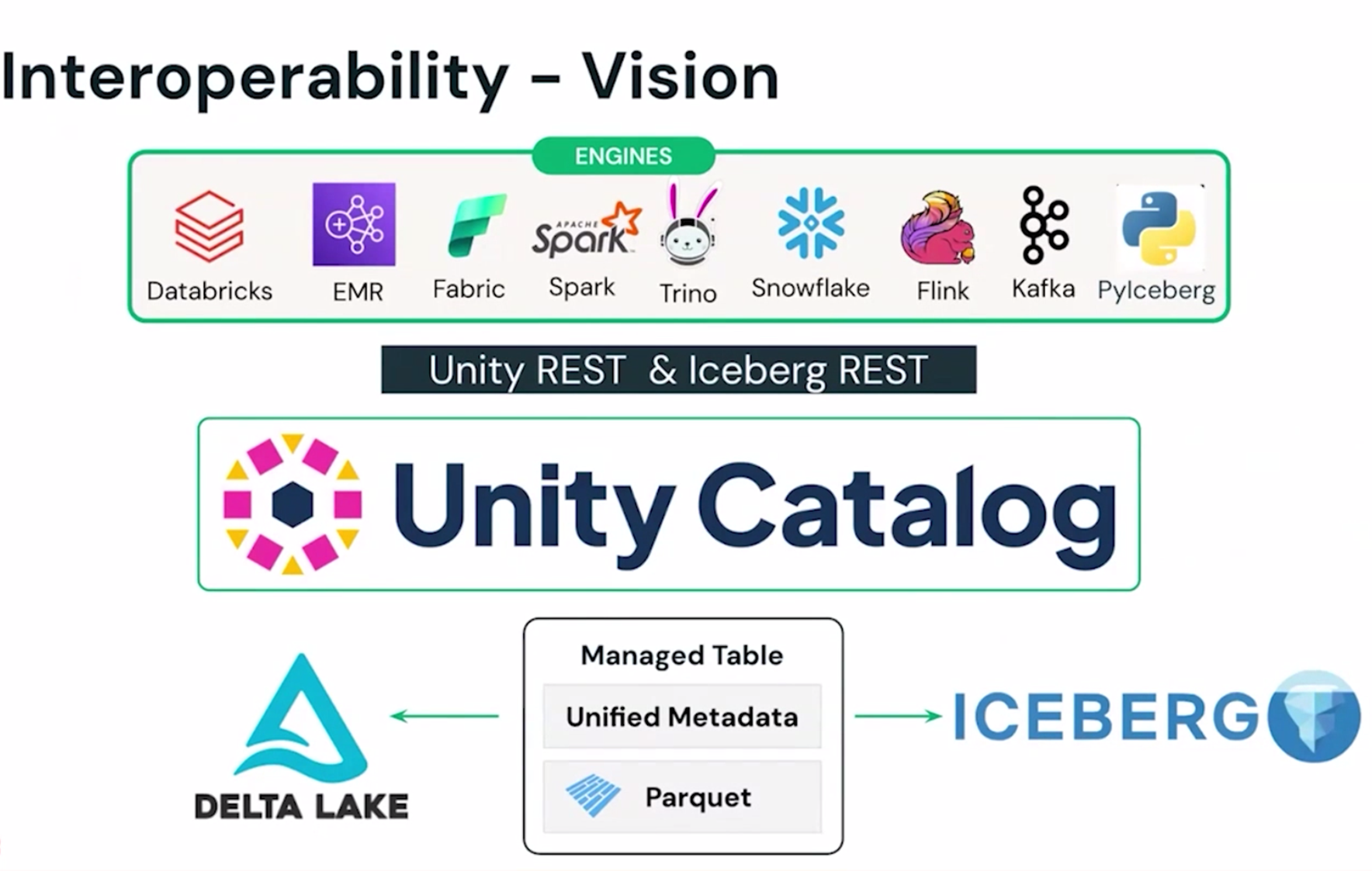

The company has continued to advance its vision of making Unity Catalog the central hub for interoperability across engines and open-source data formats. Two new features stand out in this effort: the introduction of BI Semantic Layers and the addition of Attribute-Based Access Control (ABAC).

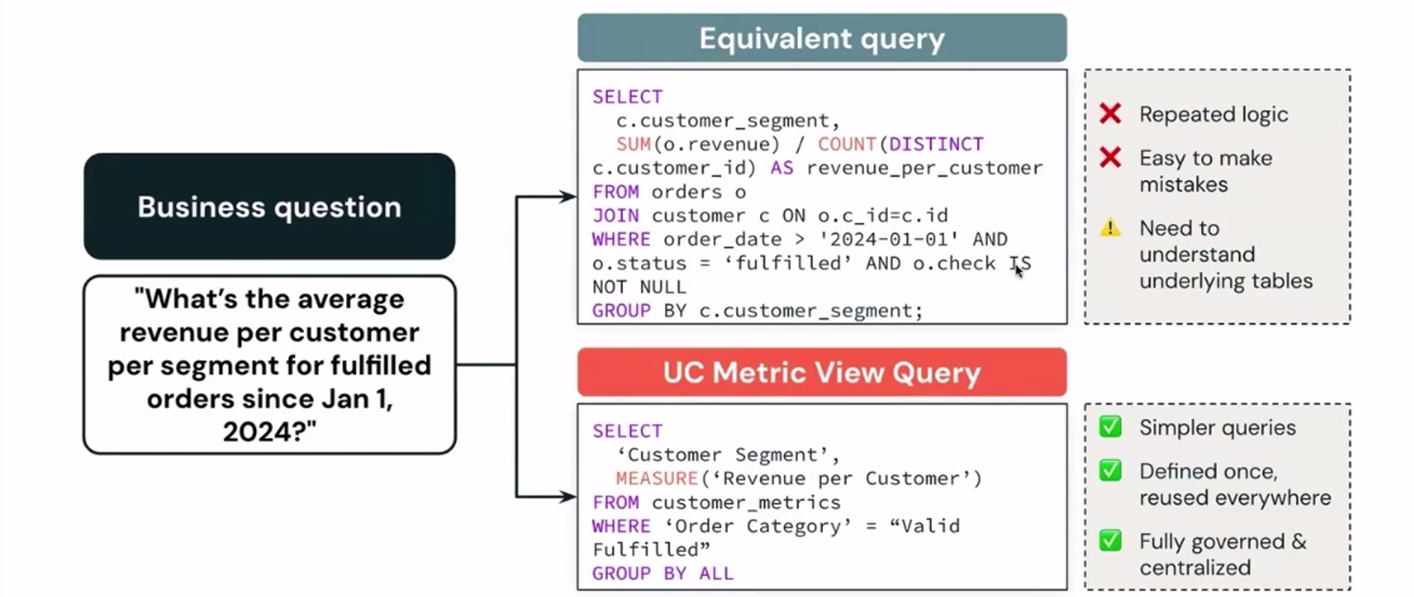

A recurring challenge in analytics is that business units often define metrics differently or use separate datasets to calculate them. This fragmentation creates inconsistencies and undermines trust in reporting. BI Semantic Layers aim to address this issue by providing a unified semantic foundation with centralized governance, ensuring reliability and consistency across the organization. At the same time, Databricks is betting on openness by offering access via REST API or SQL, enabling seamless integration with tools such as Tableau, Hex, or Sigma.

Business Semantics is currently limited to Metric Views. Metric Views, now in Public Preview, allow users to define their data models, measures, and dimensions once through either the UI or YAML files and then reuse them across queries and front-end tools (AI/BI Dashboards but also external tools like Tableau). This concept is similar to measures in Power BI: measures can be aggregated across dimensions without requiring explicit aggregation functions. Querying a Metric View is enabled by a new MEASURE column type and function. Meanwhile, Agent Metadata, expected to launch by the end of the year, will further enrich this framework with AI.

In short, Metric Views extend traditional SQL views by treating aggregation logic as a stand-alone data type. They automatically separate the calculation of aggregates from the grouping of dimensions, eliminating the need to define complex aggregations or maintain multiple views. This simplifies query development and ensures consistency across reports and dashboards.
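Separating aggregation from grouping matters most for ratio-style measures, which cannot be re-aggregated from pre-computed results. For example, averaging per-region averages gives a different answer than averaging over all orders. The sketch below uses plain Python with hypothetical sales data and a hypothetical `evaluate` helper. It defines each measure once as an aggregation over raw rows and evaluates it at whatever grouping is requested. It illustrates the concept only and is neither the Metric View YAML definition nor the MEASURE() query syntax.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Sequence, Tuple

Row = Dict[str, object]

# Hypothetical order-level data.
orders: List[Row] = [
    {"region": "EU", "product": "bike", "revenue": 100.0},
    {"region": "EU", "product": "helmet", "revenue": 20.0},
    {"region": "EU", "product": "helmet", "revenue": 30.0},
    {"region": "US", "product": "bike", "revenue": 300.0},
]

# Measures are defined once, as aggregations over the underlying rows.
MEASURES: Dict[str, Callable[[List[Row]], float]] = {
    "total_revenue": lambda rows: sum(r["revenue"] for r in rows),
    "avg_order_value": lambda rows: sum(r["revenue"] for r in rows) / len(rows),
}


def evaluate(measure: str, group_by: Sequence[str]) -> Dict[Tuple, float]:
    """Group raw rows by the requested dimensions, then apply the measure per group."""
    groups: Dict[Tuple, List[Row]] = defaultdict(list)
    for row in orders:
        groups[tuple(row[d] for d in group_by)].append(row)
    return {key: MEASURES[measure](rows) for key, rows in groups.items()}


by_region = evaluate("avg_order_value", ["region"])
overall = evaluate("avg_order_value", [])

# Naively averaging the per-region averages gives the wrong overall figure.
naive = sum(by_region.values()) / len(by_region)
print(by_region)  # {('EU',): 50.0, ('US',): 300.0}
print(overall)    # {(): 112.5}
print(naive)      # 175.0
```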

ABAC combines attributes, policies, and inheritance to automate and scale access control. For example, you might define a policy such as ‘Only admins can view assets tagged with PII’. With data classification, you can automatically tag all columns containing PII. The policy then leverages this attribute to apply the access control rule to all tables in the catalog, ensuring consistent enforcement without manual configuration.
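The mechanics of that example fit in a few lines. Tags are attributes on columns, and a single policy matches on the tag rather than on named tables. Attaching the policy to a catalog makes it apply to everything inside that catalog, so newly classified columns are covered automatically. The Python below is a conceptual illustration with hypothetical names (Column, Policy, visible_columns) and hypothetical catalog paths. It is not Unity Catalog's ABAC policy syntax.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List


@dataclass(frozen=True)
class Column:
    path: str  # hypothetical "catalog.schema.table.column" name
    tags: FrozenSet[str] = field(default_factory=frozenset)


@dataclass(frozen=True)
class Policy:
    scope: str          # object the policy is attached to, e.g. a catalog
    tag: str            # attribute the policy matches on
    allowed_group: str  # only this group may see matching columns

    def applies_to(self, column: Column) -> bool:
        # Inheritance: a policy on "sales" covers every object under "sales."
        in_scope = column.path == self.scope or column.path.startswith(self.scope + ".")
        return in_scope and self.tag in column.tags


def visible_columns(columns: List[Column], policies: List[Policy], groups: FrozenSet[str]) -> List[str]:
    """Return the columns a user in `groups` may see under every applicable policy."""
    return [
        c.path
        for c in columns
        if all(p.allowed_group in groups for p in policies if p.applies_to(c))
    ]


# One policy on the whole catalog: only admins can view assets tagged with PII.
policies = [Policy(scope="sales", tag="pii", allowed_group="admins")]

# Tags as an automated classification step might assign them (hypothetical).
columns = [
    Column("sales.crm.customers.email", frozenset({"pii"})),
    Column("sales.crm.customers.segment"),
    Column("sales.web.clicks.ip_address", frozenset({"pii"})),
]

analyst_view = visible_columns(columns, policies, frozenset({"analysts"}))
admin_view = visible_columns(columns, policies, frozenset({"admins"}))
print(analyst_view)     # ['sales.crm.customers.segment']
print(len(admin_view))  # 3
```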

Agent Bricks to help you build, evaluate and optimize AI agents

Gen AI has been a major focus at Databricks (and basically everywhere) in recent months. In June, the company announced Agent Bricks, which builds auto-optimized agents that leverage your data. The Beta release already includes different types of agents, supporting both system-to-system interactions and human-in-the-loop workflows.

Because serverless compute is expensive, running agents on Databricks is generally more costly than running them in environments like Azure. The key question is whether the promised ease of use and auto-optimization features of Agent Bricks can offset these higher costs.

AI/BI Dashboards: From uneven adoption to broad business impact

Adoption of AI/BI in Databricks has been promising, but uneven. While a few accounts are seeing real momentum, most are still stuck at fewer than ten active users. The takeaway? We’re just scratching the surface, and the opportunity is massive.

To grow AI/BI from pilot projects into game-changing business value, three things matter most:

- Secure a champion and set the vision. AI/BI thrives when there’s someone pushing it forward. Pair that leadership with UC metric views to “shift semantics left” and create trusted sources of truth.

- Land before you expand. With automated identity management (AIM) in Azure and the arrival of Databricks One (Public Preview), onboarding new users is easier than ever. Business users don’t want clusters or notebooks; they want answers. Databricks One gives them that, with dashboards, Genie, and apps in a clean, consumer-grade UI.

- Accelerate consumption by expanding reach. Scale governance, embrace the new consumer access entitlement, and extend insights into where business users already work, like embedding dashboards in Salesforce.

The bottom line: AI/BI in Databricks isn’t just about reports. It’s about building a movement: one champion, one win, one expansion at a time, until every business user can tap into AI with zero friction.

Databricks One is a brand-new, business-friendly experience designed to put AI and BI into the hands of every user, not just data teams. With a clean, consumer-grade interface, business users can explore AI/BI Dashboards, ask natural-language questions with Genie, and tap into tailored Databricks Apps without being confused by Machine Learning or Data Engineering interfaces. Backed by Unity Catalog governance and simple onboarding via the new consumer access entitlement, Databricks One makes insights fast, secure, and intuitive. The full experience entered public preview last week.