In this insight I will guide you through what Power BI dataflows are all about and how you can easily setup a "date" Power BI dataflow, which you can reuse in all your datasets.

What are Power BI dataflows?

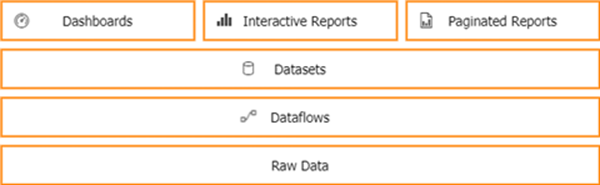

Power BI dataflows are Power Query processes running in the cloud, with the same set of data preparation functionalities, data source connectors, gateways, and transformations. The dataflows are created and managed in the online Power BI service and they exist next to Power BI datasets, dashboards, and reports in a Power BI workspace.

With Power Query, and thus Power BI dataflows, you can develop do-it-yourself ETL processes, which you can use to connect with business data from various data sources. In no time you can start your own Proof of Concept and share Datasets with other people.

Power BI dataflows is positioned, by Microsoft, NOT to be a replacement for your Data Warehouse architecture nor your ETL procedures, but more as an addition to your analytics environment.

Why should I use Power BI dataflows?

- The biggest advantage is reusability: Power BI dataflows can be shared with other people across the Power BI environment. In case you have a library full of Power Queries ("M" scripts) or if you find yourself copying the scripts between Power BI datasets, then you should consider creating Power BI dataflows.

- Power BI dataflows is a low-code/no-code solution. You do not need to write a single line of code to create your data transformations. Dataflows are created using Power Query Online, a powerful and friendly transformation tool already familiar to tens of millions of Excel and Power BI users. The "M" scripts are available for review or for changes, but you do not need to write any of it!

- These dataflows can be made available in a shared Power BI Workspace, e.g. owned by a specialist team, who creates validated entities which can be used throughout the enterprise. The creation of validated customer golden records can be incorporated into several new datasets, who are built on a self-service base by individuals or teams.

- Dataflows are designed to work with large amounts of data. You do not even need the Power BI Desktop client to create a Power BI dataflow, because you have the ability to perform the data preparation in the Power BI portal.

- Dataflows, which require different refresh timings, can all be scheduled individually. When you’re using Power BI Premium/Embedded capacity, you can also enable incremental refresh for Power BI dataflows entities that contain a DateTime column.

Actually there's no reason to hold back Power BI dataflows usage, but keep in mind to create agreements (best practices) on how you will organize the dataflows environment and how people will consume datasets in a well-governed way.

Where is the Power BI dataflows output stored?

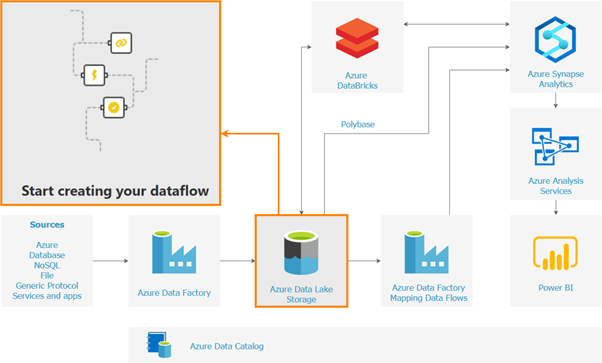

At the moment you have 2 scenarios for storing the data from Power BI dataflows:

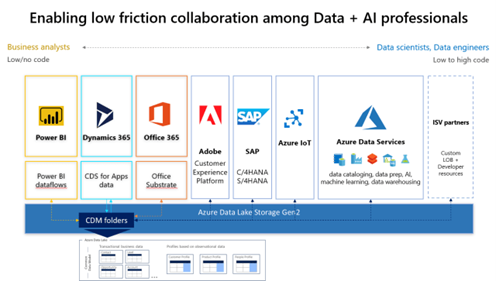

- an Azure Data Lake storage, internally managed by Power BI. When the dataflows are executed, the resulting dataset is stored in the underlying CDM (Common Data Model (*) - see explanation at end of this Insight) folder in the data lake storage. The Power BI Service hides the details of this Azure Data Lake Storage.

- or a bring-your-own Azure Data Lake Storage. When Power BI dataflows is configured with bring-your-own ADLS, then the data is written into your own Azure Subscription. Of course, you have to pay Azure fees for the Azure Data Lake Storage that you're using

When dataflows are used standalone (Azure Data Lake Storage managed by Power BI), the storage limits are 10 GB of storage per PRO license and 100 TB of storage per Premium/Embedded license, but if you're integrating with Azure Data Lake Storage then there's no limit.

The biggest reason to choose for Bring-Your-Own Data Lake Storage is that the data is not limited for usage within Power BI, but you can use it everywhere. This means that the produced data can be used by data scientists or by data engineers using Azure Data Factory or Azure Databricks.

What are the benefits of Power BI Premium/Embedded, compared to Power BI Pro?

- In Power BI Premium/Embedded you can schedule the refresh 48 times, and in Power BI Pro 8 times a day.

- Because you can prepare big datasets with dataflows, you can load data by incrementally refreshing Power BI dataflows.

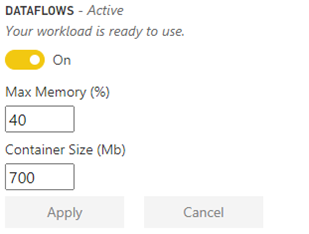

- Performance: transformations are executed in parallel, which leads to faster entity refreshes.

- Entities stored in other workspaces, can by refreshed by using linked entities. Linked entities are entities in one Power BI dataflow that reference entities in another dataflow.

- If you want to create a new entity based on an existing entity, then you can use computed entities in dataflows. Computed entities are entities that reference linked entities, and which rely on in-storage calculations for performance and scale.

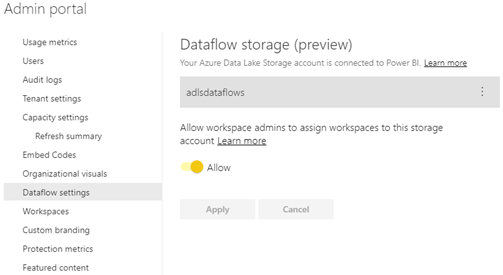

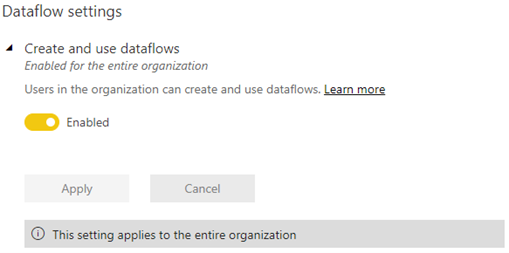

(!) Don't forget to enable dataflows in the Power BI Admin Portal if you're running on Premium/Embedded capacity.

Date dimension?

Almost every analysis makes use of a date dimension to slice the dataset by month, by quarter, by year, or to do some time intelligent calculations. This, particularly, makes the date dimension a very good candidate to create a Power BI dataflow and reuse them in your analyses.

What do you need to get started creating a common date Power BI Dataset?

- Power BI Pro license or Power BI Premium license

- Enable dataflows

- Power Query skills to create the date dimension

How do I create a Power BI dataflow?

- Create a Power BI dataflow –

- Add the date M script

| let StartDate = #date(StartYear,1,1), EndDate = #date(EndYear,12,31), NumberOfDays = Duration.Days( EndDate - StartDate ), Dates = List.Dates(StartDate, NumberOfDays+1, #duration(1,0,0,0)), #"Converted to Table" = Table.FromList(Dates, Splitter.SplitByNothing(), null, null, ExtraValues.Error), #"Renamed Columns" = Table.RenameColumns(#"Converted to Table", {{"Column1", "FullDateAlternateKey"}}), #"Changed Type" = Table.TransformColumnTypes(#"Renamed Columns", {{"FullDateAlternateKey", type date}}), #"Inserted Year" = Table.AddColumn(#"Changed Type", "Year", each Date.Year([FullDateAlternateKey]), type number), #"Inserted Month" = Table.AddColumn(#"Inserted Year", "Month", each Date.Month([FullDateAlternateKey]), type number), #"Inserted Month Name" = Table.AddColumn(#"Inserted Month", "Month Name", each Date.MonthName([FullDateAlternateKey]), type text), #"Inserted Quarter" = Table.AddColumn(#"Inserted Month Name", "Quarter", each Date.QuarterOfYear([FullDateAlternateKey]), type number), #"Inserted Week of Year" = Table.AddColumn(#"Inserted Quarter", "Week of Year", each Date.WeekOfYear([FullDateAlternateKey]), type number), #"Inserted Week of Month" = Table.AddColumn(#"Inserted Week of Year", "Week of Month", each Date.WeekOfMonth([FullDateAlternateKey]), type number), #"Inserted Day" = Table.AddColumn(#"Inserted Week of Month", "Day", each Date.Day([FullDateAlternateKey]), type number), #"Inserted Day of Week" = Table.AddColumn(#"Inserted Day", "Day of Week", each Date.DayOfWeek([FullDateAlternateKey]), type number), #"Inserted Day of Year" = Table.AddColumn(#"Inserted Day of Week", "Day of Year", each Date.DayOfYear([FullDateAlternateKey]), type number), #"Inserted Day Name" = Table.AddColumn(#"Inserted Day of Year", "Day Name", each Date.DayOfWeekName([FullDateAlternateKey]), type text) in #"Inserted Day Name |

- Refresh the Power BI dataflow

How to start using the newly created date dataflow?

Now that I've created the date Power BI dataflow, other users can easily reuse the Date entity in their own datasets, without having to configure anything.

* CDM or Common Data Model : The Common Data Model is a shared model embedded in various Microsoft technologies. CDM data is stored as JSON files in Azure Data Lake storage and enables master data exchange and integrations between Power BI, Power Apps, Dynamics365, …, and many other Azure Data Services. For more explanation, see also the Common Data Model.

Keen to know more?

Continue reading or contact us to get started: