AI beating world champions in complex strategy games such as chess or Go? Have you ever wondered how this is possible?

The next few paragraphs will tackle the underlying training technology, namely Reinforcement Learning, why it can be interesting in various business domains and how to get started with training your own smart “agents”.

What is reinforcement learning?

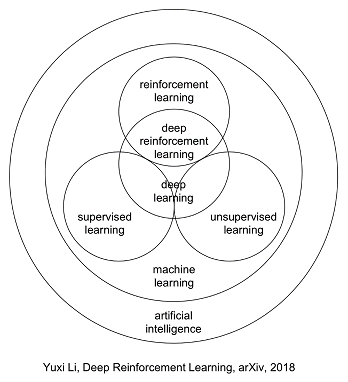

Artificial intelligence, machine learning, deep learning… Odds are high that most of these terms ring a bell. But what exactly is the relationship or difference between them? And where does Reinforcement Learning come into play? Before elaborating on Reinforcement Learning, some additional context is useful.

- Artificial Intelligence (or AI) is an overarching, broad term for machines that are programmed to act or think like a human. This does not always have to be complex. For example, a machine could be programmed to win easy games like “tic-tac-toe” with if and else rules (if player 1 starts out with move A, react with move B) and still be considered AI.

- However, you could also train machines to make their own decisions by leveraging Machine Learning algorithms. Machine learning is thus a subset of AI, enabling machines to become smarter through experience, without being explicitly programmed to take certain actions. Machine Learning covers various ways to train machines in tasks ranging from predicting the weather to classifying customers or beating a Go world champion.

- Reinforcement learning is one of the three major areas of machine learning, next to supervised and unsupervised learning. With reinforcement learning, we allow machines (or agents) to learn actively from an environment through their actions and the continuous feedback they receive.

How does reinforcement learning work?

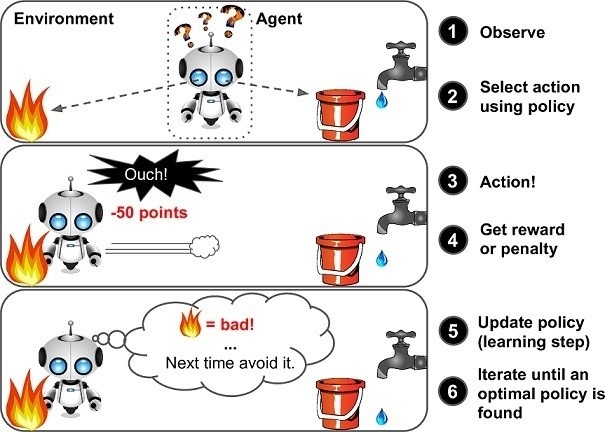

In reinforcement learning, the AI learns from its environment through actions and the feedback it gets. The agent, our algorithm, runs tens, thousands or sometimes millions of experiments. In each one the agent observes the situation > selects an action > performs the action > observes the feedback (reward/penalty). Learning by doing, our agent becomes smarter about which actions to take in order to reach its goal.
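The observe > select > act > feedback cycle can be sketched in a few lines of Python. This is only an illustration under assumptions: the `CorridorEnv` class, its rewards and the `run_episode` helper are made up for this example and are not part of any library.

```python
import random


class CorridorEnv:
    """Toy environment (hypothetical): walk from cell 0 to the goal at the far end."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position

    def step(self, action):
        # action 0 moves left, action 1 moves right; the walls clamp the position
        move = 1 if action == 1 else -1
        self.position = min(max(self.position + move, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 1.0 if done else -0.1  # small penalty per step, reward at the goal
        return self.position, reward, done


def run_episode(env, choose_action, max_steps=100):
    """Observe -> select an action -> act -> observe the feedback, until done."""
    obs = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = choose_action(obs)
        obs, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward


rng = random.Random(0)
random_score = run_episode(CorridorEnv(), lambda obs: rng.choice([0, 1]))
always_right_score = run_episode(CorridorEnv(), lambda obs: 1)
print(random_score, always_right_score)
```

An agent that acts randomly wastes steps and collects penalties, while the "always go right" behaviour reaches the goal as fast as possible. Training is the process of getting from the first kind of behaviour to the second using only the feedback.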

Although the principle is simple, there are important considerations when building a reinforcement learning agent:

- We need to set rules to limit an agent’s range to only feasible actions. Your queen in chess can’t make every move. As such, defining the allowed actions is an important configuration step: your agent will explore all the actions available to it to reach its predefined goal and, if the rules are wrongly defined, surprising results can occur. If the rules aren’t set correctly, an agent could train itself to play illegal chess moves.

- An agent starts without bias or any historical knowledge and learns over time. At first the agent explores through random actions. As it does so, the environment provides feedback that encourages good actions and discourages bad ones. The agent gradually learns from its random actions, and its decisions become more and more based on previous experience.

- We need to introduce noise to ensure that an agent keeps exploring. Noise means that, for a small share of its actions, the agent tries a different action than the one it has learned to prefer. This technique helps agents learn more quickly by trying a wider variety of actions, and keeps them exploring towards the maximal reward.
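One simple way to add this kind of exploration is the epsilon-greedy rule: with a small probability epsilon the agent picks a random action, otherwise it picks the action it currently believes is best. The sketch below applies it to a made-up three-action problem. The function names, reward values and parameters are assumptions for illustration, and other noise schemes exist.

```python
import random


def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon pick a random action, otherwise the best known one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def train_bandit(true_means, epsilon, steps=5000, seed=0):
    """Estimate the average reward of each action from noisy feedback."""
    rng = random.Random(seed)
    q_values = [0.0] * len(true_means)  # estimated reward per action
    counts = [0] * len(true_means)      # how often each action was tried
    for _ in range(steps):
        action = epsilon_greedy(q_values, epsilon, rng)
        reward = rng.gauss(true_means[action], 0.5)  # noisy feedback
        counts[action] += 1
        # incremental average of the rewards seen for this action
        q_values[action] += (reward - q_values[action]) / counts[action]
    return q_values, counts


q_values, counts = train_bandit(true_means=[0.2, 0.5, 0.9], epsilon=0.1)
best_action = max(range(len(q_values)), key=lambda a: q_values[a])
print(best_action, counts)
```

Because a share of the actions stays random, every action keeps being tried now and then, so the agent can still discover that an action it rarely chose is in fact the better one.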

To manage this training process for more complex tasks such as learning chess or Go, Reinforcement Learning is combined with deep learning techniques such as deep neural networks. Those networks are loosely inspired by the human brain and are well suited to complex tasks.

AlphaGo is a reinforcement learning model trained by iteratively playing Go. In 2016, the AlphaGo algorithm won against Go master Lee Se-dol.

What's key to remember on reinforcement learning:

- In reinforcement learning, an agent learns through iteration and continuous feedback.

- Agents learn to act with the end goal in mind. If winning a chess game means sacrificing a queen five moves before the victory, the agent will do so. A human probably would not.

- Agents are not biased or influenced by preconceptions; they rely only on the actual feedback they receive.

Why should businesspeople care?

Reinforcement Learning is mostly known as the research area that allows machines to beat humans at games. But how could this possibly be interesting for business practices?

Reinforcement learning can be applied in all domains and processes where it is not feasible to hand-code the agent's behaviour yourself. On the one hand, new business opportunities arise in fields such as robotics, text mining or trading. Some examples:

- Train robots to pick up delicate items that are randomly placed

- Train an agent to automatically summarize long, incoming reports

- Train an agent to make the best long-term trade investments for you (no bias - emotionally detached from the money)

On the other hand, existing business areas could be enhanced or supported, such as logistics, finance or even medicine. Some examples:

- Train an agent to support warehouse managers in optimizing space in warehouses

- Train an agent to automate the planning for truck deliveries on a large scale

- Train an agent to support management in making important strategic financial decisions

- Train an agent to support a health practitioner in prescribing the best possible treatment for a given person

Beyond these specific business use cases, Reinforcement Learning is a good fit when you need to achieve a certain goal without knowing exactly which steps are needed to reach it. That is why Reinforcement Learning applications can come up with new solutions that look very creative to humans. For example, Google DeepMind's AlphaGo surprised the Go community with highly unconventional moves, such as move 37 in its second game against Lee Se-dol.

Can you think of an application for your business?

How to get started and create your own smart agent?

Before putting the theory to the test, note that you will need two things to create your own smart agent:

- a solid Python background

- understanding of the various Reinforcement Learning algorithms

In Reinforcement Learning an agent trains itself by receiving continuous feedback on its actions. Therefore, to be able to train an agent, an environment is needed in which your agent can develop itself. Some getting-started environments are provided by an online toolkit called OpenAI Gym in which you can create your own software agent.

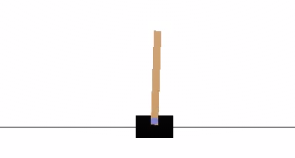

OpenAI Gym offers multiple arcade playgrounds of games all packaged in a Python library, to make RL environments available and easy to access from your local computer. Available environments range from easy – balancing a stick on a moving block – to more complex environments – landing a spaceship. The library even includes various games from Atari, so you can try to create an agent that beats your own high score!

Using OpenAI Gym we can configure and play with multiple environments, for example to teach our AI how to balance a stick or how to land a spaceship.

Getting started with OpenAI Gym

The first step is to install the Gym Python library:

```shell
pip install gym
```

It is best to work in a virtual Python environment, in which you install all necessary packages and dependencies for your chosen environments. The Lunar Lander environment used below, for example, needs the Box2D extra (pip install gym[box2d]). For details on what to install for which environment, I recommend taking a look at the various environments available on GitHub.

```python
# Set up an environment for the Lunar Lander game
import gym

env = gym.make('LunarLanderContinuous-v2')
```

Next, define the different input parameters of the agent.

```python
# Agent is the DDPG agent class defined in a separate script (not shown here)
agent = Agent(alpha=0.000025, beta=0.00025, input_dims=[8], tau=0.001, env=env,
              batch_size=64, layer1_size=400, layer2_size=300, n_actions=2,
              chkpt_dir='tmp/ddpg_final1')
```

Create a loop in which the agent can train itself through many iterations.

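```python
import numpy as np

score_history = []  # total score of every episode

# Note: this loop uses the classic Gym API (gym < 0.26), where reset()
# returns the observation and step() returns four values.
for i in range(3000):
    obs = env.reset()
    done = False
    score = 0
    while not done:
        act = agent.choose_action(obs)
        new_state, reward, done, info = env.step(act)
        agent.remember(obs, act, reward, new_state, int(done))
        agent.learn()
        score += reward
        obs = new_state
        env.render()
    score_history.append(score)
    print('episode ', i, 'score %.2f' % score,
          'trailing 100 games avg %.3f' % np.mean(score_history[-100:]))
```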

The previous snippet of code consists of two loops. The first loop, the for loop, iterates through multiple episodes, with every episode starting with a reset environment and a score of 0.

```python
for i in range(3000):
    obs = env.reset()
    done = False
    score = 0
```

The second loop, the while loop, remains active for the whole duration of one episode. Every fraction of a second the agent needs to react to the changing environment. Therefore, the agent first chooses an action given the current state of the environment.

```python
act = agent.choose_action(obs)
```

Next, the agent's action triggers a change in the environment and will lead to a direct reward or penalty.

```python
new_state, reward, done, info = env.step(act)
```

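Then, to learn from its actions, the agent uses the remember and learn functions: remember stores the old environment state, the action, the reward, the new environment state and whether the episode has ended, and learn then uses the stored experience to update the agent's neural networks.

```python
agent.remember(obs, act, reward, new_state, int(done))
agent.learn()
```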
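The agent code itself is not shown in this article, so what follows is only a guess at what sits behind remember: a minimal replay buffer, a common building block of DDPG agents. The `ReplayBuffer` class, its `sample` method and its parameters are illustrative assumptions, not the actual implementation.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size memory of (state, action, reward, new_state, done) transitions."""

    def __init__(self, max_size=10000, seed=None):
        self.memory = deque(maxlen=max_size)  # the oldest transitions drop out first
        self.rng = random.Random(seed)

    def remember(self, state, action, reward, new_state, done):
        self.memory.append((state, action, reward, new_state, done))

    def sample(self, batch_size):
        # a learn() step would typically train on a random mini-batch like this one
        batch_size = min(batch_size, len(self.memory))
        return self.rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


buffer = ReplayBuffer(max_size=3, seed=0)
for step in range(5):
    buffer.remember(state=step, action=0, reward=1.0,
                    new_state=step + 1, done=int(step == 4))
batch = buffer.sample(batch_size=2)
print(len(buffer), batch)
```

Sampling random mini-batches from past experience, instead of learning only from the latest step, is what the batch_size=64 parameter of the agent above would control in such a design.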

Lastly, the reward is added to the total score and the new state becomes the current state, preparing the agent for another iteration of the while loop.

```python
score += reward
obs = new_state
```

All the functions that the agent uses in this main script should be defined in a separate, dedicated Python script, where the neural networks behind the choose_action and learn functions are configured and built. For the Lunar Lander environment, we used a continuous action space, meaning that the agent's actions are expressed as continuous values (here between -1 and 1) instead of a choice from a fixed set of discrete actions. To tackle this kind of environment, we used a DDPG (deep deterministic policy gradient) algorithm. Four neural networks form the basis of DDPG: an actor, a critic, a target actor and a target critic. Each network has a different role:

- The actor decides on the next best action

- The critic evaluates the actions and trains the actor in taking a better decision next time

- Both target networks are time-delayed copies of the above. They greatly improve stability in learning.
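The "time-delayed copy" idea is usually implemented as a soft update: after every learning step, each target weight moves a small fraction tau towards the corresponding weight of the online network. The sketch below shows this on plain lists of numbers standing in for network weights. The `soft_update` helper is hypothetical, and tau=0.001 simply mirrors the value passed to the agent above.

```python
def soft_update(target_weights, online_weights, tau):
    """Move each target weight a fraction tau towards the online weight."""
    return [tau * w + (1.0 - tau) * t
            for t, w in zip(target_weights, online_weights)]


online = [1.0, -2.0, 0.5]  # stand-in for the actor's (or critic's) weights
target = [0.0, 0.0, 0.0]   # stand-in for the matching target network

for _ in range(1000):
    target = soft_update(target, online, tau=0.001)
print(target)
```

With such a small tau the target networks trail well behind the online networks, which keeps the values the critic learns from relatively steady between updates.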

We won't go further into the specific agent code, but with the above instructions you have all the basics to get started (feel free to contact us if you would like the code).

At element61 we developed a small demo applying reinforcement learning with OpenAI Gym on the Lunar Lander environment. Take a look at our demo:

Click to go to our reinforcement demo

Key requirements to get started

- Do your research on the gym environments. First, fully understand how the environment works, how the actions are scored and what the dimensions are of your in- and output variables, before thinking about algorithms.

- Understand the principles. Understand how Reinforcement Learning algorithms are structured and how that structure should be reflected in code.

- Start with the basics. First try to solve an easy environment with few dimensions and a discrete action space before diving into a complex continuous action space.

- The internet is your best friend. OpenAI Gym is a well-known toolkit, so a lot of code is available to get you going in any given environment.
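To give an idea of what "starting with the basics" can look like, here is a minimal sketch of tabular Q-learning, a classic algorithm for small, discrete problems. It runs on a made-up six-cell corridor rather than a Gym environment, and the function names, rewards and hyperparameters are assumptions chosen for illustration.

```python
import random

N_STATES = 6      # cells 0..5, the goal is the last cell
ACTIONS = [0, 1]  # 0 = move left, 1 = move right


def step(state, action):
    """Toy dynamics: move one cell, small penalty per step, reward at the goal."""
    new_state = min(max(state + (1 if action == 1 else -1), 0), N_STATES - 1)
    done = new_state == N_STATES - 1
    return new_state, (1.0 if done else -0.01), done


def train(episodes=500, alpha=0.1, gamma=0.95, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # one value per (state, action)
    for _ in range(episodes):
        state = 0
        for _ in range(200):  # cap on the episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                # exploit, breaking ties at random
                best = max(q[state])
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            new_state, reward, done = step(state, action)
            # Q-learning update: nudge the estimate towards reward + discounted future value
            future = 0.0 if done else gamma * max(q[new_state])
            q[state][action] += alpha * (reward + future - q[state][action])
            state = new_state
            if done:
                break
    return q


q_table = train()
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(greedy_policy)
```

The whole "brain" of this agent is a small table, which makes it easy to inspect what was learned before moving on to neural networks and continuous action spaces.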

Now the only thing left to do is write your algorithm and train your agent! Good luck and have fun!