By launching Gemini, Google emerged on the Gen AI field like a 600-pound contestant in a sumo wrestling match. With flashy demos and astounding performances on various foundation model benchmarks, Google seems to be back in the race for AI dominance. In this post, we aim to shed light on what Gemini actually entails and whether or not it is as great as Google wants us to believe.

What is Google Gemini?

But first things first: what is Google Gemini all about? According to Google, Gemini is the first Generative AI model that is natively multimodal. This means that instead of training models for different modalities separately—like text, vision, and speech—and stitching those models together to obtain multimodality, Gemini was trained to be multimodal from the ground up.

According to Google, one of the benefits of going in this direction is the ability to preserve nuance in more conceptual and complex tasks. Say you want to translate spoken Chinese into spoken Dutch using the traditional Generative AI approach (read: GPT-4). In that scenario, your speech is most likely converted to Chinese text, the text is translated to Dutch text, and the Dutch text is then converted back to speech. During these steps, a lot of context and conceptual information, like intonation and nuances in the meaning of different words, might get lost. A truly multimodal model can get rid of these intermediary steps and translate the Chinese speech to Dutch speech in one go, with no separate speech-to-text or text-to-speech stage. In essence, a natively multimodal model is an anything-to-anything model: it can transform input from any modality directly into any other modality.

Another benefit linked to this “multimodal first” approach is positive transfer. Researchers at Google discovered that while they were finetuning the Gemini model for certain modalities, say vision, the model also improved at other modalities, say text. This suggests that a model trained on multiple modalities at the same time has the potential to outperform Gen AI models that specialize in a single modality, say video.

Finally, the Gemini cream comes in 3 different flavors: Ultra, Pro, and Nano. Let’s have a look at what you need to know about these different variations:

- Ultra: “The largest and most capable model for highly complex tasks” – Google

  - This model will be released early next year

  - Will not be available in the UK and Europe due to regulatory reasons

- Pro: “The best model for scaling across a wide range of tasks” – Google

  - Quite similar to GPT-3.5

  - Often compared to GPT-4 in terms of performance, as Gemini Ultra is not yet available to the public. GPT-4 seems to outperform Gemini Pro.

  - For the moment, it can only respond with text and code (and not yet with what you see in the demos from Google)

  - Starting December 13th, developers and enterprise customers can start using Gemini Pro via the Gemini API

  - Bard uses Gemini Pro in most countries except the UK and EU countries

- Nano: “Most efficient for on-device tasks” – Google

  - In other words: Nano is meant for mobile usage

  - Gemini Nano is coming to the Pixel 8 Pro with features such as summarization and smart reply

But how good is it?

Well, let me break it to you: if you have seen the demos or visited the launch page, you have probably been subjected to what we call the maximum marketing hype. So allow me to paint you a more nuanced picture: let’s talk about those demos and benchmarks and see what they truly represent.

Let’s first have a look at the cool stuff: the demos! While there are multiple demos to critique or “debunk”, let’s quickly talk about one particular demo and show how you can view Google's marketing efforts through a more realistic set of goggles. In the demo, of which a screenshot is included below, a person hides a piece of paper under one of 3 plastic cups. After some shuffling, the Gemini model seems to guess correctly which cup the paper is under. In Generative AI terms, this demo would lead you to believe that Gemini is capable of complex reasoning on video in real time. However, this is far from how the experiment actually went.

The real test happened by taking pictures of different stages of the process: one for hiding the paper in one cup, one for shuffling individual cups, and so on. Then the model was instructed to guess under which cup the paper was based on those pictures by using a technique called few-shot prompting. In essence, the model was shown some different examples of correct solutions when shuffling the cups and used those examples to guess the location of the paper after receiving the pictures taken from the demo. In other words, not real-time and not video! Another interesting quirk is the fact that the model seemed to make mistakes when including “fake passes” – or switching 2 cups that do not contain the paper. Also, when trying this exact approach with GPT-4, the results were quite similar to those of Gemini. In other words, be aware of the fact that these videos are edited for maximum marketing value and look at them with a “this is the potential of these models”-mindset.
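To make the idea of few-shot prompting a bit more concrete, here is a minimal sketch of how such a prompt could be assembled. It is not Google's actual prompt: the real test used photos, while this sketch uses short text descriptions as a stand-in, and the names `Example` and `build_few_shot_prompt` are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One solved example: a description of the shuffle and the correct cup."""
    moves: str
    answer: str


def build_few_shot_prompt(examples: list[Example], query_moves: str) -> str:
    """Concatenate the solved examples, then append the unsolved query."""
    parts = ["The paper starts under one cup. Track it through the swaps."]
    for ex in examples:
        parts.append(f"Moves: {ex.moves}\nAnswer: {ex.answer}")
    # The last block leaves the answer empty so the model has to complete it.
    parts.append(f"Moves: {query_moves}\nAnswer:")
    return "\n\n".join(parts)


# Hypothetical examples, written as text instead of the photos used in the demo.
examples = [
    Example("paper under left; swap left and middle", "middle"),
    Example("paper under right; swap left and middle", "right"),
]
prompt = build_few_shot_prompt(examples, "paper under middle; swap middle and right")
print(prompt)
```

The model never sees a video here: it only gets a handful of solved cases plus one unsolved case, and has to continue the pattern.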

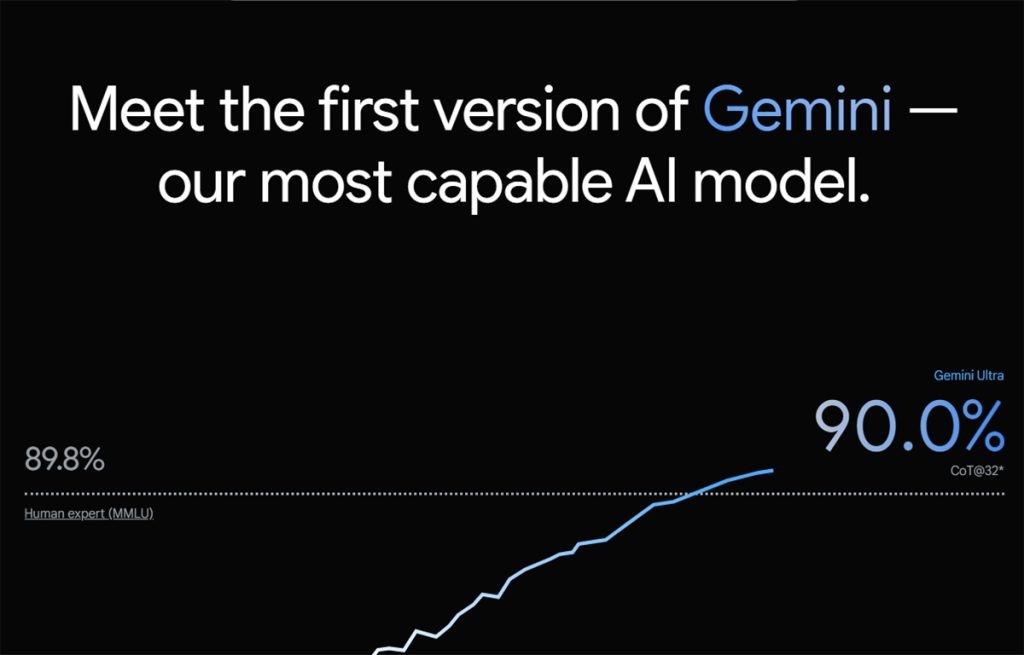

Secondly, there are the benchmarks and to be honest, there is so much to cover that I’ll have to cherry-pick the most interesting findings yet again. First of all, there’s the big “first model to beat human expert level” claim, which needs to be taken with a big grain of salt. In the Gemini introduction video, this statement is mentioned while showing the results of Gemini on the MMLU benchmark. While not going too much into the nitty gritty here, there are some reasons why this statement deserves at least some more explanation, especially when it comes to the comparison of Gemini with GPT-4.

First of all, while it is very widely used, the MMLU has the reputation of not being the most reliable benchmark out there. In short, the MMLU is a multiple-choice test across 57 different subjects, from marketing to professional law to mathematics. In other words, a very generic test (which is completely fine). However, a couple of months ago Anthropic wrote an interesting blog post explaining the issues with this benchmark. One of them is that the reference answers to several questions in the benchmark aren’t even correct. As a result, a lead of a couple of percentage points on this particular benchmark doesn’t really say a lot. For that reason, the general reaction in the industry was that it’s a bit strange for Google to still report on this benchmark months later.

Another issue with that comparison is that Google scored Gemini using chain-of-thought prompting with self-consistency over 32 samples (CoT@32), while GPT-4 was scored with a 5-shot approach, which is so far the more standard way of running this test in the industry. In short, CoT@32 lets the Gemini model reason its way to 32 candidate answers and then keeps the answer that those attempts agree on most often. The 5-shot approach, by contrast, shows the model 5 solved examples of similar problems and then lets it produce a single answer. While the practical details of these approaches are less important here, it’s clear that the models were not compared in an “apples-to-apples” way.
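The difference between one attempt and a vote over many attempts can be sketched in a few lines. This is plain self-consistency (majority voting), not Google's exact evaluation procedure, and `make_noisy_model` is a made-up stand-in for a real model that simply answers correctly with a chosen probability.

```python
import random
from collections import Counter
from typing import Callable


def self_consistency(sample_answer: Callable[[], str], k: int = 32) -> str:
    """Sample k answers and return the most frequent one (majority vote)."""
    votes = Counter(sample_answer() for _ in range(k))
    return votes.most_common(1)[0][0]


def make_noisy_model(correct: str, p_correct: float,
                     rng: random.Random) -> Callable[[], str]:
    """Hypothetical stand-in for an LLM: right with probability p_correct."""
    def sample() -> str:
        if rng.random() < p_correct:
            return correct
        # Otherwise pick one of the three wrong multiple-choice options.
        return rng.choice([c for c in "ABCD" if c != correct])
    return sample


rng = random.Random(0)
model = make_noisy_model(correct="B", p_correct=0.6, rng=rng)
single = model()                       # one attempt, as in a single-answer setup
voted = self_consistency(model, k=32)  # majority over 32 attempts
print(single, voted)
```

A model that is right only somewhat more often than it is wrong on a question will usually win the vote on that question, which is why a voted score and a single-attempt score are not directly comparable.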

Even more interestingly, when comparing GPT-4 and Gemini Ultra with the same 5-shot approach, we can see that GPT-4 comes out on top. It is only when Gemini Ultra uses this Chain-of-Thought with Self Consistency approach that it is able to beat GPT-4. For that reason, it seems like Google “invented” this reporting method just to be able to create the headline that they are “better than GPT-4”.

However, it’s not all smoke and mirrors and we should not be too harsh on Sundar Pichai and Demis Hassabis, the CEOs of Google and DeepMind respectively. When it comes to multimodality, Gemini Ultra seems to have a clear edge over GPT-4, especially in the image and video department. This should come as no surprise as Google owns YouTube, which provides them with massive amounts of video data to work with. In short, Google reports that Gemini Ultra beats the current state-of-the-art models on 9/9 image understanding benchmarks, 6/6 video understanding benchmarks, and 5/5 speech recognition and speech translation benchmarks.

What does this mean for the future of Generative AI?

Everyone is currently looking at OpenAI and Anthropic. Google has come out swinging and has thrown everything on the table at once. There are rumors of a potential release of an OpenAI GPT-4.5 model as well as a Q* project, which is still rather mysterious for now. Anthropic is also rumored to be working on a multimodal model, which could see the light of day in the coming weeks or months.

Secondly, there’s the question of pricing. One must not forget that while Google now has its state-of-the-art foundation model, it also has other tricks up its sleeve. One of these tricks is that Google has its own TPUs (tensor processing units) on which it runs these models. Since competitors such as OpenAI and Anthropic are more dependent on NVIDIA for their chips, Google could try to push them out of the game by undercutting them on price. We’ll definitely see a War of the Giants in the coming year, and now is the time to get a front-row seat.

So what should you take away from this?

- Gemini is multimodal “from the ground up”, which means that it was trained on different modalities at the same time (text, speech, image, video, code, …), rather than training different models for each modality and stitching those together

- The model comes in 3 flavors: Ultra (most capable, comparable to GPT-4), Pro (best for scaling across a wide range of tasks, comparable to GPT-3.5) and Nano (most efficient for on-device tasks)

- Due to regulatory issues, Gemini Ultra won't hit the market in Europe and the UK yet. Also, Gemini Pro is not yet implemented in Bard for these regions.

- While Google reports that Gemini outperforms GPT-4 on most benchmarks across all its modalities, there is quite some scrutiny in the industry as to whether these comparisons were presented in a fair “apples-to-apples” fashion

- The coming months will be very interesting: how will Gemini Ultra perform when it hits the market and gets tested by developers? Will OpenAI react by releasing GPT-4.5 or Q*? Is Anthropic coming with a multimodal LLM? Will other open-source multimodal models hit the market?

A lot of topics to cover, so stay tuned as we will tell you everything you need to know.

Resources

- Gemini Release page

- Gemini Technical Report

- Gemini Blogpost by Google (including benchmarks reported by Google)

- How it's Made: 3 cup shuffle demo

- 3 Cups shuffle with GPT-4

- Demis Hassabis Wired Post

- MMLU benchmark

- Anthropic post about evaluating AI systems (and critique on MMLU)

- Criticism about Gemini demos and benchmarks

- More on criticism about Gemini demos and benchmarks

- Gemini vs GPT-4 vs Grok

- Tested and Ranked: Gemini GPT-4 and Grok

- Gemini demos

- Problems with MMLU benchmark