Intro

In this post, we plunge into the heart of vector-based search with the Embedding Generator for Cognitive Search. We will see how it turns your documents into high-dimensional vector representations (embeddings), and how this capability brings contextual understanding to your chat and AI applications, enabling them to engage with users at a whole new level of sophistication.

Azure Cognitive Search custom skills

Custom skills are a powerful feature that allows you to extend the capabilities of Azure Cognitive Search’s indexing pipeline. When you ingest data into Azure Cognitive Search for indexing, it goes through a series of processing steps to tokenize, normalize, and prepare the data for search. Custom skills allow you to insert your custom code or external services at different points in this processing pipeline, enabling you to perform custom actions on the data before it is indexed.

These custom actions can include tasks such as:

Text extraction and analysis: You can use custom skills to extract specific information from documents, such as named entities, keywords, or sentiment analysis scores. This can enhance the search experience by providing more context to users.

Language translation: If you have content in multiple languages, you can use custom skills to translate the content into a common language for indexing, making your search system multilingual.

Image analysis: If your search data includes images, you can use custom skills to analyze the images and extract useful information, such as object recognition, image captions, or other metadata.

Data enrichment: You can use external data sources or APIs to enrich your indexed data. For example, you could use a custom skill to retrieve additional information about a product from an external database and include that information in the indexed content.

Entity recognition: You can identify and tag specific entities in your data, such as names of people, locations, organizations, or product names, which can help improve search accuracy and relevance.

To implement a custom skill, you expose a web service endpoint (for example, an Azure Function) that performs the desired action on the data. Azure Cognitive Search then calls this endpoint over HTTP as part of the indexing process.
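The contract such an endpoint honours can be sketched as follows. Azure Cognitive Search sends a `values` array of records, each with a `recordId` and a `data` object, and expects a `values` array back with the same `recordId`s plus `data`, `errors` and `warnings`. The helper name `handle_skill_request` and the upper-casing "enrichment" are hypothetical, chosen only to show the shape; they are not part of the Embedding Generator.

```python
import json
from typing import Any, Callable, Dict, List


def handle_skill_request(
    body: Dict[str, Any],
    enrich: Callable[[Dict[str, Any]], Dict[str, Any]],
) -> Dict[str, Any]:
    """Apply `enrich` to every record of a custom-skill request (hypothetical helper)."""
    results: List[Dict[str, Any]] = []
    for record in body.get("values", []):
        output: Dict[str, Any] = {
            "recordId": record["recordId"],  # must echo the incoming recordId
            "data": {},
            "errors": [],
            "warnings": [],
        }
        try:
            output["data"] = enrich(record.get("data", {}))
        except Exception as exc:  # report a per-record failure instead of failing the batch
            output["errors"].append({"message": str(exc)})
        results.append(output)
    return {"values": results}


if __name__ == "__main__":
    request = {"values": [{"recordId": "1", "data": {"text": "hello"}}]}
    # Illustrative enrichment only: upper-case the text field.
    response = handle_skill_request(request, lambda d: {"upper": d["text"].upper()})
    print(json.dumps(response))
```

Reporting errors per record means one bad document does not make the indexer retry or fail the whole batch.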

Azure OpenAI Embedding Generator

This part of the project is about understanding what happens to documents once they are passed into an Azure Cognitive Search indexing pipeline in which you have configured a Power Skill (OpenAI Embeddings). This Power Skill generates the embeddings that are stored in the index, which is crucial for running a similarity search later and for using an LLM to generate text based on the internal documents you have indexed.

The code is open-sourced by Microsoft and you can find it here: https://github.com/Azure-Samples/azure-search-power-skills/blob/main/Vector/EmbeddingGenerator/README.md

Document

This code defines a Python data model for representing documents. The class is named Document, and it is created with Python’s dataclasses module, which simplifies class creation by automatically generating special methods such as `__init__`, `__repr__`, and `__eq__` from the class attributes.
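A sketch of what such a dataclass can look like. The field names are inferred from the chunk objects in the sample response later in this post (`content`, `id`, `title`, `filepath`, `url`, `embedding_metadata`); the types and defaults are our assumptions, so check the repository for the exact definition.

```python
from dataclasses import asdict, dataclass
from typing import Any, Dict, Optional


@dataclass
class Document:
    """One document or chunk; fields mirror the keys in the function's JSON response."""
    content: str
    id: Optional[str] = None
    title: Optional[str] = None
    filepath: Optional[str] = None
    url: Optional[str] = None
    embedding_metadata: Optional[Dict[str, Any]] = None


if __name__ == "__main__":
    doc = Document(content="Some text", title="foo.md", filepath="foo.md")
    print(doc)  # readable __repr__ generated by @dataclass
    # __eq__ compares field by field, so this prints True
    print(doc == Document(content="Some text", title="foo.md", filepath="foo.md"))
    print(asdict(doc))  # plain dict, ready to serialize as JSON
```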

ChunkingResult

The 'ChunkingResult' code on GitHub.

As with Document, the `@dataclass` decorator from Python’s dataclasses module is applied to this class, so `__init__`, `__repr__`, and `__eq__` are generated automatically from the class attributes. This significantly reduces the boilerplate code you would otherwise write for these special methods.
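As an illustration only, a result container of this kind might bundle the produced chunks with a simple counter. The fields below (`chunks`, `skipped_chunks`) are a hypothetical shape, not the repository's exact definition; the sketch mainly shows why list-valued dataclass fields need `default_factory`.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ChunkingResult:
    """Hypothetical result container: chunk texts plus a counter of skipped chunks."""
    # A bare `= []` default raises ValueError when the class is defined;
    # default_factory gives every instance its own fresh list instead.
    chunks: List[str] = field(default_factory=list)
    skipped_chunks: int = 0


if __name__ == "__main__":
    first, second = ChunkingResult(), ChunkingResult()
    first.chunks.append("chunk one")
    print(first)   # ChunkingResult(chunks=['chunk one'], skipped_chunks=0)
    print(second)  # ChunkingResult(chunks=[], skipped_chunks=0): lists are not shared
```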

TokenEstimator

The 'TokenEstimator' code on GitHub.

This one is self-explanatory: it returns the token length of a piece of text. Any tokenizer encoding can be used here.
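A minimal sketch of the idea, with the encoder injected so any encoding can be plugged in. The class and method names are assumptions, and the whitespace encoder is only a stand-in so the sketch runs without extra packages. With the `tiktoken` package you could pass a real encoding's `encode` method instead, e.g. `tiktoken.get_encoding("cl100k_base").encode` (the encoding used by OpenAI's text-embedding-ada-002).

```python
from typing import Callable, List

Encoder = Callable[[str], List[int]]


def whitespace_encoder(text: str) -> List[int]:
    """Stand-in tokenizer: one fake token ID per whitespace-separated word."""
    return list(range(len(text.split())))


class TokenEstimator:
    """Counts tokens with whichever encoder is injected."""

    def __init__(self, encode: Encoder = whitespace_encoder) -> None:
        self._encode = encode

    def estimate_tokens(self, text: str) -> int:
        return len(self._encode(text))


if __name__ == "__main__":
    estimator = TokenEstimator()
    print(estimator.estimate_tokens("This is a test document"))  # 5 with the stand-in encoder

    # With tiktoken installed, a real BPE encoding can be used instead:
    # import tiktoken
    # estimator = TokenEstimator(tiktoken.get_encoding("cl100k_base").encode)
```

Injecting the encoder keeps chunk sizes consistent with the embedding model: counting with the same tokenizer the model uses avoids chunks that silently exceed its input limit.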

TextChunker

The 'TextChunker' code on GitHub.

This class is mainly responsible for splitting the text into chunks. It uses LangChain's MarkdownTextSplitter, RecursiveCharacterTextSplitter, and PythonCodeTextSplitter.

The main method, chunk_content, takes a piece of content and breaks it down into smaller chunks, so that large documents can be processed or analyzed in smaller segments. Some comments were added to the code to improve readability.
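The core idea behind token-based chunking with overlap can be sketched without LangChain. This is a simplified stand-in, not the TextChunker itself: words play the role of tokens, and the parameter names echo the NUM_TOKENS, TOKEN_OVERLAP and MIN_CHUNK_SIZE settings described later, with our assumption that MIN_CHUNK_SIZE means "drop chunks shorter than this".

```python
from typing import List


def chunk_words(
    text: str,
    num_tokens: int = 8,
    token_overlap: int = 2,
    min_chunk_size: int = 3,
) -> List[str]:
    """Split text into overlapping windows of `num_tokens` words (simplified sketch)."""
    if num_tokens <= token_overlap:
        raise ValueError("num_tokens must be greater than token_overlap")
    words = text.split()
    step = num_tokens - token_overlap  # how far the window advances each time
    chunks: List[str] = []
    for start in range(0, len(words), step):
        window = words[start:start + num_tokens]
        if len(window) >= min_chunk_size:  # skip chunks that are too small to be useful
            chunks.append(" ".join(window))
        if start + num_tokens >= len(words):
            break  # this window already reached the end of the text
    return chunks


if __name__ == "__main__":
    text = "one two three four five six seven eight nine ten"
    print(chunk_words(text, num_tokens=4, token_overlap=1))
    # ['one two three four', 'four five six seven', 'seven eight nine ten']
```

The overlap repeats a few tokens at each boundary so that a sentence cut between two chunks still appears whole in at least one of them; the trade-off is some duplicated text and, in this sketch, a short tail being dropped when it falls below the minimum size.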

ChunkEmbeddingHelper

The 'ChunkEmbeddingHelper' code on GitHub.

The code defines a ChunkEmbeddingHelper class to generate embeddings for content chunks using TextEmbedder. The generate_chunks_with_embedding method processes large documents in smaller parts, embedding each chunk separately. It introduces delays between embedding calls to avoid exceeding the OpenAI API rate limit. The _generate_content_metadata method creates metadata for each chunk, including field name, document ID, index, offset, length, and embedding. This approach allows efficient processing of large texts and enables individual analysis of content chunks.
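A sketch of the per-chunk loop, producing the same metadata keys that appear in the sample response (`fieldname`, `docid`, `index`, `offset`, `length`, `embedding`). The function signature, the injected `embed` callable and the `str.find`-based offset are our assumptions; in the real helper, `embed` would be the TextEmbedder call to Azure OpenAI.

```python
import time
from typing import Any, Callable, Dict, List


def generate_chunks_with_embedding(
    document_id: str,
    content: str,
    fieldname: str,
    chunks: List[str],
    embed: Callable[[str], List[float]],
    sleep_interval_seconds: float = 0.0,
) -> List[Dict[str, Any]]:
    """Embed each chunk and build metadata shaped like `embedding_metadata` (sketch)."""
    results: List[Dict[str, Any]] = []
    search_from = 0
    for index, chunk in enumerate(chunks):
        # Character position of the chunk in the source text (-1 if not found verbatim).
        offset = content.find(chunk, search_from)
        if offset >= 0:
            search_from = offset + 1  # overlapping chunks start after the previous one
        results.append({
            "fieldname": fieldname,
            "docid": document_id,
            "index": index,
            "offset": offset,
            "length": len(chunk),
            "embedding": embed(chunk),
        })
        if sleep_interval_seconds > 0 and index < len(chunks) - 1:
            time.sleep(sleep_interval_seconds)  # throttle calls to stay under the rate limit
    return results
```

For the single-chunk sample request later in this post, this yields index 0, offset 0 and length 130, which matches the sample response.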

TextEmbedder

The 'TextEmbedder' code on GitHub.

This code defines a TextEmbedder class responsible for embedding text using the Azure OpenAI API. It sets up the API connection and handles embedding requests. The clean_text method removes unnecessary spaces and truncates the text if it exceeds a specified limit. The embed_content method retries embedding requests using exponential backoff in case of errors. It returns the embedding of the provided text with adjustable precision, using the Azure OpenAI API. The class is designed to facilitate embedding text and handling potential API issues while ensuring a smooth and reliable process.
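The three behaviours described above (cleaning, retrying with exponential backoff, adjustable precision) can be sketched in plain Python. This is a dependency-free stand-in rather than the TextEmbedder's actual code; the default limit of 8000 characters, the retry counts and the precision of 9 decimals are assumptions.

```python
import random
import re
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def clean_text(text: str, max_chars: int = 8000) -> str:
    """Collapse runs of whitespace into single spaces, then truncate to `max_chars`."""
    return re.sub(r"\s+", " ", text).strip()[:max_chars]


def with_backoff(call: Callable[[], T], max_attempts: int = 5,
                 base_delay: float = 1.0, max_delay: float = 20.0) -> T:
    """Retry `call`, roughly doubling the wait (with random jitter) after each failure."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: re-raise the last error
            delay = min(max_delay, base_delay * 2 ** attempt)
            time.sleep(delay * random.uniform(0.5, 1.0))  # jitter spreads out retries
    raise AssertionError("unreachable")


def round_embedding(vector: List[float], precision: int = 9) -> List[float]:
    """Round each component to `precision` decimals to shrink the JSON payload."""
    return [round(x, precision) for x in vector]
```

In the function, `call` would wrap the actual Azure OpenAI embedding request and `round_embedding` would be applied to the returned vector. Capping the delay keeps a long outage from producing multi-minute waits inside a single skill invocation.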

The function

It is an HTTP-triggered Azure Function that implements text chunking and embedding using the Azure OpenAI API. The function expects a JSON request containing a list of documents, each with a unique identifier (recordId) and the relevant data fields (text, document_id, filepath, and fieldname). The text from each document is processed using the TextChunker and ChunkEmbeddingHelper classes.

The text_chunking function receives HTTP requests and validates the JSON payload against a predefined JSON Schema. If the request is invalid, it returns an error response.

The function retrieves environment variables related to the Azure OpenAI API and text chunking parameters (e.g., NUM_TOKENS, MIN_CHUNK_SIZE, TOKEN_OVERLAP, AZURE_OPENAI_EMBEDDING_SLEEP_INTERVAL_SECONDS).

It iterates through each document in the request, chunks the text content into smaller segments using the TEXT_CHUNKER object, and generates embeddings for each chunk using the CHUNK_METADATA_HELPER object. The sleep_interval_seconds introduces delays between embedding calls to avoid API rate limits.

For each chunk, the function stores the embedding metadata in the original chunk object.

The resulting chunked data is assembled into a response JSON and returned.

The get_request_schema function defines the JSON schema for the request payload, ensuring it contains the required fields and proper data types.
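A plausible version of such a schema, reconstructed from the fields listed above (recordId plus text, document_id, filepath and fieldname); the repository's exact schema may differ. With the `jsonschema` package installed, `jsonschema.validate(instance=body, schema=get_request_schema())` performs the check and raises `jsonschema.ValidationError` on bad input. To keep the sketch dependency-free, `schema_errors` below is a hypothetical mini-validator that understands only `type`, `required`, `properties` and `items`.

```python
from typing import Any, Dict, List


def get_request_schema() -> Dict[str, Any]:
    """Request schema reconstructed from the fields described in this post (an assumption)."""
    data_fields = ["text", "document_id", "filepath", "fieldname"]
    return {
        "type": "object",
        "required": ["values"],
        "properties": {
            "values": {
                "type": "array",
                "items": {
                    "type": "object",
                    "required": ["recordId", "data"],
                    "properties": {
                        "recordId": {"type": "string"},
                        "data": {
                            "type": "object",
                            "required": data_fields,
                            "properties": {f: {"type": "string"} for f in data_fields},
                        },
                    },
                },
            },
        },
    }


_TYPES = {"object": dict, "array": list, "string": str}


def schema_errors(instance: Any, schema: Dict[str, Any], path: str = "$") -> List[str]:
    """Check a small JSON Schema subset: type, required, properties, items."""
    expected = schema.get("type")
    if expected is not None and not isinstance(instance, _TYPES[expected]):
        return [f"{path}: expected {expected}"]
    errors: List[str] = []
    if isinstance(instance, dict):
        errors += [f"{path}: missing '{k}'" for k in schema.get("required", []) if k not in instance]
        for key, sub_schema in schema.get("properties", {}).items():
            if key in instance:
                errors += schema_errors(instance[key], sub_schema, f"{path}.{key}")
    elif isinstance(instance, list):
        for i, item in enumerate(instance):
            errors += schema_errors(item, schema.get("items", {}), f"{path}[{i}]")
    return errors
```

Collecting every error with its path (for example `$.values[0].data: missing 'document_id'`) makes the function's error response much easier to act on than a bare "invalid request".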

Testing it locally

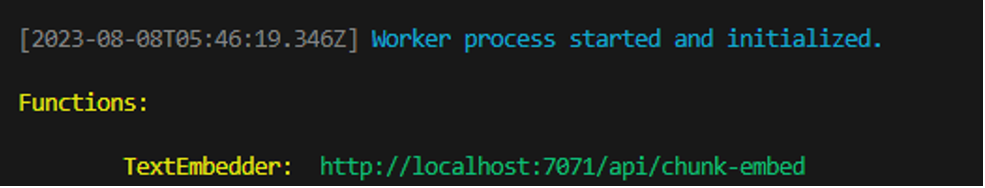

To test it locally, we use Postman. First, press F5 in the VS Code project: this launches the function locally and lets us make HTTP calls to it. After pressing F5, you will see something like the following in the terminal window, which means your REST API is ready for testing.

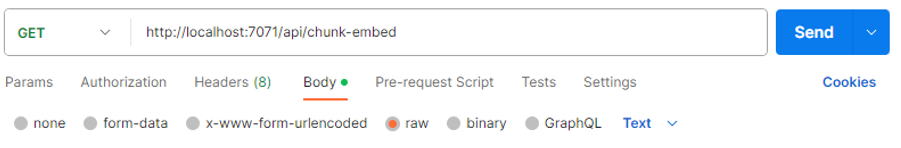

Now, in Postman, create a new POST request to the local function URL shown in the terminal. In the Body tab, select raw and paste:

```json
{
  "values": [
    {
      "recordId": "1234",
      "data": {
        "document_id": "12345ABC",
        "text": "This is a test document and it is big enough to ensure that it meets the minimum chunk size. But what about if we want to chunk it",
        "filepath": "foo.md",
        "fieldname": "content"
      }
    }
  ]
}
```
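If you prefer scripting the call instead of using Postman, the same request can be built with Python's standard library. The URL is an assumption: `http://localhost:7071` is the default address of the local Functions host, and `/api/chunk-embed` is the route used in the skill definition further down; use the URL printed in your terminal if it differs. The sketch only builds the request; sending it requires the function to be running.

```python
import json
import urllib.request

# Assumed local URL: default Functions host port plus the chunk-embed route.
LOCAL_URL = "http://localhost:7071/api/chunk-embed"


def build_request(url: str, document_id: str, text: str, filepath: str,
                  fieldname: str = "content", record_id: str = "1234") -> urllib.request.Request:
    """Build the same POST request as the Postman example."""
    payload = {
        "values": [{
            "recordId": record_id,
            "data": {"document_id": document_id, "text": text,
                     "filepath": filepath, "fieldname": fieldname},
        }]
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response."""
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)


# Usage, with the function running locally (F5):
# result = send(build_request(LOCAL_URL, "12345ABC", "Some text to chunk", "foo.md"))
# print(len(result["values"][0]["data"]["chunks"]))
```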

If it works, you will get a response containing the chunks and their embeddings, like the one below (truncated; the full embedding array is much longer):

```json
{
  "values": [
    {
      "recordId": "1234",
      "data": {
        "chunks": [
          {
            "content": "This is a test document and it is big enough to ensure that it meets the minimum chunk size. But what about if we want to chunk it",
            "id": null,
            "title": "foo.md",
            "filepath": "foo.md",
            "url": null,
            "embedding_metadata": {
              "fieldname": "content",
              "docid": "12345ABC",
              "index": 0,
              "offset": 0,
              "length": 130,
              "embedding": [
                0.011029272,
                0.012983655,
                0.02085585,
                ...
```

Deployment

Once you have tested it locally, deploy it to Azure Functions by following the guidelines in the repository: https://github.com/Azure-Samples/azure-search-power-skills/blob/main/Vector/EmbeddingGenerator/README.md

Integration of Azure Function as Custom Skill

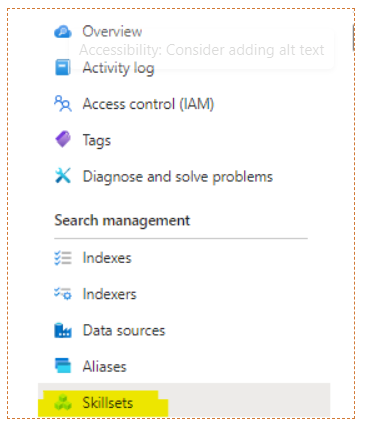

To use this skill in a Cognitive Search pipeline, you need to add a skill definition to your skillset. Below is a sample skill definition for this example (update the inputs and outputs to reflect your particular scenario and skillset environment). In your Cognitive Search resource, click Skillsets.

Then click Add skillset.

Then copy the code below into the skills[] array:

```json
{
  "@odata.type": "#Microsoft.Skills.Custom.WebApiSkill",
  "description": "Azure Open AI Embeddings Generator",
  "uri": "[AzureFunctionEndpointUrl]/api/chunk-embed?code=[AzureFunctionDefaultHostKey]",
  "batchSize": 1,
  "context": "/document/content",
  "inputs": [
    {
      "name": "document_id",
      "source": "/document/document_id"
    },
    {
      "name": "text",
      "source": "/document/content"
    },
    {
      "name": "filepath",
      "source": "/document/file_path"
    },
    {
      "name": "fieldname",
      "source": "='content'"
    }
  ],
  "outputs": [
    {
      "name": "chunks",
      "targetName": "chunks"
    }
  ]
}
```

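Be sure to replace [AzureFunctionEndpointUrl] with your Azure Function URL and [AzureFunctionDefaultHostKey] with the function's host key (the API key passed in the code parameter).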

For reference, the entire JSON will look like this:

```json
{
  "name": "skillset1691474262532",
  "description": "",
  "skills": [
    {
      "@odata.type": "#Microsoft.Skills.Custom.WebApiSkill",
      "description": "Azure Open AI Embeddings Generator",
      "uri": "[AzureFunctionEndpointUrl]/api/chunk-embed?code=[AzureFunctionDefaultHostKey]",
      "batchSize": 1,
      "context": "/document/content",
      "inputs": [
        {
          "name": "document_id",
          "source": "/document/document_id"
        },
        {
          "name": "text",
          "source": "/document/content"
        },
        {
          "name": "filepath",
          "source": "/document/file_path"
        },
        {
          "name": "fieldname",
          "source": "='content'"
        }
      ],
      "outputs": [
        {
          "name": "chunks",
          "targetName": "chunks"
        }
      ]
    }
  ],
  "cognitiveServices": {
    "@odata.type": "#Microsoft.Azure.Search.DefaultCognitiveServices"
  }
}
```
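If you prefer scripting over the portal, the same skillset can be created through the Azure Cognitive Search REST API with `PUT https://[service].search.windows.net/skillsets/[name]?api-version=...`, authenticated with an admin `api-key` header. The sketch below only builds that request from the JSON above; the service name, keys and pinned `api-version` are assumptions to adapt to your environment.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict

API_VERSION = "2020-06-30"  # a generally available Search REST API version


def build_skillset(name: str, function_url: str, function_key: str) -> Dict[str, Any]:
    """The skillset JSON from this post, with the two placeholders filled in."""
    skill = {
        "@odata.type": "#Microsoft.Skills.Custom.WebApiSkill",
        "description": "Azure Open AI Embeddings Generator",
        "uri": f"{function_url.rstrip('/')}/api/chunk-embed?code="
               f"{urllib.parse.quote(function_key, safe='')}",
        "batchSize": 1,
        "context": "/document/content",
        "inputs": [
            {"name": "document_id", "source": "/document/document_id"},
            {"name": "text", "source": "/document/content"},
            {"name": "filepath", "source": "/document/file_path"},
            {"name": "fieldname", "source": "='content'"},
        ],
        "outputs": [{"name": "chunks", "targetName": "chunks"}],
    }
    return {
        "name": name,
        "description": "",
        "skills": [skill],
        "cognitiveServices": {"@odata.type": "#Microsoft.Azure.Search.DefaultCognitiveServices"},
    }


def build_put_skillset(service: str, admin_key: str, skillset: Dict[str, Any]) -> urllib.request.Request:
    """PUT /skillsets/{name} creates the skillset, or updates it if it already exists."""
    url = (f"https://{service}.search.windows.net/skillsets/"
           f"{urllib.parse.quote(skillset['name'])}?api-version={API_VERSION}")
    return urllib.request.Request(
        url,
        data=json.dumps(skillset).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": admin_key},
        method="PUT",
    )


# Usage (hypothetical service name and keys; this sends a real request):
# skillset = build_skillset("skillset1691474262532", "https://<app>.azurewebsites.net", "<host-key>")
# with urllib.request.urlopen(build_put_skillset("<service>", "<admin-key>", skillset)) as resp:
#     print(resp.status)
```

Using PUT rather than POST makes the call idempotent: rerunning the script after editing the skill updates the existing skillset instead of failing.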

Conclusion

Congratulations, element61 reader, for embarking on this illuminating journey into the realm where data insights seamlessly evolve into dynamic interactions. You’ve delved into the intricacies of Azure Cognitive Search’s custom skills, witnessed the transformative power of the OpenAI Embedding Generator, and mastered the art of crafting a tailored ecosystem that transcends conventional search boundaries.

As we reach the conclusion of this chapter, it’s only the beginning of what promises to be an exciting saga. The path we’ve traversed, from understanding the intricacies of custom skills to unlocking the potential of embedding generation, is merely a stepping stone. There’s a world of possibilities ahead, waiting to be unveiled in the upcoming second part of this series.

In the forthcoming installment, we’ll venture even deeper, exploring how these enriched indexes and embeddings fuel the capabilities of advanced language models. Imagine conversing with an AI that not only understands your queries but interprets them in the context of your data. Envision a future where interactions with information are as fluid and natural as a conversation with a colleague.

Stay tuned as we dive into the heart of Language Models and chatbot integrations. We’ll reveal how the bridges we’ve built between search, embeddings, and AI models transform the way we interact with our data. In the world of tomorrow, knowledge isn’t just at your fingertips — it’s within your conversational grasp.

Thank you for joining us on this path of innovation and exploration. Until the second part unveils its wonders, keep dreaming, keep experimenting, and keep pioneering the future where insights and interactions converge in ways yet unimagined.

Want to know more?

This insight is part of a series where we go through the necessary steps to create and optimize Chat & AI Applications.

Below, you can find the full overview and the links to the different parts of the series:

- Overview: Elevate Chat & AI Applications: Mastering Azure Cognitive Search with Vector Storage for LLM Applications with Langchain | element61

- Part 1 - Architecture: Building the Foundation for AI-Powered Conversations | element61

- (this article) Part 2 - Embedding Generator for Cognitive Search: Revolutionizing Conversational Context | element61

- Part 3 - Configuration Deep Dive: Empowering Conversations with Vector Storage | element61

- Part 4 - Backend Brilliance: Integrating Langchain and Cognitive Search for AI-Powered Chats | element61

- Part 5 - Frontend Flourish: Craft Immersive AI Experiences Using Streamlit | element61

If you want to get started with creating your own AI-powered chatbot, contact us.