Informatica

Informatica is an independent company providing data-integration software and services. Founded in 1993, Informatica employs more then 1.400 employees and has more than 3.000 customers worldwide, including 91 of the Fortune 100 companies.

PowerCenter 8 is Informatica's enterprise data integration platform that serves as the foundation for all data integration projects. PowerCenter 8 is a scalable, platform independent product, capable of reading from and writing to virtually

any data source. The platform allows for processing both structured and unstructured data coming from relational, file-based, mainframe and even message queue data sources. Data can be processed in batch, real-time or by using change data capture (CDC). Dynamic partitioning allows for data to load in concurrent data streams. PowerCenter 8 supports high availability, grid-computing and has extensive failover options. Metadata is stored in a global metadata repository, enabling data lineage functionality.

extended support for scalable services. The Advanced Edition finally will add data profiling and reporting capabilities, extended metadata analysis and team based development.

Tuning

Tuning a PowerCenter 8 ETL environment is not that straightforward. A chain is only as strong as the weakest link. There are four crucial domains that require attention: system, network, database and the PowerCenter 8 installation itself. It goes without saying that without a well performing infrastructure the tuning of the PowerCenter 8 environment will not make much of a difference.

As the first three domains are located in the realms of administrators, this article will only briefly touch these subjects and will mainly focus

on the items available to developers within PowerCenter 8.

Tuning is an iterative process: at each iteration the largest bottleneck is removed, gradually improving performance. Bottlenecks can occur on the system, on the database (either source or target), or within the mapping or session ran by the Integration Service. To identify bottlenecks, run test sessions, monitor the system usage and gather advanced performance statistics while running. Examine the session log in detail as it provides valuable information concerning session performance. From the perspective of a developer, search for performance problems in the following order:

-

source / target

-

mapping

-

session

-

system

Session logs

The PowerCenter 8 session log provides very detailed information that can be used to establish a baseline and will identify potential problems.

Very useful for the developer are the detailed thread statistics that will help benchmarking your actions. The thread statistics will show if the bottlenecks occur while transforming data or while reading/writing. Always focus attention on the thread with the highest busy percentage first. For every thread, detailed information on the run and idle time are presented. The busy percentage is calculated: (run time idle time) / (run time * 100).

Each session has a minimum of three threads:

-

reader thread

-

transformation thread

-

writer thread

***** RUN INFO FOR TGT LOAD ORDER GROUP [1], CONCURRENT SET [1] *****

Thread [READER_1_1_1] created for [the read stage] of partition point [SQ_X_T_CT_F_SITE_WK_ENROLL] has completed: Total Run Time = [31.984171] secs, Total Idle Time = [0.000000] secs, Busy Percentage = [100.000000].

Thread [TRANSF_1_1_1] created for [the transformation stage] of partition point [SQ_X_T_CT_F_SITE_WK_ENROLL] has completed: Total Run Time = [0.624996] secs, Total Idle Time = [0.453115] secs, Busy Percentage = [27.501083].

Thread [WRITER_1_*_1] created for [the write stage] of partition point [T_CT_F_SITE_WK_BSC] has completed: Total Run Time = [476.668825] secs, Total Idle Time = [0.000000] secs, Busy Percentage = [100.000000].

In this particular case it is obvious that the database can be considered as the main bottleneck. Both reading and writing use most of the execution time. The actual transformations only use a very small amount of time. If a reader or writer thread is 100% busy, consider partitioning the session. This will allow the mapping to open several connections to the database, each reading/writing data from/to a partition thus improving data read/write speed.

INFO 23/Dec/2008 09:02:22 node_etl02 MANAGER PETL_24031

***** RUN INFO FOR TGT LOAD ORDER GROUP [1], CONCURRENT SET [1] *****

Thread [READER_1_1_1] created for [the read stage] of partition point [SQ_T_CT_F_SITE_WK_BSC] has completed. The total run time was insufficient for any meaningful statistics.

Thread [WRITER_1_*_1] created for [the write stage] of partition point [T_CT_F_SITE_WK_BSC] has completed: Total Run Time = [30.937302] secs, Total Idle Time = [20.345600] secs, Busy Percentage = [34.23602355].

In the example above, the transformation thread poses the largest bottleneck and needs to be dealt with first. The reader thread finished so quickly no meaningful statistics were possible. The writer thread spends the majority of time in the idle state, waiting for data to emerge from the transformation thread. Perhaps an unsorted aggregator is used, causing the Integration Service to sort all data before releasing any aggregated record ?

Establishing a baseline

To be able to benchmark the increase or decrease of performance following an action it is important to establish a baseline to compare with. It is good practice to log in detail every iteration in the tuning process. This log will enable the developer to clearly identify the actions that enhanced or decreased performance and serve as later reference for future tuning efforts. Never assume that an action will improve performance because the action is a best practice or worked before: always test and compare with hard figures. The thread statistics are used to build this log.

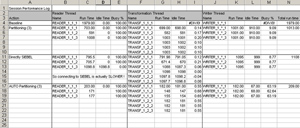

Figure 1: An example log file

Optimally reading sources

A homogeneous join is a join between relational sources that combine data from a single origin: for example a number of Oracle tables being joined.

A heterogeneous joins is a join between sources that combine data from different origins: when for example Oracle-data is joined with a flat file.

Consider pre-sorting the data in the database, this will make further sorting for later aggregators, joiners, by the Integration Service unnecessary. Make sure the query executed on the database has a favourable execution plan. Use the explain plan (Oracle) facility to verify the query's execution plan if indexes are optimally used. Do not use synonyms or database links unless really needed as these will slow down the data stream.

In general it is good practice to always generate keys for primary key and foreign key fields. If no key is available or known a dummy key should be used. In the reference table an extra dummy record should be inserted. This method will improve join performance when using homogenous joins. In general three dummy rows should be included:

-

999999 Not applicable

-

999998 Not available

-

999997 Missing

Optimally writing to targets

Indexes are usually the root cause of slow target behaviour. In Oracle, every index on a table will decrease the performance of an insert statement by 25%. The more indexes are defined, the slower insert/update statements will be. Every time an update/insert statement is executed, the indexes need to be updated as well. Try dropping the indexes on the target table. If this does not increases performance, the network is likely causing the problem.

In general avoid having too many targets in a single mapping. Increasing the commit interval will decrease the amount of session overhead. Three different commit types are available for targets:

-

target based commit: fastest

-

source base commit: in between

-

user defined commit: slowest , avoid using user defined commit when not really necessary

Session is only inserting data

Session is mainly updating a limited set of data

Clever mapping logic

Now data is being read and written in the most optimal way, it is time to focus our attention to the actual mapping. The basic idea is simple: minimize the incoming data stream and create as little as possible overhead within the mapping. A first step in achieving this goal is to reduce the number of transformations to a minimum by reusing common logic. Perhaps the use of the same lookup in different pipes could be redesigned to only use the lookup once? By using clever caching strategies, cache can be reused in the mapping or throughout the workflow. Especially with active transformations (transformations where the number of records is being changed) the use of caching is extremely important. Active transformations that reduce the number of records should be placed as early as possible in the mapping.

Data type conversions between transformations in the mapping are costly. Be sure to check if all explicit and implicit conversions really are necessary. When the data from a source is passed directly to a target without any other actions to be done, connect the source qualifier directly to the target without the use of other transformations.

Single pass reading allows multiple targets being populated using the data from the same source qualifier. Consider using single pass reading if there are multiple sessions using the same source: the existing mapping logic can be combined by using multiple pipelines. Common data manipulations for all pipelines should be done before splitting out the pipeline.

Transformation Mechanics

Every transformation has its specifics related to performance. In the section below the most important items are discussed.

A joiner transformation should be used to join heterogeneous data sources. Homogeneous sources should always be joined in the database by using the user defined join in the source qualifier transformation.

If not sorted at database level, always use a sorter transformation to sort the data before entering the joiner transformation. Make sure the sorter transformation has sufficient cache to enable a 1-pass sort. Not having sufficient cache will plummet performance. The data could be sorted in the joiner, but there are three advantages of using the sorter transformation:

-

The use of sorted input enables the joiner transformation to start passing data to subsequent transformations before all data was passed in the joiner transformation. Consequently, the transformations following the joiner transformation start receiving data nearly immediately and do not have to wait until all the data was sorted and joined in the joiner transformation. This logic is only valid when the source can be sorted in the database: for example when joining SQL-Server and Oracle. Both sources can be sorted in the database, making additional sorting using sorters superfluous. When a sorter is needed, for example when joining Oracle and a flat file, the sorter will have to wait until all data is read from the flat file before records to the joiner transformation can be passed.

-

The sorting algorithm used in the sorter is faster then the algorithm used in joiners or aggregators.

-

The use of sorted input in the joiner transformation, allows for a smaller cache size, leaving more memory for other transformations or sessions. Again, when a flat file is used, a sorter will be needed prior to the joiner transformation. Although the joiner transformation uses less cache, the sorter cache will need to be sufficiently large to enable sorting all input records.

Figure 2: Example of an unnecessary sort. The data should be sorted in advance at database level.

Only use a filter transformation for non-relational sources. When using relational sources, filter in the source qualifier. Filter as early as possible in the data stream. Try filtering by using numeric lookup conditions. Numeric matching is considerably faster then the matching of strings. Avoid complex logic in the filter condition. Be creative in rewriting complex expressions to the shortest possible length. When multiple filters are needed, consider using a router transformation as this will simplify mapping logic.

A lookup transformation is used to lookup values from another table. Clever use of lookup caches can make a huge difference in performance.

By default, lookup transformations are cached. The selected lookup fields from the lookup table are read into memory and a lookup cache file is built every time the lookup is called. To minimize the usage of lookup cache, only retrieve lookup ports that are really needed.

However, to cache or not to cache a lookup really depends on the situation. An uncached lookup makes perfect sense if only a small percentage of lookup rows will be used. For example if we only need 200 rows in a 10 000 000 rows table. In this particular case, building the lookup cache would require an extensive amount of time. A direct select to the database for every lookup row will be much faster on the condition that the lookup key in the database is indexed.

Sometimes a lookup is used multiple times in an execution path of a mapping or workflow. Re-caching the lookup every time would be time consuming and unnecessary, as long as the lookup source table remains unchanged. The persistent lookup cache property was created to handle this type of situation. Only when calling the lookup the first time, the lookup cache file is refreshed. All following lookups reuse the persistent cache file. Using a persistent cache can improve performance considerably because the Integration Service builds the memory cache from the cache files instead of the database.

Use dynamic lookup cache when the lookup source table is a target in the mapping and updated dynamically throughout the mapping. Normal lookups caches are static. The records that were inserted in the session are not available to the lookup cache. When using dynamic lookup cache, newly inserted or updated records are update in the lookup cache immediately.

Ensure sufficient memory cache is available for the lookup. If not, the Integration server will have to write to disk, slowing down.

By using the Additional Concurrent Pipelines property at session level, lookup caches will start building concurrently at the start of the mapping. Normal behaviour would be that a lookup cache is created only when the lookup is called. Pre-building caches versus building caches on demand can increase the total session performance considerably, but only when the pre-build lookups will be used for sure in the session. Again, the performance gain of setting this property will depend on the particular situation.

An aggregator transformation is an active transformation, used to group and aggregate data. If the input was not sorted already, always use a sorter transformation in front of the aggregator transformation. As with the joiner transformation the aggregator transformation will accumulate data until the dataset is complete and only starts processing and sending output records from there on. When sorted input is used, the aggregator will process and sent output records as soon as the first set of records is complete. This will allow for much faster processing and smaller caches. Use as little as possible functions and difficult nested conditions. Especially avoid the use of complex expressions in the group by ports. If needed use an expression transformation to build these expressions in advance. When using change data capture, incremental aggregation will enhance performance considerably.

Sometimes, simple aggregations can be done by using an expression transformation that uses variables. In certain cases this could be a valid alternative for an aggregation transformation.

Expression transformations are generally used to calculate variables. Try not to use complex nested conditions, use decode instead. Functions are more expensive than operators; avoid using functions if the same can be achieved by using operators. Implicit data type conversion is expensive. Try to convert data types as little as possible. Working with numbers is generally faster then working with strings. Be creative in rewriting complex expressions to the shortest possible length.

The use of a sequence generator transformation versus a database sequence depends on the load method of the target table. If using bulk loading, database sequences cannot be used. The sequence generator transformation can overcome this problem. Every row is given a unique sequence number. Typically a number of values are cached for performance reasons.

There is however a big catch. Unused sequence numbers at the end of the session are lost. The next time the session is run, the sequence generator will cache a new batch of numbers.

For example: a sequence generator caches 10000 values. 10000 rows are loaded, using the cached values. At row 10001, a new batch of sequence values is cached: from 10000 to 20000. However the last row in the session is 10002. All values between 10002 and 20000 are lost. Next time the session is ran, the first inserted row will have a key of 20001.

To avoid these gaps use a sequence generator in combination with an unconnected lookup. First look up the latest key value in the target table. Then use an expression that will call the sequence generator and add one to the key value that was just retrieved. The sequence generator should restart numbering at every run. There are some advantages to this approach:

-

a database sequence is emulated and bulk loads remain possible.

-

if rows are deleted, gaps between key values will be caused by deletes and not by caching issues.

-

the limits of a sequence will not be reached so quickly

Clean up error handling

Collecting Advanced Performance data

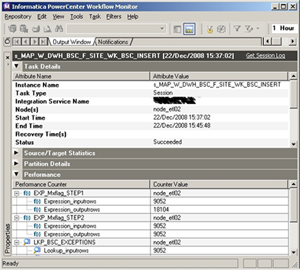

To really get into a session and understand exactly what is happening within a session, even more detailed performance data than available into the session log can be captured. This can be done by, at session level, enabling the checkbox Collect performance data'.

This option will allow the developer to see detailed transformation based statistics in the Workflow Monitor while running the session. When finished a performance file is written to the session log folder. For every source qualifier and target definition performance details are provided, along with counters that show performance information about each transformation.

A number of counters are of particular interest to the developer:

-

errorrows: should always be zero, if errors occur, remove the cause

-

readfromdisk/writetodisk: indicates not enough cache memory is available. Increase the cache size until this counter is no longer shown.

Figure 3: Session statistics while using Collect Performance Data

Memory Optimization

Memory plays an important role when the Integration Service is running sessions. Optimizing cache sizes can really make a huge difference in performance.

You can configure the amount of buffer memory yourself or you can configure the Integration Service to automatically calculate buffer settings at run time. In stead of calculating all values manually or by trial and error, run the session once on auto and retrieve the correct values from the session log:

INFO 12/30/2008 1:23:57 AM INFO8_ASPMIS003 MAPPING TM_6660 Total Buffer Pool size is 90000000 bytes and Block size is 65536 bytes.

The easiest way of calculating the correct cache sizes is by keeping the defaults on auto and examining the session log. In the session log a line is written for every lookup that looks like this:

INFO 12/30/2008 1:26:11 AM INFO8_ASPMIS003 LKPDP_2:TRANSF_1_1 DBG_21641 LKP_VORIG_RECORD: Index cache size = [12000800], Data cache size = [24002560]

Copy these values into the session properties. Be sure to verify the performance counters to validate no disk reads/writes are done.

Sorter transformations need special attention concerning cache sizes as well. If not enough cache is available, the sorter will require a multi pass sort, dropping the session performance. If so, a warning will be displayed in the session log:

TRANSF_1_1_1> SORT_40427 Sorter Transformation [srt_PRESTATIE] required 4-pass sort (1-pass temp I/O: 19578880 bytes). You may try to set the cache size to 27 MB or higher for 1-pass in-memory sort.

The maximum amount of memory used by transformation caches is set by two properties:

-

Maximum Memory Allowed for Automatic Memory Attributes

-

Maximum Percentage of Total Memory Allowed for Automatic Memory Attributes

Further session optimization

High precision

Concurrent sessions

Depending on the available hardware, sessions can be run concurrently instead of sequential. At Integration Service level the number of concurrent sessions can be set. This value is set default to 10. Depending on the number of CPU's at the PowerCenter 8 server and at source and target database this value can be increased or decreased. The next step is in designing a workflow that will launch a number of sessions concurrently. By trial and error an optimal sampling can be achieved.

Session logging

The amount detail in a session log is determined by the tracing level. This level ranges from Terse' to Verbose Data'. For debugging or testing purposes the Verbose Data' option will trace every row that passes in the mapping in the session log. At terse, only initialization information, error messages, and notification of rejected data are logged. It is quite clear the Verbose Data' option causes a severe performance penalty.

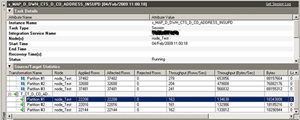

Partioning

If a transformation thread is 100% busy, consider adding a partition point in the segment. Pipeline partitioning will allow for parallel execution within a single session. A session will have multiple threads for processing data concurrently. Processing data in pipeline partitions can improve performance, but only if enough CPU's are available. As a rule of thumb, 1.5 CPU's should be available per partition. Adding a partition point will increase the number of pipeline stages. This means a transformation will logically be used a number of times, so remember to multiply the cache memory of transformations, session,.. by the number of partitions. Partitioning can be specified on sources / targets and the mapping transformations.

Using partitioning requires the Partition Option' in the PowerCenter 8 license.

Figure 4: The execution time of this mapping was reduced from 400 to 90 seconds using partitioning and advanced mapping tuning.

Pushdown Optimization

-

Partial pushdown optimization to source: one or more transformations can be processed in the source

-

Partial pushdown optimization to target: one or more transformations can be processed in the target

-

Full pushdown optimization: all transformations can be processed in the database A number of transformations are not supported for pushdown: XML, ranker, router, Normalizer, Update Strategy,

Architecture

For optimal performance, consider running the Integration service in ASCII data movement mode when all sources and targets use 7 or 8-bit ASCII as UNICODE can take up to 16 bits.

The repository database should be located on the PowerCenter 8 machine. If not, the repository database should be physically separated from any target or source database. This will prevent the same database machine is writing to a target while reading from the repository. Always use native connections over ODBC connections as they are a lot faster. Maximize the use of parallel operations on the database. The use of parallelism will cut execution times considerably. Remove any other application from the PowerCenter 8 server apart from the repository database installation.

Increase the database network packet size to further improve performance. For Oracle this can be done in the listener.ora and tnsnames.ora. Each database vender has some specific options that can be beneficial for performance. For Oracle, the use of IPC protocol over TCP will result in a performance gain of at least by a factor 2 to 6. Inter Process Control (IPC) will remove the network layer between the client and Oracle database-server. This can only be used if the database is residing on the same machine as the PowerCenter 8 server. Check the product documentation for further documentation.

The tuning of servers and database is just as important as delivering an optimized mapping and should not be ignored. Tuning a system for a datawarehouse poses different challenges then tuning a system for an OLTP-application. Try to involve DBA's and admins as soon as possible in this process so they fully understand the sensitivities involved with data warehousing.

Conclusion

Increased competition and tighter budgets force IT managers to look for alternatives to decrease data centre costs while providing more services to a growing and demanding user base. One way in accomplishing this is by reducing the load on existing servers by optimizing the execution of workflows. The reduced execution times of optimized sessions increase the amount of free space in an often crowded ETL load window.

Using a number of simple guidelines, sessions can be tuned to perform at their best. In typical situations, the total ETL time can be reduced by 10 to 40% without making any structural investments in hardware. Performance tuning of sessions however is not an exact science and often needs trial and error to find an optimum. Tuning sessions can be a labour intensive, even tedious, as the tuning process tends to repeat itself as time passes by.

Tuning is not a job solely done by the developer. Tuning is a team effort. To ensure optimal performance, developers, administrators and DBA's need to be actively and continuously involved. Care for optimization and tuning is not something that needs to be taken care off when development has completed. The guidelines above should be used as soon as the project starts, to ensure optimal performance of the PowerCenter 8 system from the start to end of the system lifecycle.