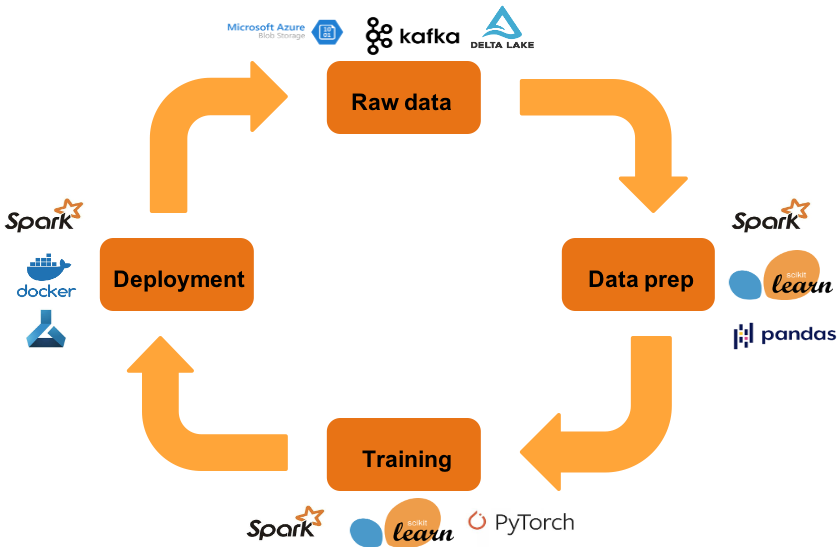

Machine Learning (ML) projects typically follow a specific lifecycle, from ingesting and preprocessing the raw data to model training and deployment. At every stage, data scientists use a myriad of frameworks, libraries and tools, each providing best-practice functionality. As a result, there is a growing need to manage the ML lifecycle and to make sure that all stages connect smoothly to each other.

This is where the concept of an ML pipeline comes into play: an end-to-end workflow, defined as a sequence of steps, that covers the whole ML lifecycle. In recent years many different lifecycle management tools have been developed. Kubeflow is one of them, offering promising features for creating and deploying ML pipelines.

What is Kubeflow?

Kubeflow is an open-source Machine Learning toolkit, created by developers at Google, Cisco, IBM and others and first released in 2017. It offers an orchestration toolkit for the Machine Learning stack to build and deploy pipelines on Kubernetes, an open-source system for automating deployment, scaling, and management of containerized applications.

Kubeflow and Kubernetes

If you are new to Kubernetes, you can look at it as a platform to schedule and run containers on clusters of physical or virtual machines, so-called Kubernetes clusters. In this sense Kubernetes and Kubeflow are both orchestration tools.

"Kubernetes is more generally used for all kinds of containerized applications, while Kubeflow is specifically developed to create and deploy Machine Learning pipelines."

Jasper Penneman - Data Science Intern

Kubeflow is specifically dedicated to making deployments of ML workflows on a Kubernetes cluster simple, portable and scalable. With Kubeflow, data scientists and engineers are able to develop a complete pipeline consisting of segmented components, whereby each component corresponds to a separate Docker container. In short, Kubeflow is useful in the following ways:

- It offers a way to manage the ML lifecycle as you can build and deploy ML pipelines.

- It is based on the containerization principle, which offers gains in efficiency for memory, CPU and storage compared to traditional virtualization and physical application hosting.

- It is developed to deploy pipelines on Kubernetes, one of the most well-known orchestration systems for containerized applications.

Getting started with Kubeflow on Azure

The basic principle is as follows: anywhere you are running Kubernetes, you should be able to run Kubeflow. Therefore, when installing Kubeflow, you first need a Kubernetes cluster in the environment of your choice (e.g. public cloud, existing Kubernetes cluster, desktop). To deploy Kubeflow on Azure, you create or select an Azure Kubernetes Service (AKS) cluster on which you will deploy Kubeflow.

Furthermore, you will also need a container registry to store the container images of the pipeline components. When working with Azure, an Azure Container Registry (ACR) should be created or selected in the same resource group as your AKS cluster. This container registry also needs to be connected with the AKS cluster so that the Kubeflow Pipelines agent can pull the images from the registry as they are needed in the pipeline.

You can find more general information on the installation of Kubeflow and specific instructions to deploy Kubeflow on Azure. Here are the essentials:

- The easiest way is to create a Kubernetes cluster using Azure CLI:

    az aks create -g <RESOURCE_GROUP_NAME> -n <NAME> -s <AGENT_SIZE> -c <AGENT_COUNT> -l <LOCATION> --generate-ssh-keys

Note: NAME is the name for your Kubernetes cluster. For demo purposes, AGENT_SIZE can be set to Standard_B2ms and AGENT_COUNT to 3.

Manage your Azure cost wisely by consciously choosing the appropriate size and number of nodes for your Kubernetes cluster. For example, for demo purposes you can work with a Standard_B2ms cluster with 2 or 3 nodes.

- Next, connect to the Kubernetes Cluster and install the downloaded Kubeflow binary:

    # Create user credentials to connect
    az aks get-credentials -n <NAME> -g <RESOURCE_GROUP_NAME>

    # Add the kfctl binary to your path
    export PATH=$PATH:<path to where kfctl was unpacked>

    # Install Kubeflow (note that your working directory should be where you unzipped the downloaded Kubeflow tar)
    export CONFIG_URI="https://raw.githubusercontent.com/kubeflow/manifests/v1.0-branch/kfdef/kfctl_k8s_istio.v1.0.2.yaml"
    kfctl apply -V -f ${CONFIG_URI}

- Finally, connect to your Kubeflow running on AKS: The default installation does not create an external endpoint but you can use port-forwarding to visit your cluster. Run the following command and visit http://localhost:8080.

    kubectl port-forward svc/istio-ingressgateway -n istio-system 8080:80
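If you prefer to script the setup, for instance from a notebook, the cluster-creation command from the first step above can be assembled in Python. This is only a sketch: the function name `build_aks_create_command` and the example values are assumptions made for illustration, it uses only the flags shown in that command, and it does not execute anything.

```python
import shlex


def build_aks_create_command(resource_group, name, location,
                             agent_size="Standard_B2ms", agent_count=3):
    """Return the `az aks create` call from the text as an argument list."""
    return [
        "az", "aks", "create",
        "-g", resource_group,
        "-n", name,
        "-s", agent_size,
        "-c", str(agent_count),
        "-l", location,
        "--generate-ssh-keys",
    ]


# Hypothetical example values; replace them with your own.
command = build_aks_create_command("my-resource-group", "kubeflow-demo", "westeurope")

# Print the command in a form that can be pasted into a shell.
print(shlex.join(command))
```

Keeping the command as a list of arguments means it could be handed to `subprocess.run(command, check=True)` on a machine where the Azure CLI is installed and logged in.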

Kubeflow Pipelines – An example

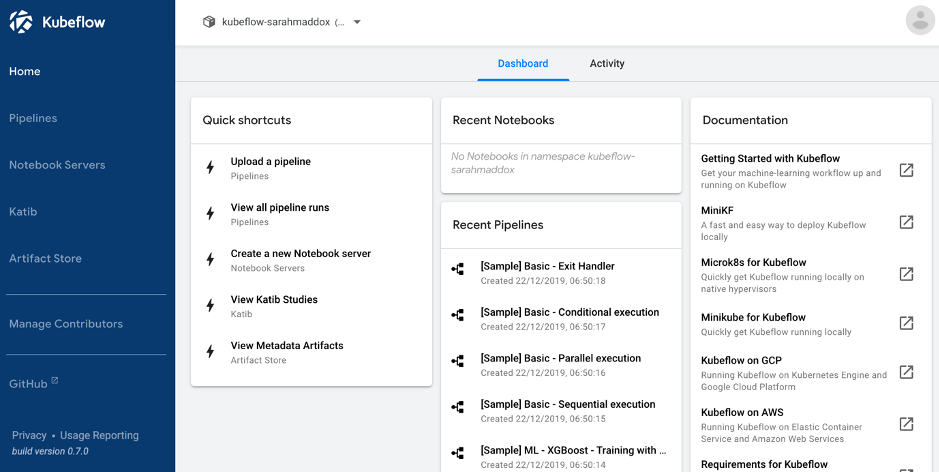

Kubeflow Pipelines is a major component of Kubeflow. It is a platform for building and deploying portable, scalable ML workflows based on Docker containers. It can be accessed in Kubeflow’s Central Dashboard by clicking on the ‘Pipelines’ tab in the left-side panel of the dashboard:

When using Kubeflow Pipelines, you always follow the same steps:

Build your pipeline >> Compile your pipeline >> Deploy your pipeline >> Run your pipeline

Before moving on to the concrete pipeline building, you should first understand the following two basic concepts in Kubeflow: pipeline and component.

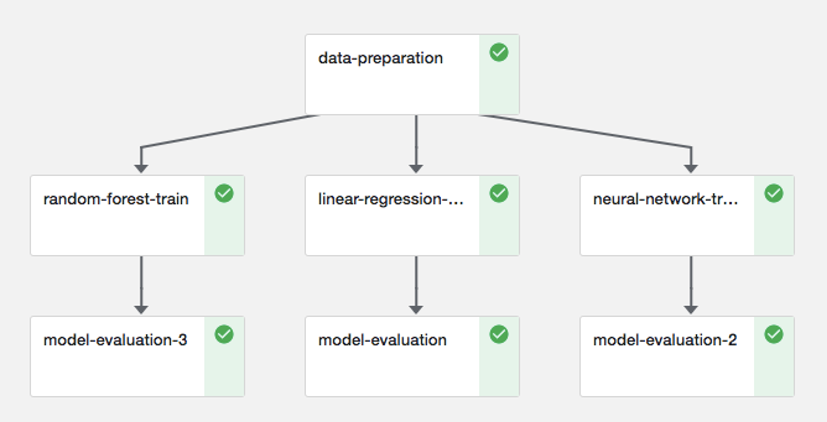

- A pipeline is a description of an ML workflow, including all the components in the workflow and how they combine in the form of a graph. The pipeline includes the definition of the inputs (parameters) required to run the pipeline and the inputs and outputs of each component. The screenshot below shows the execution graph of our example pipeline.

- A component is a self-contained set of user code, packaged as a Docker image, that performs one step in the pipeline. For example, a component can be responsible for data preprocessing, data transformation, model training, and so on.

In the example pipeline below, we have components for data preparation, for training three models, and for evaluating each model. Each component runs in a separate Docker container.

Pipeline execution graph
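To make the idea of a pipeline as a graph more concrete, the example pipeline can be written down as a plain dependency graph. The sketch below is a conceptual illustration in standard-library Python, not Kubeflow code, and the step names are labels chosen for this illustration. It groups the steps into stages, where the steps inside a stage do not depend on each other and could therefore run in parallel.

```python
from graphlib import TopologicalSorter

# Each key is a step; its value is the set of steps it depends on.
# The step names are illustrative labels for the example pipeline.
pipeline_graph = {
    "preprocess": set(),
    "linear_regression": {"preprocess"},
    "neural_network": {"preprocess"},
    "random_forest": {"preprocess"},
    "evaluate_linear_regression": {"linear_regression"},
    "evaluate_neural_network": {"neural_network"},
    "evaluate_random_forest": {"random_forest"},
}


def execution_stages(graph):
    """Group steps into stages; steps within a stage do not depend on each other."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        # All steps whose dependencies have already been completed.
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)
    return stages


for number, stage in enumerate(execution_stages(pipeline_graph), start=1):
    print(f"stage {number}: {', '.join(stage)}")
```

A real orchestrator does not have to wait for a whole stage to finish: each step can start as soon as its own dependencies are done. The staged view is just a simple way to read the graph.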

Let’s now see how to build such a pipeline.

Build your pipeline

You can build your pipeline using the Kubeflow Pipelines SDK, which provides a set of Python packages that you can use to specify and run your ML workflows and components. Remember: a component is a self-contained set of user code, packaged as a Docker image, that performs one step in the pipeline. As such, let's build a component:

Building one component

A component is built using a Python function that returns a ContainerOp object, which is an operation that will run on a specific container image. To build the ContainerOp object, you need to specify the following arguments:

- name: name of the component/step in your Kubeflow pipeline

- image: the Docker image that packages the component code: this image argument refers to the Docker image that you have built and pushed to the container registry that is connected with the Kubernetes cluster.

- Note: a Docker image is based on a Dockerfile, which is a text document that contains all the necessary requirements (e.g. packages, code, commands etc.) to run the component as an independent container.

- command: the command to start up the Docker container

- arguments: the parameters needed to run the Python script

- file_outputs: the file_outputs argument is used to pass outputs between different components in the pipeline. To avoid passing large datasets directly between components, we recommend exchanging only the path where the dataset is stored through file_outputs. In our example pipeline, the outputs (data and models) are stored in Azure Blob Storage, so only the blob name for each output is exchanged. As the example code below shows, an output is specified as an output label mapped to the path of the file inside the container that holds the output value.

Example of what a preprocessing component/step could look like:

    from kfp import dsl

    # preprocess data
    def preprocess_op(blobname_customers, blobname_spending):
        return dsl.ContainerOp(
            name='preprocess',
            image='<REGISTRY_NAME>.azurecr.io/<IMAGE_NAME>:<VERSION_TAG>',
            command=["python", "/kubeflow_demo/data_prep.py"],
            arguments=[
                '--blobname_customers', blobname_customers,
                '--blobname_spending', blobname_spending
            ],
            file_outputs={
                'blobname_data': '/blobname_data.txt'
            }
        )
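The other half of this contract lives inside the container: the script has to accept the arguments and write the output value to the file named in file_outputs. The article does not show `/kubeflow_demo/data_prep.py`, so the following is a hypothetical minimal sketch of what such a script could look like. The `--output_file` flag and the blob name `prepared/customer_data_prepared.csv` are assumptions added so the sketch can be tried outside a container, and the actual data preparation is left as a placeholder.

```python
import argparse
from pathlib import Path


def parse_args(argv=None):
    parser = argparse.ArgumentParser(description="Hypothetical data preparation step")
    parser.add_argument("--blobname_customers", required=True)
    parser.add_argument("--blobname_spending", required=True)
    # Assumed extra flag so the sketch can be tried outside a container.
    parser.add_argument("--output_file", default="/blobname_data.txt")
    return parser.parse_args(argv)


def main(argv=None):
    args = parse_args(argv)
    # Placeholder for the real work: download both blobs, join and clean them,
    # and upload the result. The blob name below is an assumed example.
    blobname_data = "prepared/customer_data_prepared.csv"
    # Writing the blob name to this file is what exposes it as an output.
    Path(args.output_file).write_text(blobname_data)
    return blobname_data
```

In the container image, the script would end with a call to `main()` so that the command and arguments of the component start it. Only the short blob name travels between components; the dataset itself stays in Blob Storage.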

When we actually build the pipeline, we create tasks by calling these Python functions, for example:

    preprocess_task = preprocess_op(
        blobname_customers='/raw/customer_data.csv',
        blobname_spending='/raw/customer_spending_data.csv'
    )

Connecting with other components

A connection between components can be implicitly implemented by the mechanism for data passing between components: each component in the pipeline uses the output(s) of previous component(s) as input for its execution. For example, each training component in our example pipeline uses the preprocessed data that was produced by the preprocessing component. Referring to an output of a previous task can be done as follows:

    task.outputs['output_label']

where the ‘output_label’ is the same as when you specify the outputs for each component (see above). For example, in order to refer to the preprocessed data produced by the preprocessing component, the output label ‘blobname_data’ should be used.

    regression_task = regression_op(
        blobname_customers=preprocess_task.outputs['blobname_data']
    )

An alternative way to define the sequence of components is to explicitly specify which components come after each other:

    regression_task.after(preprocess_task)
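The difference between the implicit and the explicit way of ordering components can be illustrated with a small toy model. The class below is not the Kubeflow Pipelines API; `ToyTask` and the `report` step are hypothetical names used only to show how consuming an output creates a dependency by itself, while `after` adds one without passing any data.

```python
class ToyTask:
    """Toy stand-in for a pipeline task; this is not the Kubeflow Pipelines API."""

    def __init__(self, name, output_labels=(), **inputs):
        self.name = name
        self.dependencies = set()
        # Each output is a reference that remembers which task produces it.
        self.outputs = {label: (self, label) for label in output_labels}
        for value in inputs.values():
            # Consuming another task's output creates an implicit dependency.
            if isinstance(value, tuple) and value and isinstance(value[0], ToyTask):
                self.dependencies.add(value[0].name)

    def after(self, *tasks):
        """Declare an explicit dependency, even without passing any data."""
        self.dependencies.update(task.name for task in tasks)
        return self


preprocess_task = ToyTask("preprocess", output_labels=["blobname_data"])

# Implicit: the regression step consumes an output of the preprocessing step.
regression_task = ToyTask(
    "regression", blobname_customers=preprocess_task.outputs["blobname_data"]
)

# Explicit: the (hypothetical) report step uses no outputs but must run later.
report_task = ToyTask("report").after(regression_task)

print(regression_task.dependencies)
print(report_task.dependencies)
```

When a task already consumes an output of another task, an additional explicit ordering between the two is redundant but harmless.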

Example pipeline for predicting Adventure Works’ customer spend

We’ll explain and illustrate an example pipeline for a fictitious bike manufacturing company, Adventure Works, which wants to predict the average monthly spending of its customers based on customer information. Their data resides on an Azure Data Lake and we’ll need components to prepare the data and to train and evaluate our Machine Learning models.

A few notes on our pipeline build:

- We assume you have already built the various components, including preprocess_op, linear_reg_op, neural_net_op, etc. Note how the outputs of previous components are passed to the next components in the pipeline following the data passing mechanism described before (e.g. “preprocess_task.output”).

- The @dsl.pipeline decorator is required and includes the name and description properties of your pipeline.

- The variables storage_account, connect_str, container_name, blobname_customers and blobname_spending are defined and passed as parameters to the components to interact with Azure Blob Storage (Azure Data Lake Storage Gen2).

    @dsl.pipeline(
        name='<PIPELINE_NAME>',
        description='<PIPELINE_DESCRIPTION>'
    )
    def aw_pipeline(
        hidden_layers,
        activation,
        learning_rate,
        max_iter,
        learning_rate_init,
        n_estimators
    ):
        # Define Azure Blob Storage used for saving outputs (data and models)
        ## Specify connection string of storage account: Storage account > Access keys > key1 - Connection string
        storage_account = '<STORAGE_ACCOUNT>'
        connect_str = '<CONNECTION_STRING>'
        ## Specify container name
        container_name = '<CONTAINER_NAME>'
        ## Specify blob names of raw data
        blobname_customers = '<BLOBNAME_CUSTOMERS_DATA>'
        blobname_spending = '<BLOBNAME_SPENDING_DATA>'

        # Pipeline tasks
        preprocess_task = preprocess_op(connect_str, container_name, blobname_customers, blobname_spending)

        linear_reg_task = linear_reg_op(connect_str, container_name, preprocess_task.output)
        linear_reg_task.after(preprocess_task)

        neural_net_task = neural_net_op(connect_str, container_name, preprocess_task.output, hidden_layers,
                                        activation, learning_rate, max_iter, learning_rate_init)
        neural_net_task.after(preprocess_task)

        random_forest_task = random_forest_op(connect_str, container_name, preprocess_task.output, n_estimators)
        random_forest_task.after(preprocess_task)

        evaluation_linreg_task = evaluation_op(connect_str, container_name, linear_reg_task.outputs['blobname_X_test'],
                                               linear_reg_task.outputs['blobname_y_test'], linear_reg_task.outputs['blobname_model'])
        evaluation_neuralnet_task = evaluation_op(connect_str, container_name, neural_net_task.outputs['blobname_X_test'],
                                                  neural_net_task.outputs['blobname_y_test'], neural_net_task.outputs['blobname_model'])
        evaluation_randomforest_task = evaluation_op(connect_str, container_name, random_forest_task.outputs['blobname_X_test'],
                                                     random_forest_task.outputs['blobname_y_test'], random_forest_task.outputs['blobname_model'])

        evaluation_linreg_task.after(linear_reg_task)
        evaluation_neuralnet_task.after(neural_net_task)
        evaluation_randomforest_task.after(random_forest_task)

After building the pipeline in a Python script, you must compile the pipeline to an intermediate representation before you can submit it to the Kubeflow Pipelines service. The compiled pipeline is basically a YAML file compressed into a .tar.gz file. This can be done as follows:

    from kfp import compiler

    compiler.Compiler().compile(aw_pipeline, __file__ + '.tar.gz')
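Because the compiled pipeline is just a YAML file inside a .tar.gz archive, it can be inspected with the Python standard library before uploading it. The sketch below is a minimal illustration under stated assumptions: the helper names are hypothetical, and `write_demo_package` only creates a stand-in archive (with an assumed member name `pipeline.yaml` and dummy content) so that the reader function can be tried without Kubeflow installed.

```python
import io
import os
import tarfile
import tempfile


def read_pipeline_yaml(package_path):
    """Return (member name, text) of the first YAML file in a .tar.gz package."""
    with tarfile.open(package_path, mode="r:gz") as archive:
        for member in archive.getmembers():
            if member.isfile() and member.name.endswith((".yaml", ".yml")):
                content = archive.extractfile(member).read()
                return member.name, content.decode("utf-8")
    raise ValueError(f"no YAML file found in {package_path}")


def write_demo_package(package_path, yaml_text):
    """Create a stand-in package so the sketch can be tried without Kubeflow."""
    data = yaml_text.encode("utf-8")
    info = tarfile.TarInfo("pipeline.yaml")  # assumed member name
    info.size = len(data)
    with tarfile.open(package_path, mode="w:gz") as archive:
        archive.addfile(info, io.BytesIO(data))


with tempfile.TemporaryDirectory() as folder:
    demo_path = os.path.join(folder, "demo_pipeline.tar.gz")
    write_demo_package(demo_path, "kind: Workflow\n")  # dummy content
    member_name, yaml_text = read_pipeline_yaml(demo_path)
    print(member_name, "->", yaml_text.strip())
```

For a real package, you would call `read_pipeline_yaml` with the path of the .tar.gz file produced by the compile step and read through the returned text.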

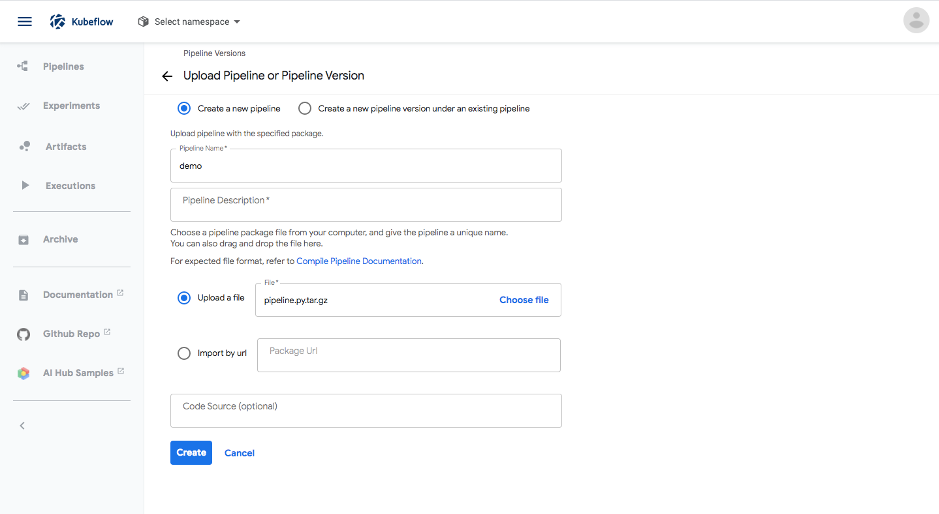

Deploy your pipeline

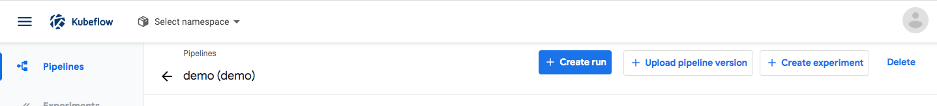

After compiling you can deploy the pipeline by uploading the generated .tar.gz file through the Kubeflow Pipelines UI:

Upload the pipeline

After clicking on ‘+ Upload pipeline’, you need to specify a name for your pipeline, upload the pipeline file and click on ‘Create’ (see the screenshot below). Now the pipeline is available in the Kubeflow Pipelines UI to create an experiment and start a pipeline run.

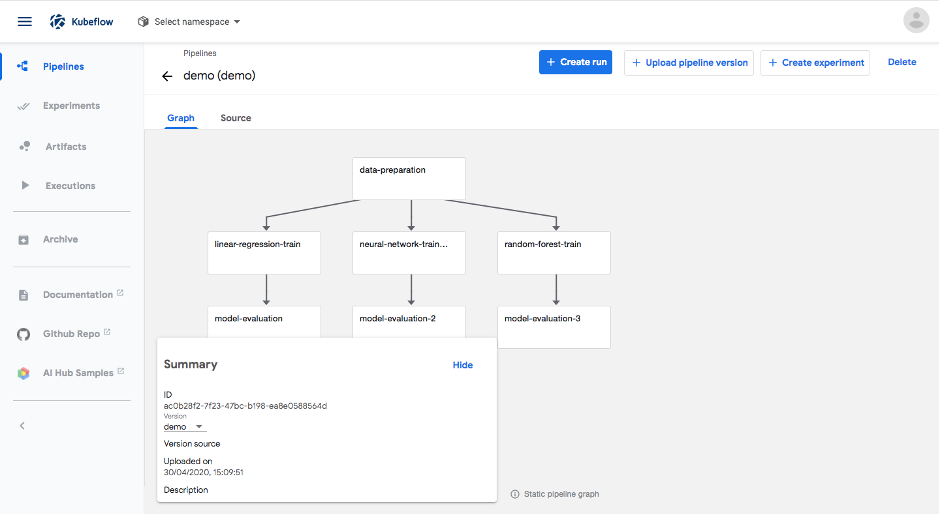

When the pipeline is uploaded successfully, you can access it under the ‘Pipelines’ tab in the left-side panel of the dashboard. When you then click on the pipeline, you get the following template graph:

Template graph of uploaded pipeline

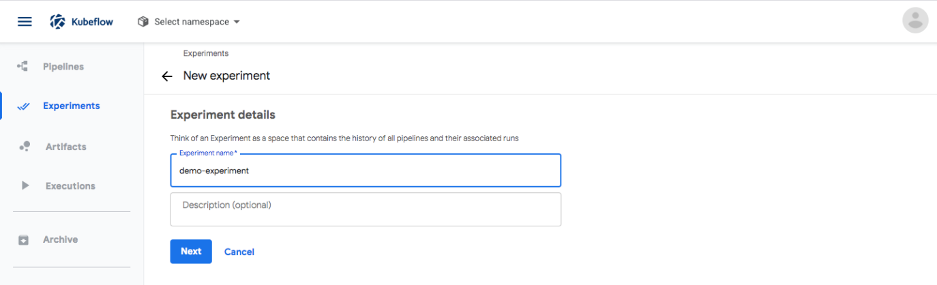

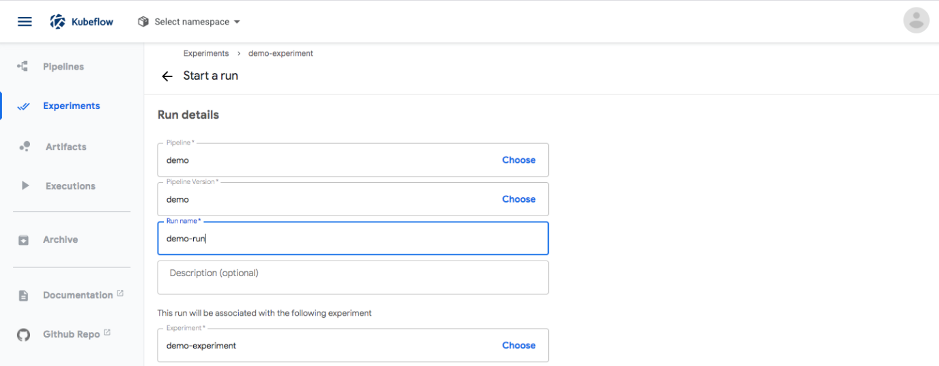

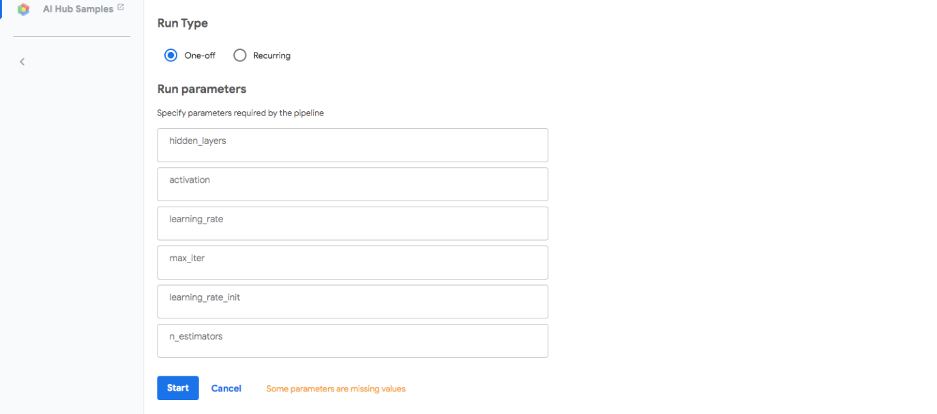

Run your pipeline

After uploading the pipeline through the Kubeflow Pipelines UI, you can create an experiment for the pipeline. Within an experiment you can start a pipeline run. These steps are very straightforward in the Kubeflow Pipelines UI (see screenshots below). When starting a run, you need to specify the pipeline parameters in the UI. This is a useful feature because it allows you to start runs with different parameter settings from within the Kubeflow Pipelines UI, without needing to adapt the code itself.
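If you plan several runs with different settings, it can help to write down the parameter combinations first. The sketch below does this in plain Python; the parameter names are those of aw_pipeline, but all values are illustrative assumptions and the function `parameter_sets` is a hypothetical helper. It only prepares the settings and does not start any run.

```python
from itertools import product

# Illustrative values only; the parameter names are those of aw_pipeline.
search_space = {
    "hidden_layers": ["50", "100,50"],
    "learning_rate_init": [0.001, 0.01],
    "n_estimators": [100, 500],
}

# Parameters kept fixed across all runs (assumed example values).
fixed_parameters = {"activation": "relu", "learning_rate": "constant", "max_iter": 200}


def parameter_sets(search_space, fixed_parameters):
    """Return one dict of pipeline parameters per combination in the search space."""
    names = list(search_space)
    combinations = product(*(search_space[name] for name in names))
    return [{**fixed_parameters, **dict(zip(names, values))} for values in combinations]


runs = parameter_sets(search_space, fixed_parameters)
print(len(runs), "runs; first:", runs[0])
```

Each dictionary in `runs` corresponds to one set of values to enter as pipeline parameters when starting a run.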

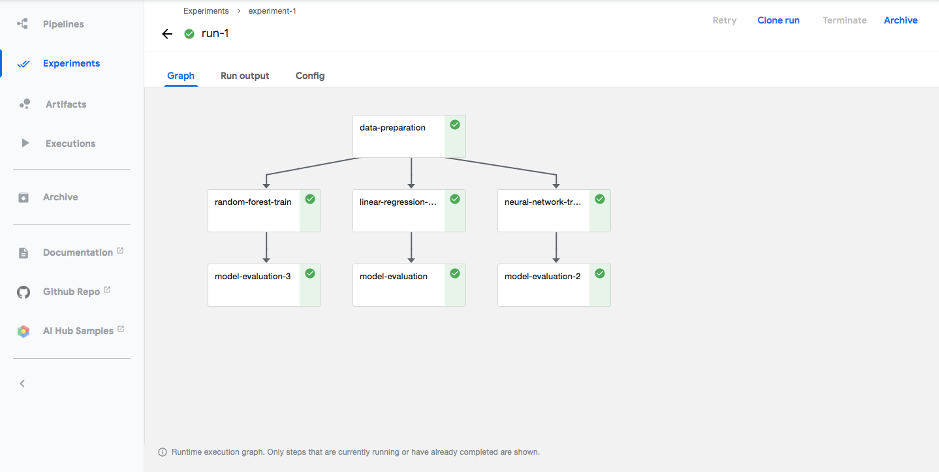

When a run is completed successfully, you can access the resulting pipeline execution graph in the Kubeflow Pipelines UI (see the screenshot below). In this dashboard, you can check the exported metrics and visualizations (e.g. ROC curve) under the ‘Run output’ tab and the more general information about the run (e.g. duration, parameters etc.) under the ‘Config’ tab. Further, you can also select a component in the graph and check the inputs/outputs as well as the logs for that component. This provides a simple way to have a clear overview of the different components in the pipeline.

Pipeline execution graph of a successful run

For more information about Kubeflow Pipelines, including more examples, see the official Kubeflow Pipelines documentation.

When is Kubeflow useful?

In which scenarios can Kubeflow be a useful tool for a data scientist?

Kubeflow provides a way to run and use Kubernetes optimized for machine learning. A common problem in ML projects nowadays is the combination of large amounts of data and a lack of processing power to train models, which is a computationally intensive task. With Kubernetes, these workloads can be parallelized more easily, which can reduce training time significantly. However, Kubeflow does not only provide a platform for model training: it covers the whole machine learning process and allows you to execute the whole ML pipeline on Kubernetes. In this sense, Kubeflow's main focus is on orchestration, and with Kubernetes in the background it shines at it.

So, when you are considering working with Kubernetes for your ML project, Kubeflow is definitely a valuable tool to build and deploy your ML pipelines. However, for data scientists without a software engineering background, it is necessary to first gain some basic knowledge about Docker and Kubernetes, as these are two important tools on which Kubeflow is based. When you understand the value of these two tools, it will become easier to work with Kubeflow.

To conclude, Kubeflow looks like a very powerful tool, backed by a large community of prominent technology companies. It offers a way to manage the ML lifecycle by creating and deploying pipelines on Kubernetes, one of the most well-known orchestration platforms nowadays.