There are many Business Intelligence solutions on the market. Many organisations struggle to select the one that suits them best and, more importantly, to fit it into the right architecture. Concerns typically include functionality, budget, timing and the sustainability of the tool.

Many companies already have a data warehouse; others don’t. Best practice, however, recommends building the end-user application on top of a data warehouse (DWH). This avoids overloading the operational database(s), allows many sources to be involved and linked together, and usually means that KPIs are already calculated in the backend, so the frontend can return results quickly.

As a Business Analyst, I focus on a few technologies such as the SAP BI Suite, Microsoft SSIS/SSRS and, most importantly, Qlik with QlikView and Qlik Sense. In this insight I will concentrate on the latter, as it has a very particular way of dealing with these challenges. Qlik is based on in-memory technology and can create its own data files, which can act as a regular, database-like data warehouse. Most developers who don’t use Qlik technologies have seen how Qlik works as a frontend solution, but most likely not in the backend. They tend to regard it as a reporting tool that “looks good” and position Qlik as a simple in-memory BI tool.

However, it is worth taking an expert look at this hybrid solution, which can be considered an “all-in-one” data warehousing solution and reporting tool that is flexible and future-proof. In this insight, I will focus on what Qlik can offer various end users on top of a solid data model, one that can be used for traditional “guided analytics” as well as “data discovery”, in order to meet all user requirements and replace all the features of a classic data warehouse.

Why a data warehouse

In earlier times, when each employee or manager maintained their own set of data, spreadsheets were populated manually from various sources. This process was time-consuming and highly vulnerable to human error.

Decision makers like spreadsheets because they feel comfortable using them. With little training, spreadsheets are extremely powerful and flexible, and inexpensive in terms of licensing. However, they raise many issues, for example around privacy, data redundancy and data currency (how up to date the figures are). Each decision maker retains their own “version of the truth”: a copy of sensitive data on their own computer or laptop.

Sharing data with other collaborators, securing it per user or group of users, and accessing up-to-date data from multiple devices are now essential to productivity. Simple spreadsheets cannot offer this in a corporate environment.

Adding Excel macros to address these challenges is possible but creates other problems, such as poor data quality and weak security, and it is very time-consuming for little result. Companies have invested heavily in spreadsheets, and the result is not very flexible.

Some companies have decided to put a dashboarding application such as Qlik, Tableau or Spotfire on top of Excel files or directly on top of the transactional database. This resolves some of the problems, such as security and user-friendliness, and gives a better overview of the data. But such data are not cleansed, can hardly be linked to each other, may contain duplicates and cannot keep a history. The dashboards will therefore most likely show wrong numbers, which can be very hard to verify in detail.

What is a data warehouse

A data warehouse is a centralized repository of consolidated data coming from one or several sources and stored on a server, on-site or in the “cloud”. You can see it as the “single version of the truth”. Consolidated data is data that has been grouped together and made coherent. Much of the information can be found in the operational data; the rest must be calculated. The information must be filtered and linked in a logical way that makes it easy to access and follows the business specifications. This structure is called a data model.

This is why, in order to build a data warehouse, a business analyst must understand the business logic and the data flows within a company. To make a long story short, a data warehouse is a database used only for analysis and reporting: it takes the operational data and aggregates, calculates and cleanses it to produce a consolidated copy used to display both high-level and detailed information.

The logic of Qlik

As stated earlier, Qlik is often referred to as a great frontend tool, while the power hidden in its backend is ignored. In developer terms, a Qlik application is a script, written in Qlik’s own SQL-like scripting language, that runs in-memory on a server. Qlik’s many frontend features are well documented online, and its data-cleansing capabilities are well recognized in the Business Intelligence world.

What is less documented is the possibility of using Qlik as a data warehouse. Qlik has created its own flat-file format, the QVD, which stores each field’s distinct values once and references them through pointers. QVD files are highly compressed and are often said to be read 10 to 100 times faster than the source database. Compression results vary depending on data content: ratios of 10:1 are sometimes quoted, but a ratio of 2 to 5 times is more typical. For example, a document that requires 200 MB of RAM will require somewhere between 40 MB and 100 MB of disk storage.
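To make the pointer idea concrete, here is a minimal Python sketch of dictionary (symbol-table) encoding, the general technique of storing each distinct value once and replacing every row by an integer pointer. It is an illustration under simplifying assumptions, not the actual QVD file layout; the helper names `encode_column`, `decode_column` and `disk_estimate_mb` are hypothetical. The last helper reproduces the 200 MB disk-size arithmetic above.

```python
from typing import Dict, List, Tuple


def encode_column(values: List[str]) -> Tuple[List[str], List[int]]:
    """Dictionary-encode a column: each distinct value is stored once in a
    symbol table, and every row becomes an integer pointer into that table."""
    symbols: List[str] = []
    position: Dict[str, int] = {}
    pointers: List[int] = []
    for value in values:
        if value not in position:
            position[value] = len(symbols)
            symbols.append(value)
        pointers.append(position[value])
    return symbols, pointers


def decode_column(symbols: List[str], pointers: List[int]) -> List[str]:
    """Rebuild the original column by following the pointers."""
    return [symbols[p] for p in pointers]


def disk_estimate_mb(ram_mb: float, min_ratio: float = 2.0,
                     max_ratio: float = 5.0) -> Tuple[float, float]:
    """Disk size range for a document, given its RAM size and a 2-5x ratio."""
    return ram_mb / max_ratio, ram_mb / min_ratio


countries = ["Belgium", "France", "Belgium", "Belgium", "France", "Germany"]
symbols, pointers = encode_column(countries)
print(symbols)                 # ['Belgium', 'France', 'Germany']
print(pointers)                # [0, 1, 0, 0, 1, 2]
print(decode_column(symbols, pointers) == countries)  # True
print(disk_estimate_mb(200))   # (40.0, 100.0)
```

The more repetitive a column is, the smaller the symbol table is relative to the row count, which is why compression depends so much on data content.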

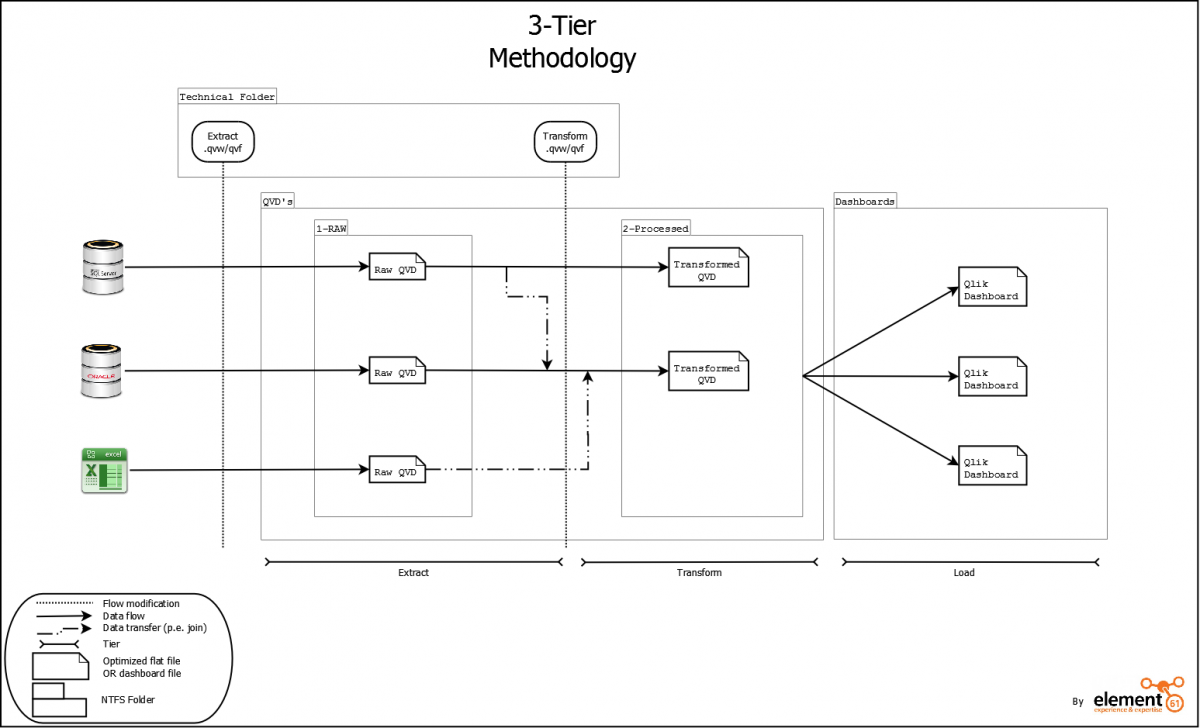

Figure 1

Can Qlik technology be considered a full data warehouse?

Saving data into flat files also makes it possible to keep historical data, which addresses one of the issues stated earlier. We can therefore consider using QVDs at each level to build a full data warehouse.
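Because the stored file is not overwritten by the source system, each reload can append a dated snapshot, building up a history that the operational database itself may not keep. A minimal Python sketch, assuming the stored table is a list of dicts and using a hypothetical `snapshot_date` field; it illustrates the principle only and is not Qlik script.

```python
from datetime import date
from typing import Any, Dict, List

Row = Dict[str, Any]


def append_snapshot(history: List[Row], current: List[Row],
                    snapshot_date: date) -> List[Row]:
    """Return the history extended with a copy of the current rows,
    each stamped with the date on which the snapshot was taken."""
    stamped = [{**row, "snapshot_date": snapshot_date} for row in current]
    return history + stamped


def as_of(history: List[Row], day: date) -> List[Row]:
    """Return the rows of the latest snapshot taken on or before `day`."""
    candidates = [r["snapshot_date"] for r in history if r["snapshot_date"] <= day]
    if not candidates:
        return []
    latest = max(candidates)
    return [r for r in history if r["snapshot_date"] == latest]


history: List[Row] = []
# The source only ever holds the current status; the stored file keeps both.
history = append_snapshot(history, [{"customer": "Acme", "status": "prospect"}], date(2024, 1, 31))
history = append_snapshot(history, [{"customer": "Acme", "status": "client"}], date(2024, 2, 29))
print(as_of(history, date(2024, 2, 15))[0]["status"])  # prospect
print(as_of(history, date(2024, 3, 1))[0]["status"])   # client
```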

The logic of a data warehouse

Business Intelligence best practices recommend three main steps: Extract, Transform and Load, commonly known as ETL.

Extract

With the help of the business people, an analyst has to choose which data to import into the data warehouse from the transactional database. This is usually the slowest step, as the transactional database is most likely not on-site (causing network latency) and has a highly normalized data model (typically in third normal form, 3NF). Qlik connects to the sources and loads the data through built-in connectors that support most, if not all, operational databases (as well as previously built data warehouses).

This step is the only one in which a Qlik application accesses the database. It connects to the different data sources and queries the necessary data in order to save it exactly as it is into QVD files. After the first run, only the latest data is extracted, as older data is loaded only the first time. Once the data is saved in QVDs, Qlik benefits from their compression and fast disk access.
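Extracting “only the latest data” is commonly done as an incremental load: find the highest modification timestamp already stored, fetch only newer rows, and merge them over the stored copy by primary key. The Python sketch below shows that pattern under stated assumptions: the field names `id` and `modified` and the function `fetch_since` are hypothetical, and the source query is simulated with a list rather than a real database connection.

```python
from datetime import datetime
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


def incremental_load(stored: List[Row],
                     fetch_since: Callable[[datetime], List[Row]],
                     key: str = "id", stamp: str = "modified") -> List[Row]:
    """Merge the rows changed since the last load into the stored copy.

    Only rows newer than the latest stored timestamp are requested from the
    source; a changed row replaces its older version (matched on `key`)."""
    last = max((r[stamp] for r in stored), default=datetime.min)
    merged = {r[key]: r for r in stored}
    for row in fetch_since(last):
        merged[row[key]] = row  # insert new rows, overwrite updated ones
    return list(merged.values())


# Simulated transactional table (hypothetical "orders" data).
source: List[Row] = [
    {"id": 1, "amount": 100, "modified": datetime(2024, 1, 1)},
    {"id": 2, "amount": 250, "modified": datetime(2024, 1, 2)},
    {"id": 3, "amount": 90, "modified": datetime(2024, 1, 2)},
]
fetched: List[int] = []  # how many rows each run pulled from the source


def fetch_since(ts: datetime) -> List[Row]:
    # Stands in for a source query such as: SELECT ... WHERE modified > ts
    rows = [r for r in source if r["modified"] > ts]
    fetched.append(len(rows))
    return rows


stored = incremental_load([], fetch_since)      # first run: full extraction
source[0] = {"id": 1, "amount": 120, "modified": datetime(2024, 1, 3)}
source.append({"id": 4, "amount": 80, "modified": datetime(2024, 1, 3)})
stored = incremental_load(stored, fetch_since)  # later run: changed rows only
print(fetched)                          # [3, 2]
print([r["amount"] for r in stored])    # [120, 250, 90, 80]
```

This sketch does not detect rows deleted in the source; a real incremental load would need an extra step for that.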

Transform

This step is very important and consists of a few smaller steps. Data must first be cleansed using Qlik’s scripting and its many built-in functions, which make cleansing in Qlik very powerful; fields are then renamed so that business users can understand them. Data cleansing can make up a large part of the transformation, but I will not cover it in detail here.
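A minimal Python sketch of the cleanse-then-rename step. The technical column names (`CUST_NM`, `CTRY_CD`, `REV`), the cleansing rules and the business-friendly names are all hypothetical examples; in Qlik this logic would live in the load script.

```python
from typing import Any, Dict

Row = Dict[str, Any]

# Hypothetical mapping from technical source names to business-friendly names.
FIELD_NAMES = {"CUST_NM": "Customer Name", "CTRY_CD": "Country Code", "REV": "Revenue"}


def cleanse(row: Row) -> Row:
    """Trim whitespace, turn empty strings into None, upper-case the
    country code and parse revenue as a number."""
    out: Row = {k: (v.strip() or None) if isinstance(v, str) else v
                for k, v in row.items()}
    if out.get("CTRY_CD"):
        out["CTRY_CD"] = out["CTRY_CD"].upper()
    if out.get("REV") is not None:
        out["REV"] = float(out["REV"])
    return out


def rename(row: Row) -> Row:
    """Replace technical field names with the names business users know."""
    return {FIELD_NAMES.get(k, k): v for k, v in row.items()}


raw = [
    {"CUST_NM": "  Acme NV ", "CTRY_CD": "be", "REV": "1200.50"},
    {"CUST_NM": "Globex", "CTRY_CD": " ", "REV": "300"},
]
clean = [rename(cleanse(r)) for r in raw]
print(clean[0])  # {'Customer Name': 'Acme NV', 'Country Code': 'BE', 'Revenue': 1200.5}
```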

The data is then transformed with a flexible and very powerful SQL-based script, enriched with many functions that help manipulate data. This makes it very easy to shape the data into the desired data model. Transformation allows batch manipulation of the data in order to make the necessary calculations according to the formulas given by the business users. It creates new fields used in the frontend, and also merges tables and creates the links between them in order to build the data model. The data model in Business Intelligence is de-normalized, which means that many tables are merged to create a data model that end users and report creators can understand.
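To illustrate de-normalization, the Python sketch below merges a normalized orders table with its customer and product lookups into one wide table and adds calculated fields. The tables, field names and the revenue and margin formulas are hypothetical stand-ins for “the formulas given by the business users”.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]

# Hypothetical normalized source tables, keyed by their IDs.
customers = {1: {"Customer": "Acme NV", "Country": "BE"},
             2: {"Customer": "Globex", "Country": "FR"}}
products = {10: {"Product": "Widget", "Unit Cost": 4.0},
            11: {"Product": "Gadget", "Unit Cost": 7.5}}
orders = [
    {"Order": 100, "CustomerID": 1, "ProductID": 10, "Quantity": 5, "Unit Price": 6.0},
    {"Order": 101, "CustomerID": 2, "ProductID": 11, "Quantity": 2, "Unit Price": 10.0},
]


def denormalize(orders: List[Row], customers: Dict[int, Row],
                products: Dict[int, Row]) -> List[Row]:
    """Merge each order with its customer and product attributes (a left
    join on the keys) and add fields calculated from business formulas."""
    fact: List[Row] = []
    for o in orders:
        row = {**o, **customers.get(o["CustomerID"], {}), **products.get(o["ProductID"], {})}
        row["Revenue"] = o["Quantity"] * o["Unit Price"]
        cost = row.get("Unit Cost")
        # Margin is left empty when the product (and so its cost) is unknown.
        row["Margin"] = None if cost is None else row["Revenue"] - o["Quantity"] * cost
        fact.append(row)
    return fact


sales = denormalize(orders, customers, products)
for r in sales:
    print(r["Order"], r["Customer"], r["Country"], r["Product"], r["Revenue"], r["Margin"])
# 100 Acme NV BE Widget 30.0 10.0
# 101 Globex FR Gadget 20.0 5.0
```

Calculating revenue and margin once, in the backend, means every dashboard reading this table shows the same figures.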

Then, every single table is saved into a QVD. Loading all the tables created by the transformation rebuilds the full data model in memory; this is why Qlik can be used as a data warehouse. The server or the frontend application refreshes the files and loads them into memory so they can be used. The data is then ready to be consumed by one or many QlikView or Qlik Sense applications, as both can read the same QVD files.
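The “one file per table” workflow can be sketched in Python, with `pickle` files standing in for QVDs. This only mimics the workflow (store each table, then reload all or some of them into memory); it neither writes nor reads the QVD format, and the folder and file naming are assumptions. Pickle files should only be loaded from trusted locations.

```python
import pickle
import tempfile
from pathlib import Path
from typing import Any, Dict, List, Optional

Table = List[Dict[str, Any]]


def store_tables(tables: Dict[str, Table], folder: Path) -> None:
    """Persist every table of the model to its own file (one file per table)."""
    folder.mkdir(parents=True, exist_ok=True)
    for name, rows in tables.items():
        with open(folder / f"{name}.pkl", "wb") as fh:
            pickle.dump(rows, fh)


def load_model(folder: Path, names: Optional[List[str]] = None) -> Dict[str, Table]:
    """Load all stored tables, or only the requested ones, back into memory."""
    model: Dict[str, Table] = {}
    for path in sorted(folder.glob("*.pkl")):
        if names is None or path.stem in names:
            with open(path, "rb") as fh:
                model[path.stem] = pickle.load(fh)
    return model


with tempfile.TemporaryDirectory() as tmp:
    layer = Path(tmp) / "transformed"  # hypothetical folder for one layer
    store_tables({
        "Sales": [{"Order": 100, "CustomerID": 1, "Revenue": 30.0}],
        "Customers": [{"CustomerID": 1, "Customer": "Acme NV"}],
    }, layer)
    full_model = load_model(layer)               # every table, as in a full reload
    dashboard = load_model(layer, ["Sales"])     # only what one dashboard needs

print(sorted(full_model))  # ['Customers', 'Sales']
print(list(dashboard))     # ['Sales']
```

The optional `names` argument mirrors the Load step, where a dashboard picks all or only some of the stored tables.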

Load

This last step is used only by the frontend. The developer or end user selects all or some of the tables needed to create the dashboard, either for guided analytics or for data discovery. When no modification is made to the data during the load (an “optimized” QVD load), it can be loaded at a rate of about one million rows per second.

After the preceding steps, the data is stored on the server in a relational form and can be accessed by a Business Intelligence tool for further reporting. This is great for data discovery, as the data is already prepared and ready to be consumed by the end user, with a low risk of error and the speed of an in-memory tool.

Leveraging data in the data warehouse helps identify business issues quickly and notify the appropriate decision maker immediately, so that serious business problems can be detected and avoided. Qlik lets you see the results of a selection across the entire schema, not just a limited subset of tables.

What are the benefits

Besides the numerous frontend benefits of QlikView and Qlik Sense, such as great data visualizations, associativity, responsive design (Qlik Sense) and low RAM usage thanks to the pointers, there are many benefits to using Qlik as a modern data warehouse.

The transactional database is read in full only once per table, which drastically reduces the load it can suffer with other technologies such as SAP BusinessObjects or Lumira. Qlik can extract data from many kinds of sources, such as Excel, XML, web pages and OLE DB databases, and has dedicated connectors for SAP and Salesforce, maintained by Qlik itself.

The core technology is based not on fixed cubes and queries but on association. Viewing data through a different selection does not require any change to the model. Users are free to create their own selections and charts, as the model automatically maintains the associations among every piece of data in the entire data set used in an application; neither developers nor end users have to maintain them. Qlik technology is also very economical as an “all-in-one” tool: a single license covers the whole product, with all its backend and frontend functionality.
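The associative idea can be illustrated with a small Python sketch: tables are linked through fields that share the same name, and a selection in one field is propagated through those shared fields until nothing changes. This is a simplified model with hypothetical tables, assuming every selected field exists in some table; Qlik’s engine is far more optimized and also distinguishes selected, possible and excluded values.

```python
from typing import Any, Dict, List, Set

Row = Dict[str, Any]


def associate(tables: Dict[str, List[Row]],
              selection: Dict[str, Set[Any]]) -> Dict[str, Set[Any]]:
    """Return the values of every field that remain possible after a selection.

    A row stays possible only if each of its values is still possible; the
    possible values of a field are those present in every table containing
    it. Filtering and recomputing are repeated until the result is stable."""
    fields = {name: set(rows[0]) if rows else set() for name, rows in tables.items()}
    live = {name: list(rows) for name, rows in tables.items()}
    allowed: Dict[str, Set[Any]] = {f: set(v) for f, v in selection.items()}
    while True:
        for name in list(live):
            live[name] = [r for r in live[name]
                          if all(f not in allowed or r[f] in allowed[f] for f in r)]
        new_allowed: Dict[str, Set[Any]] = {}
        for name, rows in live.items():
            for f in fields[name]:
                values = {r[f] for r in rows}
                new_allowed[f] = values if f not in new_allowed else new_allowed[f] & values
        if new_allowed == allowed:
            return allowed
        allowed = new_allowed


tables = {
    "Orders": [
        {"OrderID": 1, "CustomerID": "C1", "Product": "Widget"},
        {"OrderID": 2, "CustomerID": "C2", "Product": "Gadget"},
        {"OrderID": 3, "CustomerID": "C1", "Product": "Gadget"},
    ],
    "Customers": [
        {"CustomerID": "C1", "Country": "BE"},
        {"CustomerID": "C2", "Country": "FR"},
        {"CustomerID": "C3", "Country": "DE"},
    ],
}
possible = associate(tables, {"Country": {"BE"}})
print(sorted(possible["OrderID"]))   # [1, 3]
print(sorted(possible["Product"]))   # ['Gadget', 'Widget']
print(associate(tables, {"Product": {"Widget"}})["Country"])  # {'BE'}
```

Selecting a country in the customer table narrows the orders, and selecting a product narrows the countries, without any query being written for either direction.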

Conclusion

Qlik technology is very powerful. It allows the user to create a full data model based on fast flat files, loaded in-memory.

Using Qlik as the only data warehouse in your company brings many benefits for the end users as well as for the company: speed of development, a responsive product thanks to associativity, and QVDs that can be reused in many applications, which reduces the risk of errors.

This is a huge advantage compared to other technologies. Qlik can take many sources as input and allows powerful transformations and links between tables. Access to the database is reduced: while other tools copy the full database with no compression, Qlik requires only one full read per table, which considerably reduces the load on the transactional database. After that, Qlik only retrieves the changed data and adds it to the data model. The level of detail is much improved compared to a typical SQL database, and the same result requires a lot less work.

As a downside, Qlik is proprietary: its data files can only be used by a Qlik product, whereas an open data warehouse (e.g. in Oracle or SQL Server) can be accessed by any BI tool, so the vendor lock-in is bigger than in a traditional data warehouse architecture. It should also be mentioned that ETL development in Qlik is mostly done through scripting, whereas other ETL tools may offer a graphical user interface, which reduces the learning curve. On the other hand, Qlik keeps improving its products with strong backwards compatibility, and since the launch of Qlik Sense in recent years, the reliability and maturity of the technology have been well demonstrated.

Gartner recently positioned Qlik as one of the leaders among modern BI and analytics platforms, alongside two other products that do not provide ETL or require a much longer build time. This supports the view that investing in Qlik is an investment in the future of your company. For more detail on this matter, see "Qlik continues to lead while Gartner get tough".