What is dbt?

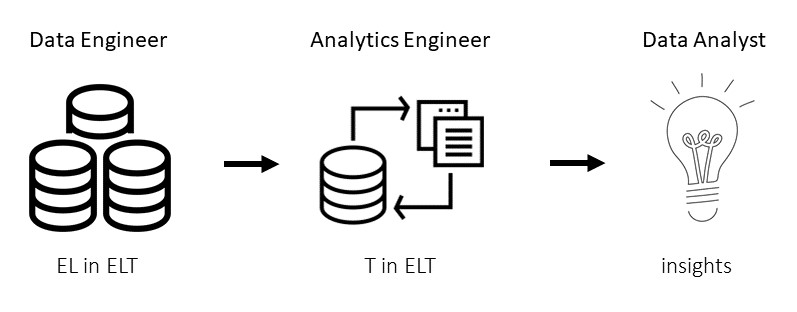

dbt is an open-source transformation tool that aims to simplify the work of the analytic engineer in the data pipeline workflow. It specifically implements only the T in the ETL process. The greatest feature is that it focuses on implementing the software principles normally only seen in the software industry, but now also introduced in the analytic world.

Main concepts:

- Model: a SQL select-statement that takes tables as input, transforms it and outputs the result as a table.

- Package: a dbt project that is a collection of SQL functions.

- Source: a source is a table in a data warehouse from which the transformations start.

- Exposure: a representation of a downstream use of a dbt project.

Why dbt?

In most of the data pipeline workflows, not much importance is attached to version control, testing and documentation, while this is an important aspect in maintaining the pipeline. A great improvement in time management is experienced when a fault in a dashboard can be quickly found through the thorough testing and data lineage graph instead of hours lost in seeking out the problem. The tests will be the first to notify the developers that something has failed instead of the client that makes use of the dashboard and notices that something is off.

Who are the targeted users?

-

dbt has two versions: dbt Cloud and dbt Command Line Interface (CLI). While the dbt Cloud has a UI that takes care of a few of the configuration and a preview of the queried data can be seen, still the same commands as in the CLI need to be run in dbt Cloud. Because of the lack of UI, dbt is not for business analysts that prefer to use tools and do as little programming as possible. dbt is more for the technical part of the analytic world that focuses on the transformations.

- dbt is the most useful in large data teams since it is designed for a particular role in the data pipeline process: the analytics engineer. This is a new special role in large data teams that solely does the transformation. dbt can then be used to satisfy all the needs of the analytics engineer. Other roles such as the data engineer and the data analyst then take care of data lake management, extraction and loading of the data into the data warehouse and the business insights.

Key functionalities

(1) Documentation

Documentation is essential when updating the data pipeline with a new feature or if another developer takes over the project. Meta data from the different tables and their relations is extracted by dbt and summarized in a clear overview.

- Possible bugs: if sources are part of the project, the generating of the documentation might stall and never finish. Just closing the terminal and then serving the documentation anyway with a new terminal will have saved the changes.

What can be documented:

- Different models that contain the transformations with information about certain details such as type and package, a description about the table, the different columns with their names, types and descriptions and which tests are present. Also the dependencies on other tables or references from other tables can be found. Both the source code and the compiled code are stored here.

- The different packages that are installed with their extra functionalities. Note: the open source community in dbt Hub already has a lot of solutions for analytic problems that are available with packages.

- The sources from which the first staging model is made, can be specified and documented. This source could for example be an Databricks-loaded Delta table.

- The different dashboards that make use of the fact and dimension tables, can be specified and defined in exposures. Note, this is just a representation, the data will not automatically flow to the dashboard.

(2) Data Lineage

a Data lineage graph is an important feature to show where your data comes from & where does it go to. A lot of data teams look for visual data lineage to display the flow of data from the source table in the data warehouse, to the different tables after transformation, to finally the dashboard that brings the insights into the data.

Limitations: you are not able to follow the data flow on column level, only on table level.

(3) Version Control

Version control with git with a chosen git repository to store the code. Models, tests, exposures, sources, configurations of the project and the different used packages are versioned.

(4) Incremental transformations

Incrementally transforming the data is a must in handling big data.

- A table can be set as incremental and then some jinja code can be added to only apply a filter on an incremental run.

- To avoid duplicate rows, a unique key can be set. To not lose too much data with a strict cut-off, a window can be used. But if the data arrives later than the time window, the data will get lost.

Important things to consider when using incremental tables:

- Column that represents time or that counts up such as a serial key.

- A row with a unique key, or a combination of rows that are unique to avoid duplicate rows.

- If updates are done to rows, a column that represents a time when the row was updated to use this row as cut-off point for which data needs to be transformed.

(5) Testing

Testing is important in ensuring data quality and to know about problems in advance. dbt provides ways to integrate testing in a data pipeline. Some tests are already shipped with dbt, while other can be found in open source package on dbt Hub. There are two kinds of tests: bespoke tests and generic tests.

- Bespoke tests are scalable tests that can be reused on different models. Small tip: the number of allowed failed rows can be set before it gives an error. It is also possible to write the failed rows to a table to observe.

- Generic tests are tested against certain models and if no rows are returned, the test passes. Any number of tables can be combined to test a certain table.

Note the testing is also documented in the data lineage graph.

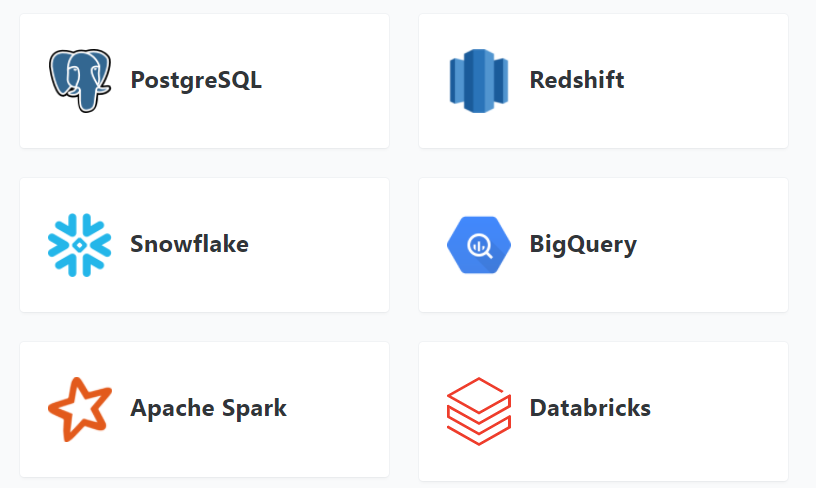

(6) Re-use

dbt can be used in combination with multiple data warehouses. A few are supported by dbt, while there are a lot more connectors to other data warehouses that are community supported.

However, a connection can be made only with one data warehouse at once. There can be switched between different data warehouses by changing environments, but no two data warehouses at the same time can be connected.

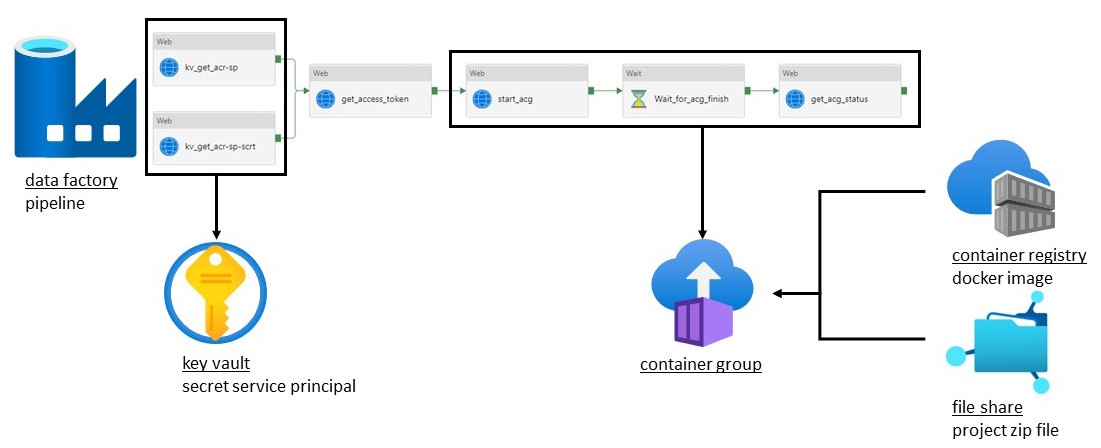

Our experience with dbt in Azure

- Compute - Spark cluster of Databricks — The queries of dbt are run against the spark cluster of Databricks.

- dbt script & project files on Data Lake File Share — The dbt project and the script with the dbt commands that need to be run are stored in the file share.

- Docker image of dbt stored on Container Registry — dbt and all its dependencies are installed in the image and pushed to a container registry.

- Running dbt on a Container Instance — dbt is run in a container instance. When starting a container instance, the docker image is pulled from the container registry and the project files come from the file share.

- Orchestration via Azure Data Factory — A trigger is sent from the Data Factory when the container instance needs to be started.

This set-up has certain limitations in our experience:

- In our case a lot of data pipelines are already in place and we have developed different acceleration packages in python to drive up the performance. To be able to use dbt, these functions needed to be written in SQL since dbt does not support python. This was not possible for a great part of the functions. The simple transformations performed when going from the raw to the clean data could directly be applied in dbt, but the optimized python functions when the data is transformed to aggregate data could not be rewritten.

- To support collaboration, a cloud environment is needed to work in teams. This is not possible with the current deployment of dbt in Azure, the dbt project needs to be developed locally before deploying it on the cloud. Another problem with this deployment is that the CLI commands such as the running of the models and the tests need to be run one after each other since they all exist in the same script and cannot be scheduled separately. This is inadequate for the requirements we have.

Conclusion

When to use dbt?

- Version control

- Automatic generated documentation and data lineage graph

- Testing and data quality

- Big data

- The developer only knows SQL select statements

- Collaboration with a git repository

- Local development

When not to use dbt?

- Transformations cannot be written in only SQL

- If a tool is needed to do the whole ELT process since dbt only does the transformation

- Collaboration with simultaneous updates is required

- Development on the cloud

(written by Kyana Bosschaerts - element61 Trainee in 2021)

Keen to know more?

Continue reading or contact us to get started:

- When to use dbt or Delta Live Tables?

- Automate your ETL testing and deliver quicker and better results