Finding the Problem

Before we can fix a problem, we need to figure out what is causing it. For this we can use standard Power BI tools or other more advanced tools - that are free to use - for a more detailed breakdown.

Power BI Performance analyzer

If certain reports or report pages are loading slowly best thing to do first is search for the root cause. Is it the way the model is set-up? Are we using too many visuals? Or is one measure taking a long time to calculate? We can start looking for our root cause by using Power BI Performance analyzer.

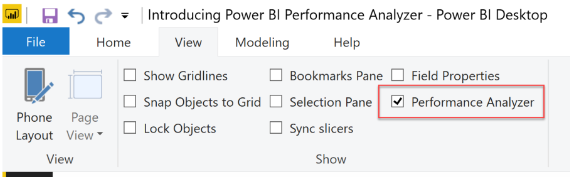

Open the report that has issues in Power BI desktop and activate “Performance Analyzer”.

A new sidebar will open that allows us to analyze the speed of visuals. Once you’re on the right page, click on “Start Recording” and then “Refresh Visuals”. Now Power BI will refresh the whole page while tracking the time it takes to update all visuals on this page. The time is split up per visual and divided into three parts.

Here is a short description of the three timing categories:

- DAX query: The time it takes between a DAX query being sent to a Tabular engine and the first row received by Power BI. The DAX query can be copied from here for later use in DAX Studio

- Visual display: Time spent to generate the visual

- Other: Time spent waiting for other operations. This is almost never the bottleneck of a visual.

If there is a specific visual that is taking a long time to load, I would suggest copying it to a new page and test this visual by itself. By isolating the visual we are sure no other components are having an impact on the performance. You can then remove fields/measures from the visual to see if one field/measure is causing the performance delay.

DAX Studio

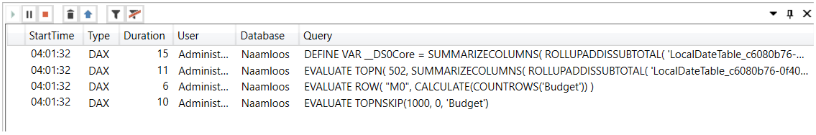

DAX Studio is a nice way to analyze a specific DAX query and break it down further than Performance Analyzer can. It will show what a DAX query is asking from our database and how it is calculating its results. DAX studio can be connected to an Analysis Server or a Power BI report with imported data. Once a connection is made, “All Queries” can be clicked to catch all queries executed from a connection.

A new tab at the bottom will open. Refresh (or refilter) Power BI to catch new queries. These will be shown in the “All Queries” tab.

Here we can clearly see which queries took longest to execute. Double clicking on a line will show the query in the DAX editor. It is a more detailed way to analyze queries compared to the standard Power BI performance analyzer. Copying a query from the performance analyzer and pasting it into DAX editor should show the same query.

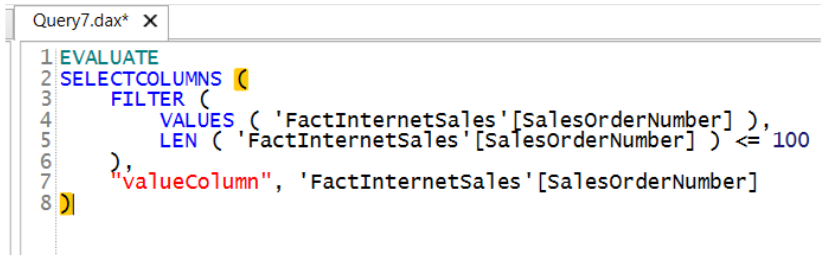

DAX studio allows us to edit DAX code. Once edited “RUN” can be used to see what effect the change has on timings. Disable “All Queries” and enable “Server Timings” to see detailed timing information about the query that is run in DAX Studio.

The timings are also split between FE (Formula engine) and SE (Storage engine). The FE will interpret a DAX formula to single logic SQL statements that are send to the SE. This SE produces data caches which will be sent back to the FE to be combined into a result set. Usually high FE can be solved by reviewing the DAX statement and SE can be solved by improving model performance. The SE will always be faster because it can run multi-threaded, so it is best to try and reduce FE usage by increasing SE usage.

VertiPaq Analyzer

VertiPaq Analyzer is mostly used to analyze a model on a more global level. For example, it can be used when bad performance is noticed on a certain table. But sometimes it can help identify problems of slow measures as well, as shown in the example below.

Let’s say we have a measure which does a distinct count on a code field but is running very slow. The slow loading time will likely be caused by the cardinality of the code column. VertiPaq analyzer can be used to easily see the exact cardinality or amount of unique values in this column. It is best to avoid distinct counts on columns with millions of distinct values.

Fields with high precision and thus usually a high cardinality can also slow down the model. Round up if a very low precision is not needed. It is also recommended to split a datetime field into date and time fields. By doing this we split a high cardinality column into two lower cardinality columns and allow a report maker to only use the columns that are necessary, thereby creating better performing reports. On the same note, try to use integers instead of strings if possible, they are stored more efficiently, which means better performance.

These are some interesting measures retrieved by the analyzer:

Optimization tips

There are a lot of ways to optimize a model and/or DAX measures. Some of them require a good understanding of the FE/SE and DAX query plan, while others can be solved by following some optimization tips and tracking down the objects that cause problems. This section contains some easy to implement optimization tips.

Nested Measures

When analyzing a slow visual using the tools shown earlier, it can become clear that a specific measure is causing the delay. DAX allows using multiple measures inside other measures. This nesting can sometimes cause a real loss in performance. Removing all the nested measures and persisting them into one measure can improve the loading speed by a substantial amount. Here is an example:

I created two measures, one calculates total gross sales based on the currency a user selects and a second one calculates quantity sold based on a unit of measure a user selects.

Gross Sales:

VAR vMeasure =

SWITCH (

MAX ( Select_Currency[CurrencyChoice_ID] ),

1, SUM ( Fact_SalesInvoices[M_SalesInvoiceGrossAmount_LC] ),

2, IF (

MIN ( Fact_SalesInvoices[ExchangeRate_Eur] ) = -9999,

"No Conversion Rate Available",

SUM ( Fact_SalesInvoices[M_SalesInvoiceGrossAmount_EUR] )

),

3, IF (

MIN ( Fact_SalesInvoices[ExchangeRate_USD] ) = -9999,

"No Conversion Rate Available",

SUM ( Fact_SalesInvoices[M_SalesInvoiceGrossAmount_USD] )

)

)

RETURN

IF (

SUM ( Fact_SalesInvoices[M_SalesInvoiceGrossAmount_LC] ) = 0,

BLANK (),

IF (

HASONEVALUE ( Select_Currency[CurrencyChoice_ID] ) = FALSE (),

"Wrong Filter Selection => Select a Currency",

IF (

DISTINCTCOUNT ( Fact_SalesInvoices[SalesInvoiceLocalCurrencyCode] ) > 1

&& MAX ( Select_Currency[CurrencyChoice_ID] ) = 1,

"Multiple Currencies Cannot Be Summated",

vMeasure

)

)

)

Quantity:

VAR vMeasure =

SWITCH (

MAX ( Select_UnitOfMeasure[UnitOfMeasure_ID] ),

1, SUM(Fact_SalesInvoices[M_SalesInvoiceQuantity_TO]),

2, SUM(Fact_SalesInvoices[M_SalesInvoiceQuantity_USTON]),

3,SUM(Fact_SalesInvoices[M_SalesInvoiceQuantity])

)

RETURN

IF (

SUM ( Fact_SalesInvoices[M_SalesInvoiceQuantity] ) = 0,

BLANK (),

IF (

HASONEVALUE ( Select_UnitOfMeasure[UnitOfMeasure_ID] ) = FALSE (),

"Wrong Filter Selection => Select a Unit Of Measure",

IF (

DISTINCTCOUNT ( Dim_OriginalUnitOfMeasures[OriginalUnitOfMeasures_ID] ) > 1

&& MAX ( Select_UnitOfMeasure[UnitOfMeasure_ID] ) = 3,

"Multiple Unit Of Measures Cannot Be Summated",

IF ( vMeasure = BLANK (), "No conversion factor available", vMeasure )

)

)

)

Next, a second level measure was created which divides these two to get a Unit Price:

IF (

ISTEXT ( [Gross Sales] ) = TRUE ()

|| ISTEXT ( [Quantity Sold] ) = TRUE (),

"Calculation not possible",

( DIVIDE ( [Gross Sales], [Quantity Sold] ) )

)

This all works very well but the Unit Price measure can get slower if the model size grows. By persisting both measures into Unit Price we saw a performance gain x10. Hopefully this is something a newer DAX engine will solve.

Unit Price (persisted):

VAR GrossSales =

SWITCH (

MAX ( Select_Currency[CurrencyChoice_ID] ),

1, SUM ( Fact_SalesInvoices[M_ SalesInvoiceGrossAmount _LC])),

2, IF(Min(Fact_SalesInvoices[ExchangeRate_Eur_T2001]) = -9999, "No Conversion Rate Available"

,SUM ( Fact_SalesInvoices[M_ SalesInvoiceGrossAmount _EUR])),

3, IF(Min(Fact_SalesInvoices[ExchangeRate_USD_T2001]) = -9999, "No Conversion Rate Available"

,SUM ( Fact_SalesInvoices[M_SalesInvoicesGrossAmount_USD]))

)

VAR QuantitySold =

SWITCH (

MAX ( Select_UnitOfMeasure[UnitOfMeasure_ID] ),

1, SUM(Fact_SalesInvoices[M_SalesInvoicesQuantity_TO]),

2, SUM(Fact_SalesInvoices[M_SalesInvoicesQuantity_USTON]),

3, SUM(Fact_SalesInvoices[M_SalesInvoicesQuantity])

)

RETURN

IF (

SUM ( Fact_SalesInvoices[M_SalesInvoicesGrossAmount_LC]) = 0

|| SUM ( Fact_SalesInvoices[M_SalesInvoicesQuantity] ) = 0,

BLANK (),

IF (

HASONEVALUE ( Select_Currency[CurrencyChoice_ID] ) = FALSE (),

"Wrong Filter Selection => Select a Currency",

IF (

HASONEVALUE ( Select_UnitOfMeasure[UnitOfMeasure_ID] ) = FALSE (),

"Wrong Filter Selection => Select a Unit Of Measure",

IF (

DISTINCTCOUNT ( Fact_SalesInvoices[SalesInvoicesLocalCurrency_ID] ) > 1

&& MAX ( Select_Currency[CurrencyChoice_ID] ) = 3,

"Multiple Currencies Cannot Be Summated",

IF (

DISTINCTCOUNT ( Dim_OriginalUnitOfMeasures[OriginalUnitOfMeasures_ID] ) > 1

&& MAX ( Select_UnitOfMeasure[UnitOfMeasure_ID] ) = 3,

"Multiple Unit Of Measures Cannot Be Summated",

GrossSales / QuantitySold

)

)

)

)

)

Tabular Model Optimizations

Aggregation

If a model is becoming too large and the query duration rises as the model size grows it can become interesting to add an aggregation table. Such a table will be aggregated before being loaded into a tabular database and will give a more high-level view of the data. This way reporting will be faster because a lot of the calculations are done beforehand. As a downside model processing will take longer.

Pre-Calculation

This can also be done for calculated tables and columns. These increase model size and result in longer refresh times. If possible, it is always best to limit the use of calculated objects and create/calculate them in the data source. Even if the calculation of this table or column happens in a view, it only needs to be calculated when the model is processed.

Keep your model slim

Remove unnecessary tables and columns. They take up resources and raise processing time. It is better to add them later. Adding something is fairly easy.

Multi-model design

Avoid using only one model when multiple models make more sense. This will allow you to optimize each model for its specific purpose. Optimizing one model for recent detailed data and another model for a global high-level overview will result in a much better performance than trying to fit it all into one model.

Properly set meta-data properties

Change the “Summarize By” property for all fields that do not need to be summarized. A field like Year will automatically be recognized as a numeric field and Power BI will summarize this field. Which is annoying for users and has a serious negative impact on performance if data is shown on a low level of granularity. For big models it is advised to avoid implicit “Summarize By” measures and always create an explicitly defined measure.

Power BI Optimizations

A visual gets slower as it has to show more data or do complicated calculations. While this is obvious, it is best to not look at optimizing the model alone. Reports can be optimized as well. For example, a report can have a few slicers and a table visual that shows data from a table. A user viewing this report will only be interested in a limited number of rows. It is always advised to add a top N filter so that not the whole unfiltered table can be selected. The data will be loaded and uncompressed into memory at every refresh. A filter like top 10.000 will not change the experience for the user but will have a big effect on performance, especially for tables with millions of rows. Just make sure to always add a visual indicator stating that you have limited the dataset.

This should be done for all visuals, but especially tables and matrixes. Always ask the question are all rows really needed? Or can we add a filter without having a big impact on the user experience? This also means limiting the number of visuals. Only show necessary visuals on one report page or split them into different pages, possibly linked with a Drill-trough.

While slicers are a great way to filter visuals and limit the size of a table visual, they should be used with some caution. Each slicer will have to filter other slicers, and this can cause slow performance if the slicers themselves are large (=referencing a column with high cardinality). Hierarchical slicers can be a possible solution if a high cardinality slicer is needed.

Though this is certainly a high-level look into Power BI Tuning, it should get you started on the correct path. For a deeper understanding of Performance Tuning of Tabular Models I advise to check out the Microsoft whitepaper Performance Tuning of Tabular models in SSAS 2012.