Predictive Analytics is becoming mainstream. In recent studies, 75% of business people confirm that they understand the concept & what it entails, yet only 25% of them actually use it. Predictive Analytics, it seems, still struggles to deliver the business value it could create.

Technology & skills are among the reasons why Predictive Analytics is not adopted by all organizations. To prevent technology & skills from blocking the use of Predictive Analytics, we see more & more predictive tools emerge.

element61 identifies three types of predictive tools. We define a predictive tool as "a tool used for building the statistical predictive model".

(1) Predictive Coding tools

The best-known & most used predictive tools are coding tools: Integrated Development Environments (IDEs) that allow code in a certain language to be written, tested and developed.

Some frequently used tools or IDEs are:

These development environments let data scientists work in their preferred coding language. The best-known & most used languages are R, Python and Scala. SAS is also broadly used but comes with an end-to-end approach: SAS code needs to run in a SAS environment and requires a licence.

Using a coding tool for predictive modeling has distinct benefits:

- Documentation: as the most popular languages are open-source (e.g. R or Python), they are supported by a strong open-source community and multiple fora

- Flexibility: through coding, a data scientist can implement almost any transformation or model

- Innovation: innovative approaches are often published as open-source packages or code snippets; these are instantly available for those using a coding tool

The main disadvantages of coding tools are:

- Knowledge & skills: working with coding tools requires specific expertise. Although R & Python are becoming mainstream among graduating students, hands-on experience has proven very important (yet hard to find).

- Stability: driven by the open-source community, coding functions and packages are continuously updated. This means that functions you rely on might be deprecated and/or rebuilt with different logic without you noticing. Once in a while a major update, such as the move to Spark 2, can require much of the existing code to be rewritten.
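A common way to guard against such silent changes in a coding tool is to record the package versions a model was validated with and check them before the model runs. The sketch below is a minimal Python illustration using only the standard library's `importlib.metadata`; the helper `check_pins` and the versions listed in `PINNED` are hypothetical examples, not recommendations:

```python
from importlib.metadata import PackageNotFoundError, version

# Hypothetical pins: the package versions an (illustrative) model was validated with.
PINNED = {
    "pandas": "2.1.4",
    "scikit-learn": "1.3.2",
}


def check_pins(pins: dict[str, str]) -> dict[str, str]:
    """Return {package: problem} for every package that is missing or differs from its pin."""
    problems = {}
    for package, expected in pins.items():
        try:
            installed = version(package)
        except PackageNotFoundError:
            problems[package] = "not installed"
            continue
        if installed != expected:
            problems[package] = f"installed {installed}, expected {expected}"
    return problems


if __name__ == "__main__":
    mismatches = check_pins(PINNED)
    for package, problem in mismatches.items():
        print(f"{package}: {problem}")
    if not mismatches:
        print("All pinned packages match.")
```

Pinning exact versions trades immediate access to the latest fixes for reproducibility: a major upgrade then becomes a deliberate, tested step rather than a surprise.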

In recent years, software vendors have tackled the above disadvantages by embracing open-source within their commercial tools while focusing on stability and ease of access. For example, Microsoft has released a versioned, enhanced distribution of R called Microsoft R Open, and SAP has embedded Python and R within its SAP Predictive Analytics tool (see further).

(2) Self-service Predictive modeling tools

Coding remains coding, however, and as a technology matures, 80% of a use-case can be built using 20% of the functions. A coding data scientist typically reuses parts of their code from one project to the next; after all, every data science use-case needs to clean, transform and normalize data.
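The kind of preparation code that travels from project to project can be sketched as a few small, reusable functions. This is a minimal, standard-library-only Python illustration; the row format, the function names and the choice of a log transform and z-score normalization are assumptions made for the example:

```python
import math
from statistics import mean, pstdev
from typing import Optional

# Assumed row format: one dict per record, column name -> numeric value (None if missing).
Row = dict[str, Optional[float]]


def clean(rows: list[Row], columns: list[str]) -> list[Row]:
    """Drop records that have a missing value in any of the given columns."""
    return [r for r in rows if all(r.get(c) is not None for c in columns)]


def log_transform(rows: list[Row], column: str) -> list[Row]:
    """Replace a skewed, non-negative column by log(1 + x)."""
    return [{**r, column: math.log1p(r[column])} for r in rows]


def normalize(rows: list[Row], columns: list[str]) -> list[Row]:
    """Z-score each column: subtract its mean, divide by its population standard deviation."""
    if not rows:
        return []
    out = [dict(r) for r in rows]
    for c in columns:
        values = [r[c] for r in rows]
        mu, sigma = mean(values), pstdev(values)
        for r in out:
            # A constant column carries no information; map it to 0.0 instead of dividing by zero.
            r[c] = (r[c] - mu) / sigma if sigma else 0.0
    return out


def prepare(rows: list[Row], numeric: list[str], skewed: list[str]) -> list[Row]:
    """Reusable pipeline: clean, then transform, then normalize."""
    rows = clean(rows, numeric)
    for c in skewed:
        rows = log_transform(rows, c)
    return normalize(rows, numeric)


customers = [
    {"age": 30, "spend": 100.0},
    {"age": None, "spend": 50.0},  # dropped by clean()
    {"age": 50, "spend": 300.0},
]
for row in prepare(customers, numeric=["age", "spend"], skewed=["spend"]):
    print(row)
```

Only the column lists change between projects; the functions themselves are what a data scientist would otherwise copy-paste.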

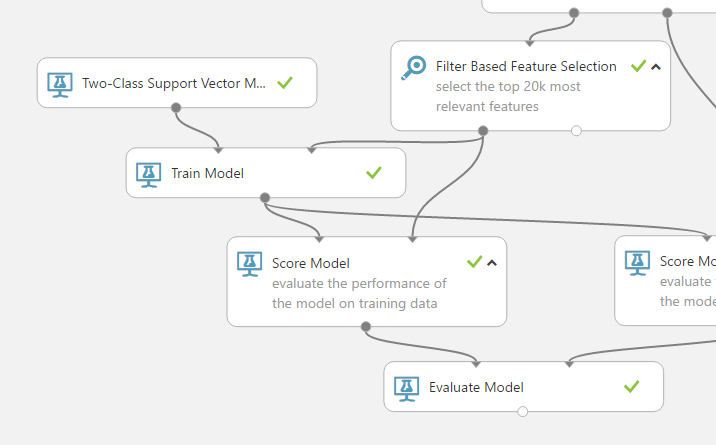

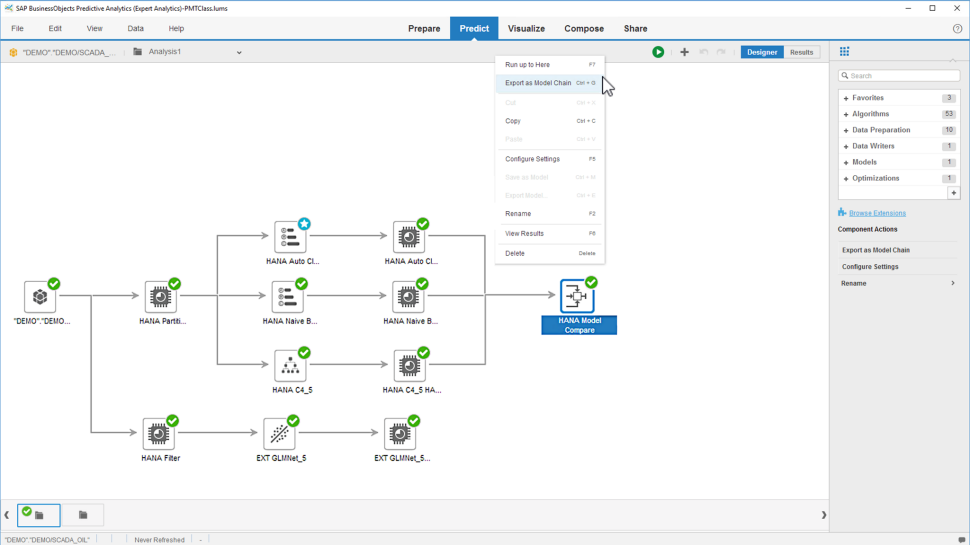

For this reason, we see the emerge of self-service tools, such as SAP Predictive Analytics or Azure Machine Learning. They are dedicated & focused to facilitating the Predictive modeling process through a UI rather than a code-base interface. Coding snippets are replaced by functions which can be dragged & dropped in a data science flow (see below). These tools are emerging as software vendors are offering the potential of Machine Learning without demanding that everyone is a coding data scientist.

These self-service predictive modeling tools enable a Citizen Data Scientist who can now, with a full-blown toolkit, deliver Predictive Modeling in an efficient yet accurate manner.

Figure 1 - Example of building, training and scoring a model within Azure Machine Learning

click to enlarge

Figure 2 - Building a ML model within SAP Predictive Analytics

click to enlarge

A coding data scientist may be reluctant to use a self-service tool because it doesn't offer the flexibility they are used to. After all, commercial self-service tools won't cover all data science functions and use-cases: they are designed on the principle that 80% of a use-case can be built using 20% of the functions. Self-service tools do, however, allow for speed & thus rapid innovation: a predictive model can be built faster, with less copy-pasting.

At element61, we believe self-service tools have real potential. As they embrace open-source coding languages such as R and Python, they let a data scientist do whatever they would do in a coding tool while benefiting from a handy UI where applicable.

(3) Embedded Predictive modeling

Some software vendors take it one step further: more and more software tools embed a flavor of predictive analytics as add-on functionality within their existing software. These tools deliver specific predictive use-cases for certain functional areas, such as Digital Marketing, or for data-rich industries such as Media, Telecom or Banking. Examples include:

- Most personalization tools leverage an embedded Next-Product-To-Buy recommendation engine - e.g. Dynamic Yield

- Most Data Management Platforms (DMPs) deliver look-alike modeling out of the box - e.g. Cxense

- CRM tools such as Salesforce are embedding predictive insights through Salesforce Einstein Discovery

In general, we welcome the embedding of predictive analytics within tools: it puts data & predictive analytics to work to create more value. However, while these embedded modeling solutions create an exciting set of opportunities, businesses should understand the trade-offs before jumping feet-first into embedded predictive tools. At element61, we urge caution on four dimensions:

Trust in the model

In most use-cases, trust matters more than model accuracy: no matter how good the data science is, it is rendered useless if the sales or marketing team doesn’t understand or trust the scores coming from the model. An embedded predictive modeling tool is often not built to provide transparency into the drivers of the model or its performance.

Nuances in modeling - e.g. correlation vs. causation

A common and often overlooked modeling pitfall is overfitting: a model that fits the noise in its training data produces predictions that are essentially random on new data. Similarly, correlation doesn't imply causation (read here for examples). An embedded predictive model doesn't take these nuances, specific to your data, into account. Embedded predictive models are only of value when the data is straightforward (e.g. product recommendations).

Business-wide use of the model

An embedded model lives inside a single tool and might therefore not allow for business-wide use. A recommendation engine embedded in your website personalization tool won't let you leverage the same recommendation engine in your printed folders.

Tailored to your needs and use-case

Embedded predictive models are out-of-the-box: they often allow for almost no parametrization. This limits flexibility, e.g. when you want to exclude a certain region from your sales forecast or a certain product offering from your product recommendations.
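When you own the scoring logic, as with a coding or self-service tool, such exclusions are usually just a parameter. A minimal Python sketch; the scores, product names, regions and the `exclude`/`exclude_regions` parameters are all hypothetical:

```python
from collections.abc import Iterable


def recommend(scores: dict[str, float], k: int = 3,
              exclude: Iterable[str] = ()) -> list[str]:
    """Return the k highest-scoring products, skipping any excluded product."""
    excluded = set(exclude)
    candidates = [(p, s) for p, s in scores.items() if p not in excluded]
    candidates.sort(key=lambda item: item[1], reverse=True)
    return [p for p, _ in candidates[:k]]


def forecast_total(forecast_by_region: dict[str, float],
                   exclude_regions: Iterable[str] = ()) -> float:
    """Sum a per-region sales forecast, leaving out excluded regions."""
    excluded = set(exclude_regions)
    return sum(v for r, v in forecast_by_region.items() if r not in excluded)


# Hypothetical model output: product scores for one customer, forecast per region.
scores = {"laptop": 0.91, "phone": 0.85, "tablet": 0.40, "headset": 0.72}
forecast = {"north": 120.0, "south": 80.0, "east": 50.0}

print(recommend(scores, k=2))                               # ['laptop', 'phone']
print(recommend(scores, k=2, exclude={"phone"}))            # ['laptop', 'headset']
print(forecast_total(forecast, exclude_regions={"south"}))  # 170.0
```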

While the concept of embedded predictive modeling sounds promising, I'm not sure people really want (or are ready for) it. At this stage, I think we all just want more control, and both self-service tools and coding tools give us that. That being said, every organizational context is specific: it's important to understand the various types of tools before settling on one or another.