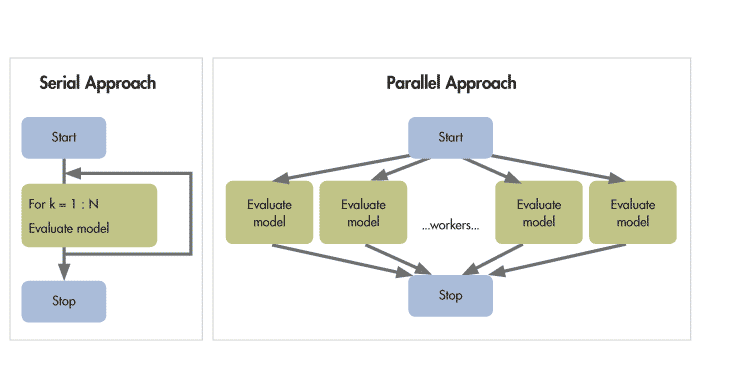

What is Parallel Computing?

Parallel computing means running multiple tasks at the same time. When building and training AI and Machine Learning algorithms, parallelism is often crucial for the following use-cases:

- To train on and handle a big dataset: we might need to work with huge datasets. Luckily, there are techniques and frameworks (e.g. Spark) that let us run computations in parallel by splitting one big task into multiple small parallel tasks. This requires parallel computing capabilities.

- To find the optimal parameters for a model: we might need to iterate through all candidate parameter combinations and compare their impact on performance. Parallelizing this process shortens our time to deliver.

- To train different models: we might need to build multiple models for comparison and/or for different scopes. Using parallelization, we can build different models at the same time, e.g. running a separate forecasting model for every product.

Parallel computing is therefore key to most Big Data and AI development.
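As a minimal sketch of the parameter-search use-case, the Python example below scores every combination in a small grid concurrently. The scoring function `evaluate`, the parameter names `depth` and `rate` and the grid values are hypothetical and only serve as illustration. A local thread pool stands in for a pool of worker nodes; for CPU-bound work on a single machine a process pool would be the more usual choice.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def evaluate(params):
    """Hypothetical scoring function: higher is better, best at depth=4, rate=0.1."""
    depth, rate = params
    return -((depth - 4) ** 2) - (rate - 0.1) ** 2


def parallel_grid_search(depths, rates, max_workers=4):
    """Score every parameter combination concurrently and return the best one."""
    grid = list(product(depths, rates))
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns the scores in the same order as the grid
        scores = list(pool.map(evaluate, grid))
    best_index = max(range(len(grid)), key=scores.__getitem__)
    return grid[best_index], scores[best_index]


best_params, best_score = parallel_grid_search([2, 4, 6], [0.01, 0.1, 0.5])
print(best_params, best_score)
```

Because each combination is scored independently of the others, the same pattern carries over to training one model per product.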

Illustrative example of Parallel computing principle

What services does Azure offer for Parallel Computing?

Azure has two core products offering parallel computing as a PaaS: Azure Batch and Azure HDInsight. Azure HDInsight has been around for multiple years while Azure Batch is pretty new and offers exciting new functionalities.

Note that Microsoft also offers AZTK, an open-source add-on that lets you run a Spark cluster on top of Azure Batch. As such, Azure Batch offers features equivalent to HDInsight for certain parallel computing use-cases.

So, what is what?

Azure HDInsight is a cluster product designed to offer distributed computing within Azure. It offers a Spark cluster as well as R Server, Kafka and Hive clusters. When working with HDInsight, you get a fully managed cluster with everything pre-configured.

Azure Batch is designed to run general-purpose parallel computing in the cloud. It doesn’t provide a fully managed, ready-to-use setup as HDInsight does, but it provides the building blocks to configure one yourself. It offers easy-to-set-up pools in which you can configure many VMs/nodes that scale with the workload being executed. Azure Batch is a perfect fit when you are running tasks that work independently of each other.

AZTK is an open-source layer on top of Azure Batch for running a Spark cluster. Through AZTK, you can use Azure Batch to run Spark jobs. Aside from the command line interface and the Python SDK for submitting jobs, you can also develop in a Jupyter Notebook connected directly to the master node.

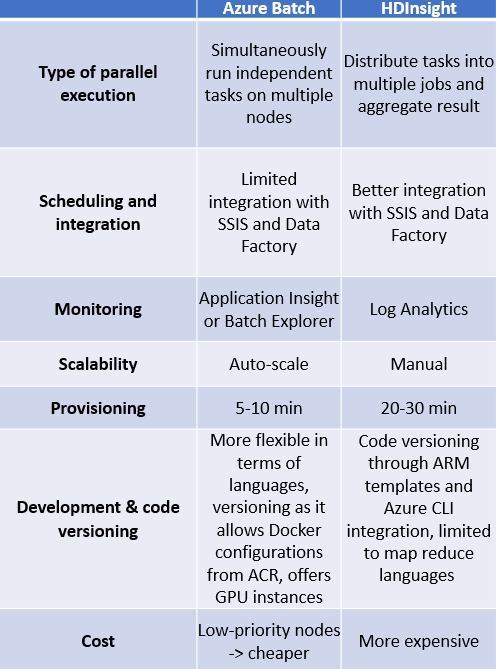

How to define the best tool for your use-case?

All three tools support parallel computing. Although there are similarities and some workloads can run on any of them, one is often preferred over the others for specific use-cases. The right tool should therefore be used for the right purpose.

Consider the following dimensions in making your choice:

- Type of Parallel execution

All tools mentioned above can be used to execute parallel workloads. Both AZTK and HDInsight can split a workload into multiple parts, distribute them and aggregate the results. Azure Batch, on the other hand, by default runs independent tasks simultaneously on multiple nodes.
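The difference between the two execution styles can be sketched in plain Python. This is an illustration only: it uses no Azure Batch, AZTK or HDInsight API, the function names are hypothetical, and a local thread pool stands in for the nodes of a cluster or pool.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Cut one big list into at most `parts` roughly equal chunks."""
    size = max(1, -(-len(data) // parts))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def distributed_sum(data, workers=4):
    """Split-and-aggregate: one workload, partial results combined at the end."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum, split(data, workers)))
    return sum(partials)


def run_independent(tasks, workers=4):
    """Independent tasks: each callable runs on its own, nothing is combined."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {name: pool.submit(task) for name, task in tasks.items()}
        return {name: future.result() for name, future in futures.items()}


total = distributed_sum(list(range(1, 101)))
forecasts = run_independent({
    "product_a": lambda: sum([10, 12, 14]) / 3,
    "product_b": lambda: sum([3, 5]) / 2,
})
print(total, forecasts)
```

`distributed_sum` mirrors the split-and-aggregate style of a Spark cluster, while `run_independent` mirrors tasks that never need to exchange results.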

AZTK is focused on providing a Spark cluster. HDInsight offers Hadoop, HBase, Interactive Query, Kafka, ML Services, Spark, Storm and R Server clusters.

Depending on your use-case, and thus the type of parallel execution, we recommend the following:

- When you need to run independent tasks that you defined yourself in parallel, use Azure Batch.

- When you are looking for a Spark cluster to speed up your ETL or AI jobs using parallelization, use AZTK or HDInsight.

- When you are looking for a Hive or R Server cluster to speed up your big data jobs, use HDInsight.

- Scheduling and integration

HDInsight is a perfect solution for ETL and Big Data processing/analytics, especially if the data processing can be expressed in one of the Hadoop query languages: Pig, Hive or Spark. Azure HDInsight is better integrated with SSIS and Azure Data Factory and can thus be used more broadly as part of ETL or automation pipelines.

At the time of writing, the integration of Azure Batch with Azure Data Factory or SSIS is limited. Triggering jobs with a custom Docker container, for example, is not possible.

- Monitoring

On this dimension, both are equally strong. HDInsight clusters can be monitored using Log Analytics. For Azure Batch, Application Insights or Batch Explorer can be used to monitor the availability, performance and usage of jobs and tasks.

- Scalability

Azure Batch can auto-scale with a formula, e.g. increase the number of compute nodes when CPU usage is high and decrease it when CPU usage is low. At the time of writing, an HDInsight cluster can only be scaled manually by its users.
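The logic behind such a formula can be sketched in Python. Note that this is not Azure Batch's own autoscale formula syntax: it is a plain function under assumed thresholds (scale up above 70% average CPU, scale down below 20%) and assumed node limits, all of which are hypothetical values chosen for illustration.

```python
def target_node_count(cpu_samples, current_nodes,
                      min_nodes=1, max_nodes=10, high=70.0, low=20.0):
    """Return the desired node count from recent CPU usage samples (percent).

    Thresholds and limits are illustrative assumptions, not Azure defaults.
    """
    if not cpu_samples:
        return current_nodes  # no data: leave the pool unchanged
    average = sum(cpu_samples) / len(cpu_samples)
    if average > high:
        desired = current_nodes + 1  # busy pool: add a node
    elif average < low:
        desired = current_nodes - 1  # idle pool: remove a node
    else:
        desired = current_nodes
    # Never go below the minimum or above the maximum pool size
    return max(min_nodes, min(max_nodes, desired))


print(target_node_count([90, 80, 85], current_nodes=3))
print(target_node_count([5, 10], current_nodes=3))
```

Changing the pool by one node per evaluation keeps the sketch simple; a real formula could scale in larger steps.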

- Provisioning

Starting a cluster on HDInsight can take 20-30 minutes, whereas Azure Batch can allocate its resources in 5-10 minutes.

Additionally, Azure Batch (and thus AZTK) allows you to use Docker images as the nodes of a pool. This gives you full flexibility, through the Dockerfile, to configure your own node with the software and dependencies you want. Azure HDInsight doesn’t allow this but rather gives you a fully managed Spark, Hive or R Server cluster with pre-configured nodes.

On provisioning flexibility and speed, Azure Batch is stronger. If, however, you are looking for a ready-to-use parallel compute service, use HDInsight.

- Development & Code versioning

For deep learning problems, Azure Batch is a strong fit because it supports GPU workloads, which can significantly speed up the training of deep learning models.

An advantage of Azure Batch over HDInsight is that it doesn’t limit you to specific languages or libraries. HDInsight clusters support Java, Python, Scala and Hadoop-specific languages by default; for other languages you will have to install additional components.

Regarding code versioning, Azure Batch can be fully configured through Python or R code. That configuration can therefore be kept under version control.

Both also support continuous integration and deployment. Azure HDInsight can be configured through ARM templates and the Azure CLI integration in your build tool. Azure Batch allows you to use Docker images from Azure Container Registry (which supports CI/CD pipelines) and to automate pool creation, through R or Python, as part of your CI/CD pipeline. Its integration with Azure Data Factory, however, currently lacks functionality.

- Cost

Azure Batch was designed to be more versatile for spinning up and terminating clusters. As a result, there is no need to keep long-running clusters alive, unlike with HDInsight. Moreover, Azure Batch offers the possibility of using Low-Priority VMs to further reduce costs when completion time is flexible.

Use Azure Batch for short-lived use-cases (ETL, AI jobs for training and scoring) and use HDInsight for long-running setups (e.g. Hive querying).
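The cost reasoning above can be made concrete with simple arithmetic. In the sketch below, the hourly rate of 1.0 (in an arbitrary currency unit), the node count, the running hours and the 50% discount are all placeholder assumptions, not actual Azure prices.

```python
def monthly_cost(hourly_rate, nodes, hours_per_day, days=30, discount=0.0):
    """Cost of running `nodes` machines for `hours_per_day` hours on each of `days` days."""
    return hourly_rate * nodes * hours_per_day * days * (1 - discount)


# Placeholder numbers only: 4 nodes at 1.0 currency unit per node-hour
always_on = monthly_cost(1.0, nodes=4, hours_per_day=24)       # long-running cluster
on_demand = monthly_cost(1.0, nodes=4, hours_per_day=2)        # pool alive 2 hours a day
discounted = monthly_cost(1.0, nodes=4, hours_per_day=2, discount=0.5)  # assumed discount

print(always_on, on_demand, discounted)
```

The comparison only holds when the workload really is short-lived; a cluster that is queried all day gains nothing from being torn down.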

Conclusion

Azure offers various services for parallelizing your AI. With the recent addition of AZTK and the new features of Azure Batch, parallelization is accessible at low cost and fully customizable.

We recommend that all readers reflect on their use-cases and use the right tool for the right purpose. We hope the framework above helps to clarify the differences and use-cases and to structure your comparison.

Our expertise

element61 has worked with all parallel computing services in Azure in production environments. We have a solid understanding of which tools best fit your organization’s use-cases and can help implement them end-to-end.

Contact us for more information.