Apache Spark is an open source cluster computing framework that reached its 1.0 release in 2014. It was originally developed in response to limitations in the Hadoop MapReduce cluster computing paradigm (read more on Hadoop) and serves as an alternative to that part of Apache Hadoop. As such, Spark complements Hadoop rather than replacing it outright: Spark takes the place of Hadoop MapReduce but, when used at scale, still requires the other Hadoop elements or equivalents, i.e., a distributed file system (e.g. Hadoop Distributed File System) and a cluster manager (e.g. Hadoop YARN).

Continue reading here about Azure Databricks - the most used Spark platform today.

The main innovation of Apache Spark (vs. Hadoop MapReduce) is the in-memory processing of data: intermediate data does not need to be written to and read from disk between steps, which saves time. As a result, Spark can outperform MapReduce in, for example, iterative operations (think of machine learning algorithms): Spark can cache the intermediate dataset in memory and run multiple iterations on this cached dataset, which significantly reduces I/O and helps the algorithm run faster.

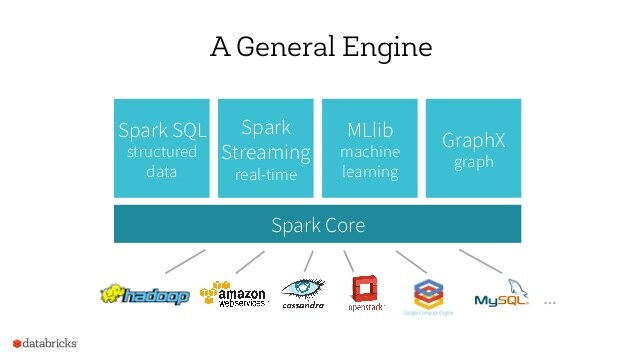
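The effect of caching on an iterative algorithm can be illustrated with a small toy model. The sketch below is plain Python, not the Spark API: `LazyDataset`, `read_from_disk` and `iterate_mean` are hypothetical names invented for this illustration, and the "disk read" is only simulated by a counter. It assumes a dataset that is reloaded on every access unless caching was requested.

```python
class LazyDataset:
    """Toy stand-in for a dataset that is reloaded on every access unless cached."""

    def __init__(self, loader):
        self._loader = loader      # function simulating an expensive disk read
        self._cached = None        # in-memory copy, filled on first load if caching is on
        self._use_cache = False
        self.loads = 0             # how many times the loader actually ran

    def cache(self):
        """Request that the data is kept in memory after the first load."""
        self._use_cache = True
        return self

    def collect(self):
        """Return the data, loading it only when no cached copy is available."""
        if self._use_cache and self._cached is not None:
            return self._cached
        self.loads += 1
        data = self._loader()
        if self._use_cache:
            self._cached = data
        return data


def read_from_disk():
    # Placeholder for an expensive read; real code would hit a file system here.
    return [1.0, 2.0, 3.0, 4.0]


def iterate_mean(dataset, iterations=10, step=0.5):
    """Iteratively move an estimate toward the mean, touching the data on every pass."""
    estimate = 0.0
    for _ in range(iterations):
        data = dataset.collect()
        gradient = sum(estimate - x for x in data) / len(data)
        estimate -= step * gradient
    return estimate


uncached = LazyDataset(read_from_disk)
cached = LazyDataset(read_from_disk).cache()

result_uncached = iterate_mean(uncached)
result_cached = iterate_mean(cached)

# Same answer either way; only the number of simulated disk reads differs.
print("loads without cache:", uncached.loads)
print("loads with cache:   ", cached.loads)
```

Both runs produce the same estimate, but the uncached dataset is loaded once per iteration while the cached one is loaded only once. That saved I/O is where the speed-up for iterative workloads comes from.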

Spark is thus a computing engine and can as such run on top of many storage systems: Hadoop HDFS, Google File System, Amazon S3, Cassandra, etc. What these storage systems have in common is that they work in a distributed way, i.e., data is spread across multiple nodes (envision them as smaller computers), in contrast with a traditional SQL database running on a single server.
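To make "data spread across multiple nodes" concrete, here is a minimal sketch in plain Python. It is not how Spark or HDFS place data; the `partition` and `local_sum` helpers and the modulo-based placement rule are assumptions chosen for illustration. Each node computes a partial result on its own share of the records, and the partial results are then combined.

```python
from collections import defaultdict


def partition(records, num_nodes):
    """Assign each (key, value) record to a node using key modulo num_nodes."""
    nodes = defaultdict(list)
    for key, value in records:
        nodes[key % num_nodes].append((key, value))
    return nodes


def local_sum(node_records):
    """Work a single node can do using only the records it holds."""
    return sum(value for _, value in node_records)


# Eight records with integer keys 1..8 and values 10..80.
records = [(i, i * 10) for i in range(1, 9)]

nodes = partition(records, num_nodes=3)

# Each node computes its partial sum independently (in a real cluster: in parallel).
partials = {node_id: local_sum(recs) for node_id, recs in nodes.items()}

# A final step combines the partial results into the overall answer.
total = sum(partials.values())

print(partials)
print(total)
```

The design point is that no single node ever needs the full dataset: the expensive work happens where the data lives, and only small partial results travel over the network.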

Spark consists of five elements: Spark Core, Spark SQL, Spark Streaming, MLlib and GraphX.

- Spark Core runs the basic functions of the Spark engine. It provides basic functionality including filters, selections, joins and mathematical operations. The Core interface abstracts the back-end for us and invokes parallel operations to return results as quickly as possible. The Core is exposed through programming interfaces in Java, Python, Scala, and R.

- Spark SQL allows you to run SQL on the Spark engine. With Spark SQL, Spark introduced DataFrames, which can be seen as structured data tables.

- Spark Streaming allows you to use Spark for streaming purposes. Simply put: it ingests data in mini-batches and performs transformations on those mini-batches.

- Spark MLlib allows you to run pre-configured machine learning algorithms on Spark, including recommenders, regressions, clustering and segmentation. Through MLlib, the Spark engine can be used to speed up the building and running of algorithms.

- GraphX allows you to run graph operations on the Spark engine.
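The mini-batch idea described for Spark Streaming in the list above can be sketched in a few lines of plain Python. This is a conceptual illustration only, not the Spark Streaming API: the `mini_batches` and `transform` functions are hypothetical, and batches are cut by item count here for simplicity, whereas a streaming engine would typically cut them by time interval.

```python
from itertools import islice


def mini_batches(stream, batch_size):
    """Yield consecutive lists of up to batch_size items from an iterable stream."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return  # stream exhausted
        yield batch


def transform(batch):
    """Example transformation: keep the even readings and double them."""
    return [x * 2 for x in batch if x % 2 == 0]


# A finite range stands in for an incoming event stream.
events = range(1, 11)

# The same transformation is applied to every mini-batch as it arrives.
results = [transform(batch) for batch in mini_batches(events, batch_size=4)]

print(results)
```

Because each mini-batch is just a small, bounded dataset, the same kind of transformation used for batch processing can be reused on streaming data.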

Contact us for more information on Spark or our Big Data support.
