Microsoft announced the rebranding of Azure SQL Data Warehouse into Azure Synapse Analytics. Because it's often unclear whether a software vendor just changed the name or actually added features, we did the homework for you and summarized the key findings. Enjoy the read!

A short introduction to Azure SQL Data Warehouse

Before going into Azure Synapse, here is what you need to know about its predecessor, Azure SQL Data Warehouse.

First released as Gen 1 in 2016, Azure SQL Data Warehouse got its Gen 2 release in 2018: a top-notch cloud-native OLAP data warehouse. The core difference between SQL Data Warehouse and a SQL Database is that the former, like its competitors, separates compute from storage: data is stored in a file system, and compute is used only when needed, with massively parallel scaling options. Azure DW was Microsoft's answer to competitors like Google BigQuery, Amazon Redshift, Presto or Snowflake.

Azure SQL Data Warehouse becomes Azure Synapse Analytics

At Ignite 2019, Microsoft announced the rebranding of Azure SQL Data Warehouse into Azure Synapse Analytics: a solution aimed at further simplifying the set-up and use of Modern Data Platforms, including their development, their use by analysts, and their management and monitoring.

What Azure Synapse Analytics brings new to the table

With Azure Synapse Analytics, Microsoft makes up for some functionality that was missing in Azure DW or in the Azure cloud in general. Synapse is thus more than a pure rebranding.

On-demand queries

With Synapse we can finally run on-demand SQL or Spark queries. Rather than spinning up a Spark service (e.g. Databricks) or resuming a Data Warehouse to run a query, we can now write our SQL or PySpark code and pay per query. On-demand queries make it much easier for analysts to take a quick look at a .parquet file (just by opening it in Synapse, see the example below) or to search the Data Lake for interesting data (using the integrated Data Catalog).

Picture: Opening a Data Lake parquet file directly in a Notebook
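As a rough sketch of what such a quick look could amount to in a notebook, the snippet below builds a Data Lake (ADLS Gen2) path and reads the first rows of a parquet file with PySpark. The storage account, container and file names are hypothetical placeholders, and `abfss_path` and `preview_parquet` are illustrative helpers of our own, not something Synapse ships. It assumes a `spark` session is already provided by the notebook.

```python
def abfss_path(account: str, container: str, relative_path: str) -> str:
    """Build an ADLS Gen2 URI: abfss://<container>@<account>.dfs.core.windows.net/<path>."""
    return (
        f"abfss://{container}@{account}.dfs.core.windows.net/"
        f"{relative_path.lstrip('/')}"
    )


def preview_parquet(spark, path: str, rows: int = 10):
    """Return a DataFrame holding the first `rows` rows of the parquet data at `path`.

    `spark` is an existing SparkSession (assumed to be provided by the notebook).
    """
    return spark.read.parquet(path).limit(rows)


# Hypothetical account, container and file names, for illustration only.
path = abfss_path("mydatalake", "raw", "/sales/2019/orders.parquet")
print(path)

# In a notebook with a Spark session attached, one would then call:
# preview_parquet(spark, path).show()
```

Keeping the path construction separate from the read makes it easy to point the same preview at another folder or file in the Data Lake.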

Integration of Storage Explorer

Data in your Data Lake isn't always easy to browse through, certainly not for a business user or analyst. Within Synapse, Microsoft has now integrated its Storage Explorer interface: a way to easily browse through the Data Lake and access all folders.

With Storage Explorer integrated, an analyst can see and access, in one interface, all the data in the Data Lake and Data Warehouse that they have access to: no more connection strings to create and share, and no need for local tools such as SQL Server Management Studio (for accessing the Data Warehouse) or Azure Storage Explorer (for browsing the Data Lake).

Picture: Browse through your Data Lake and Database datasets in one single interface

Notebooks and SQL workbench

Up to now, analysts or data scientists had to work with local notebook tools (Jupyter), Databricks and/or local SQL tools to access the different data in the Data Lake or Data Warehouse. Both Azure SQL Data Warehouse and Data Lake Store had a data explorer in preview, but the functionality was limited.

Within Synapse, Microsoft created an end-to-end analysis workbench accessible through the portal. One can write SQL queries and run them on the Data Warehouse compute or on an on-demand SQL or Spark compute. We of course all hope that we will also be able to access our Databricks clusters right from this interface.

SQL Analytics on Data Lake

Parquet is a great, highly compressed format commonly used in Data Lakes. It's great for storage but a bit more cumbersome to read and analyse: you can't simply open a parquet file in Windows; you need a tool that can read parquet (Spark or a parquet tool like ParquetViewer).

Within Synapse, Microsoft integrated SQL Analytics functionality for Data Lake formats: you can now run SQL scripts on parquet files directly in your Data Lake, e.g. by right-clicking a file and choosing 'Open in SQL Script'.

Picture: Analyse your parquet files using SQL
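To give an idea of what such a script looks like: SQL on-demand reads files in the Data Lake through the T-SQL `OPENROWSET` function. The sketch below assembles a comparable preview query as a Python string. The storage URL is a hypothetical placeholder and `parquet_preview_query` is an illustrative helper of our own, not part of any Synapse SDK. The exact script Synapse generates for you may differ.

```python
def parquet_preview_query(file_url: str, top: int = 100) -> str:
    """Build a T-SQL query that previews a parquet file via OPENROWSET."""
    if top <= 0:
        raise ValueError("top must be a positive integer")
    # Double any single quotes so the URL stays a valid T-SQL string literal.
    safe_url = file_url.replace("'", "''")
    return (
        f"SELECT TOP {top} *\n"
        f"FROM OPENROWSET(\n"
        f"    BULK '{safe_url}',\n"
        f"    FORMAT = 'PARQUET'\n"
        f") AS [result];"
    )


# Hypothetical storage account, container and path, for illustration only.
query = parquet_preview_query(
    "https://mydatalake.dfs.core.windows.net/raw/sales/2019/orders.parquet",
    top=10,
)
print(query)
```

The printed text can be pasted into a SQL script in the workbench and run on the on-demand SQL compute, without loading the file into the Data Warehouse first.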

What Azure Synapse Analytics offers that was already there before

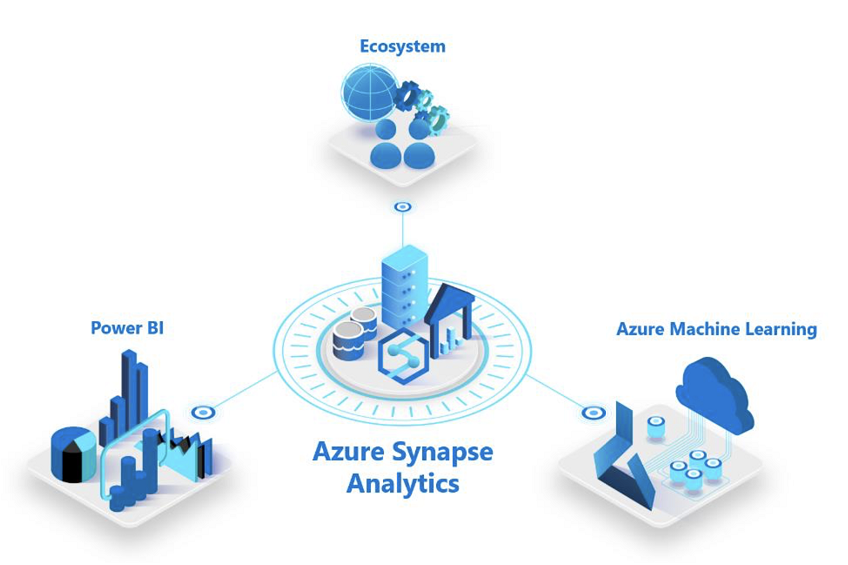

In addition to bringing new features, Azure Synapse also aims to further simplify the set-up and use of Modern Data Platforms. Rather than offering multiple tools in multiple interfaces, Microsoft delivers one interface in which a user can:

- Develop orchestration of data ingestion (powered by Azure Data Factory)

- Analyse data (using SQL or Python Notebooks) on SQL (powered by Azure SQL Data Warehouse) or Spark (powered by Apache Spark)

- Build and visualize reports in self-service mode (using Power BI)

- And manage an enterprise-grade modern Data Warehouse allowing you to build enterprise data models, facts and dimensions (powered by the Azure Data Warehouse technology)

The interface reflects this integration well and, under the hood, relies on these other services. Rather than having to spin up Data Factory and Data Warehouse separately, you thus only need to spin up one resource: Azure Synapse.

Let's get started

Are you keen on getting started with a Modern Data Platform or with Synapse in general? Get in touch and let's get you started!

More information

Continue reading on how Microsoft & element61 look at a Modern Data Platform built for end-to-end analytics, including real-time, AI analytics and big data integration.