Introduction

In order to create a sustainable solution it is important to establish a repetitive method. If you want to make sure that for each picture you take, you will also be able to make a quality print, then you need to make sure that you define each single step from the raw image up to the print; which image to start from, which functionalities to apply on the raw image and in which order, which functionalities to apply in Adobe Photoshop and in which order, how to go about when sharpening the image, what about resizing the image, etc.

I think you get the picture here :-).

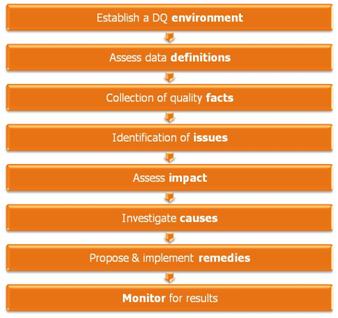

Just like the raw image to print process, aiming for data quality improvement also requires a solid workflow. This insight will elaborate on a practical step by step approach in dealing with just that.

Workflow for moving from inaccurate to accurate data

Best practice is to formalize the data quality improvement process. This can be done by properly documenting and tracking all process activities which allows for progress tracking throughout the entire process. Data quality issues should also be kept in a dedicated issues list, separate from other project issues.

The different process steps are depicted in the workflow shown underneath.

Most of the times when a single error fact is identified, a stand-alone remedy is implemented. In the methodology put forward here several additional steps are added to reach to a more solid and repeatable approach to success.

Establish a Data Quality environment

For more information on this first step we like to refer to the earlier insight "The alternative to garbage in, garbage out: a data quality program” in which we discussed the different components of a data quality program (dimensions, organization, methodologies and activities).

Assess data definitions

It is essential that the existing metadata definitions are not taken for granted. You cannot tell if something is wrong unless you can define what is right.

Most of the time, metadata found in documentation or if you are lucky, in a data modeling tool is inaccurate, incomplete or no longer relevant. It is therefore important to collect as much metadata as possible.

- Metadata on column level (data types, lengths, precisions …)

- Structural information (primary keys, foreign keys …)

- Triggers, stored procedures …

- Interface definitions feeding the database

- Data dictionaries, metadata repositories …

- Application source code performing DML in the database

All this information should be re-captured in a transparent and unique way. As a second step these findings should be completed with information from and verified by business analysts, key users, DBAs ….

In a later step the documented metadata plus the perceived metadata will be verified against the real data in the data profiling exercise.

Collection of quality facts

The most important technology for finding quality facts (or lack of quality facts) is data profiling. Data profiling uses analytical techniques to discover the true content, structure and quality of data.

The data profiling exercise itself should also follow a specific method to be most effective. Here ideally a bottom-up approach should be used. The exercise should start at the most atomic level of the data. Problems found at the lower levels can be used in the analysis at the higher level. Ideally analysts correct data inaccuracies at each level before moving to a higher level, which makes the data profiling of the higher level more successful.

Each step concentrates on a specific type or rule and verifies the rule definition(s). The process steps are as follows:

- Column property analysis. Here invalid values are encountered which are stored in a single column, independent of all other columns.

- E.g.: BusinessUnitId column properties might be: numeric & within the range 100 – 999. As such a value 010 would be an invalid value.

- Structure analysis. Here invalid combinations of valid values are encountered between columns in terms of relationships between different tables.

- E.g.: primary keys & foreign keys.

- Simple data rule analysis. This concerns rules which require that related values across multiple columns across single records are acceptable values.

- E.g.: if LicenseType = "Truck”, then DateOfBirth >= 21 years.

- Complex data rule analysis. This concerns rules that require that related values across multiple columns across multiple records are acceptable values.

- E.g.: a specific customer cannot be a business customer and a retail customer at the same time. This requires a validation of all transactional records for each customer and checking all related conditions in function of the customer type.

- Value rule analysis. Here inaccurate data is identified through aggregation numbers leading to outlier values.

- E.g.: for a column, which is expected to have an equally distributed number of occurrences for all its values, the number of occurrences for each value may indicate that one or more values have a far too low or far too high number of occurrences.

It is important to note here that data profiling does not find all inaccurate data, it can only find the violations to a specific set of predefined rules. Therefore it is crucial to start with the proper technical & business metadata definitions as discussed in the previous process step.

Data profiling will produce facts about data inaccuracies and as such it will generate metrics based on the facts.

Metrics specifics:

- For each rule, the number of violations

- For each rule, the number of tests performed that violated the rule

- For each invalid values, the frequency of each

- The number of rows containing at least one wrong value

- Chart with errors found by data element

- The number of key violations (primary key, primary-foreign key orphans …)

- Chart of data rules executed and the number of violations returned

- Breakdown of errors based on data entry locations

- Breakdown of errors based on data creation date

As such an entire data source can be graded, which dependent on the situation could be useful of not. This gradation will be a computed value that weighs each rule based on its importance and the number of violations associated with it.

Metrics can be useful to demonstrate to the management that the process is finding inaccurate facts. Next to that, metrics can also show the progress in terms of how far a certain database is already analyzed. Finally metrics can also qualify data when data profiling is executed on external data which is (to be) bought or when data profiling is executed on internal data which is required to populate for example a data warehouse.

On the other hand the metrics do not identify the problem, they only provide an indication that a certain problem exists. It is a certainty that not 100% of all inaccuracies will ever be found, after all it is impossible to accurately estimate the percentage of inaccuracies.

Identification of issues

In a way this is the real output of the fact collection phase. Here the idea is that not each individual quality fact or violation will be analyzed on an individual basis, but that a number of facts are converted into a single issue, which is then taken further.

An issue can be defined as a problem that has surfaced, which is clearly defined and which either is costing the organization something valuable (money, time, customers …) or has the potential of costing the organization something valuable.

One or multiple facts can result in a single issue. Most likely these groups of problems have a single root cause. Some examples:

- 20% of the purchase orders have no supplier id. Here a single fact results in a single issue.

- Customer name & address data show various problems; invalid names, blank address fields, city names misspelled, blank zip codes … Here several facts can be grouped into one issue. Addressing each inaccuracy fact in a stand-alone way would be an inefficient use of time.

Ideally the issues are recorded in a database. In this database a clear description of the findings / facts is given, the data source is identified, samples are given, the extraction date is mentioned, meeting minutes are added, owners are appointed …

If possible all open issues should be reviewed on a periodic basis in order to keep the problems highly visible until they are no longer problems.

Assess impact

Now that we have found certain data quality issues, what is next? It is necessary to justify the development of defining and deploying corrective actions.

- What are the operational & tactical costs associated with the defects?

- Cost of rework, cost of lost customers, cost of late reporting, cost of incorrect reporting, cost of wrong decisions, cost of lost production, cost of poor service, cost of end-users not using or even bypassing systems, cost of people spending time in dealing with data quality issues.

- What are the project costs?

- Projects running over budget due to lack of correctly understanding of the data quality issues present in the data.

- Many or large data quality issues might often be factors for even cancelling projects.

- Thinking upfront of data quality issues and prevention may enable the organization to finish projects sooner and as such support the organization in their "asap” business needs.

Here we need to look for

- Impacts already happening. E.g. two departments using different supplier identifiers. Here one could easily, for example, calculate the discount value difference which is missed out.

- Time spent in correcting wrong information. E.g. on invoices sent out.

- Hidden costs: delayed payments, invoices rejected by customers …

- Impacts not yet happening, which are typically the most dangerous ones.

Investigate causes

Essentially before remedies can be produced, there is a need for identifying the root causes of the identified wrong values. Only then improvements in quality can really structurally occur.

Two basic approaches can be followed here; error cluster analysis and data event analysis.

Error cluster analysis tries to narrow down the sources of errors. Here the information contained in the database is used to provide clues to where the inaccuracies may be coming from. The collection of all these erroneous record lines which have violations to predefined rules forms the initial analysis set. Within this set we need to look for data elements which show a certain affinity, which can be data source location (e.g. a certain sales rep), customer information (e.g. only first-time customers), dates (e.g. specific range of dates), product characteristics (e.g. expensive products only) … In the end, we are looking for any factor that may indicate a starting point in examining the cause of errors.

Data event analysis studies the events where data is created and changed in order to help identify the root causes of problems. The points of examination can be the points at which data is extracted and loaded into another data store or the data capture process.

Specifically the data capture process is the most important point where data can be made accurate or inaccurate for that matter. It is important that all data capture points are identified properly.

- Some data is captured only once

- Some data is updated on an exception base only

- Some data is updated / enhanced over a long period of time

- Some of these points can happen on multiple forms (internet, mail, application ...)

Building a diagram of the data paths of a business object, identifying the distinct points of data capture, and specifying the characteristics of each is a time-consuming, but extremely important task. Also CRUD matrices (Create, Read, Update & Delete) could help out here. It is best practice to make this an essential part of any serious software application project.

Within the data capture process, the following characteristics are worthwhile zooming in on.

- Time between the actual event & the recording of it in the database

- Physical distance between the actual event & the recording of it in the database

- Number of manual or oral stations, through which information passes through before recording it in the database

- Motivation of persons responsible for recording the data

- Availability of all facts at the time of recording the data

- Ability to verify information at the moment of recording the data

- Skills and experience of the persons responsible of recording the data

- Feedback in terms of data checkers, confirmation buttons, etc. provided by the software to the recorder

- Software automatic assistance in the recording process

- Error checking in the recording process

Propose & implement remedies

Remedies are changes to systems that were previously designed and build in order to prevent data inaccuracies from happening (again) in the future and in order to detect as many inaccuracies as possible when they occur. Without remedies, problems are likely to persist, or even get worse. Remedies are mostly designed to improve the quality of new data being entered as opposed to fixing the data that is already there.

They should be a trade-off

- Between quick improvements through patching an existing system versus long-term reengineering the data processes and application programs.

- Between making changes to primary systems versus data cleansing to fix problems when moving data.

Remedies can consist of

- Improving data capture

Redesign data entry screens and their associated logic; appropriate training of data entry staff; replacing the entry process; providing meaningful feedback; changing motivations to encourage quality.

- Adding defensive checkers

Defensive checkers assist in enforcing rules at the point of data entry to prevent invalid values, invalid combinations of invalid values and structural problems from getting into the database in the first place. This can be done in the data entry screen, in the application logic and add database level.

- Performing periodic data profiling

This consists of adding programs that run periodically over the database to check for the conformance to rules that are not practical to execute at transaction level.

- Using data cleansing techniques & programs

- Reengineering and re-implementing the application

This is only done in extreme cases. Mostly this is not a solution solely for data quality reasons, but the data quality issues become additional justification for this change.

- Better educating user community on data quality in general

- Changing the data entry and / or associated process

Some best practices

- Go for continuous (and many) short-term improvements. Improvement of data quality is not a goal in itself. Reaching the additional business benefits, in a reasonable amount of time, is. Short-term improvements will definitely result in a number of quick-wins, but in the end, they will be thrown away as they are getting replaced or absorbed by more durable long-term improvement projects.

- This might lead to throwaway efforts in order to result in some short-time improvements while waiting for long-term projects to complete.

- Avoid remedies ending up on a to-do list, which then get dropped in favor of other projects.

- Avoid temporary improvements becoming permanent. An issue should stay open, as long as it is not fully addressed.

- Monitor the results of an implemented remedy.

- Do not over-engineer. Do not require that all data rules discovered should be implemented as transaction checks. A balance should be reached between catching as many errors as possible and sustaining an acceptable performance at transaction level and data-entry user friendliness. Therefore it is essential that the rule set is prioritized based on the probability of errors occurring and the importance of an inaccurate value.

- Do not try to get implementation commitments on individual issues, which could result in resistance. Instead try combining issues into change initiatives. These initiatives will anyway have a bigger impact on the value returned for the organization.

- The implementation of the remedies itself should also be monitored closely as a quality assurance effort. After all why would it be executed in a perfect way if other projects or not 100% perfect either?

Monitor for results

It is essential to continue monitoring the database after remedies have been implemented.

This monitoring provides two benefits. Firstly the improvement effort is validated towards its positive impact and at the same time the value provided for the business is quantified. Secondly the database is checked for the occurrence of new problems.

Conclusion

Let's take a practical example: Assume a "blue” hospital, in which for all patients, amongst- other-things the medical procedure and the gender are recorded.

Via data profiling, the data quality team discovered that certain procedures which are recorded are not possible for certain genders, leading to inaccurate facts. Instead of immediately solving the issues, first the potential causes are investigated. The actual root cause here is that the information is first handwritten by the doctors, leading to certain mistakes, missing data, etc. and afterwards the data entry staff enters the information as quickly as possible in the system, next to their other daily tasks.

The following remedies were proposed and implemented: a process change by providing an online data entry process and as such omitting the hand written step. Secondly a set of data checkers were introduced (e.g. gender versus procedure, age versus procedure …). Thirdly clear text descriptions of the procedures were made available next to the procedure codes. Finally education was done on the importance of entering the data correctly, next to providing insight in the benefits of future analyses based on the initial information.

Because of the appropriate remedies, based on a thorough investigation of the causes, the number of errors prevented not only included those which were initially detected as error facts, but many others which were not known at first sight.

Conclusion: Improving data quality can only be done properly when a certain workflow is followed at all times. Now get out and take some pictures :-).